Cloud Native Catalog

Easily import any catalog item into Meshery. Have a design pattern to share? Add yours to the catalog.

Meshery CLI

Import using mesheryctl; see the docs for steps.

1. Apply a pattern file.

mesheryctl pattern apply -f [file | URL]

2. Onboard an application.

mesheryctl app onboard -f [file-path]

3. Apply a WASM filter file.

mesheryctl exp filter apply --file [GitHub Link]

AWS cloudfront controller

MESHERY481b

RELATED PATTERNS

Istio Operator

MESHERY4a76

AWS CLOUDFRONT CONTROLLER

Description

This YAML file defines a Kubernetes Deployment for the ack-cloudfront-controller, a component responsible for managing AWS CloudFront resources in a Kubernetes environment. The Deployment specifies that one replica of the pod should be maintained (replicas: 1). Metadata labels are provided for identification and management purposes, such as app.kubernetes.io/name, app.kubernetes.io/instance, and others, to ensure proper categorization and management by Helm. The pod template section within the Deployment spec outlines the desired state of the pods, including the container's configuration. The container, named controller, uses the ack-cloudfront-controller:latest image and will run a binary (./bin/controller) with specific arguments to configure its operation, such as AWS region, endpoint URL, logging level, and resource tags. Environment variables are defined to provide necessary configuration values to the container. The container exposes an HTTP port (8080) and includes liveness and readiness probes to monitor and manage its health, ensuring the application is running properly and is ready to serve traffic.

Caveats and Considerations

1. Environment Variables: Verify that the environment variables such as AWS_REGION, AWS_ENDPOINT_URL, and ACK_LOG_LEVEL are correctly set according to your AWS environment and logging preferences. Incorrect values could lead to improper functioning or failure of the controller.
2. Secrets Management: If AWS credentials are required, make sure the AWS_SHARED_CREDENTIALS_FILE and AWS_PROFILE environment variables are correctly configured and the referenced Kubernetes secret exists. Missing or misconfigured secrets can prevent the controller from authenticating with AWS.
3. Resource Requests and Limits: Review and adjust the resource requests and limits to match the expected workload and available cluster resources. Insufficient resources can lead to performance issues, while overly generous requests can waste cluster resources.
4. Probes Configuration: The liveness and readiness probes are configured with specific paths and ports. Ensure that these endpoints are correctly implemented in the application. Misconfigured probes can result in the pod being killed or marked as unready.
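The environment-variable caveat can be checked mechanically before the design is applied. The following is a minimal sketch, assuming the Deployment has already been parsed into a Python dictionary; the helper name `missing_env_vars` and the sample manifest are hypothetical and are not part of Meshery or ACK.

```python
# Variable names taken from the caveat above.
REQUIRED_ENV = ("AWS_REGION", "AWS_ENDPOINT_URL", "ACK_LOG_LEVEL")


def missing_env_vars(deployment, required=REQUIRED_ENV):
    """Return {container name: [required variables it does not define]}."""
    containers = deployment["spec"]["template"]["spec"]["containers"]
    report = {}
    for container in containers:
        defined = {entry["name"] for entry in container.get("env", [])}
        missing = [name for name in required if name not in defined]
        if missing:
            report[container["name"]] = missing
    return report


# Hypothetical, trimmed-down manifest used only for illustration.
sample_deployment = {
    "spec": {"template": {"spec": {"containers": [
        {
            "name": "controller",
            "env": [
                {"name": "AWS_REGION", "value": "us-west-2"},
                {"name": "ACK_LOG_LEVEL", "value": "info"},
            ],
        }
    ]}}}
}

print(missing_env_vars(sample_deployment))
```

The check only confirms that each variable is declared; whether its value suits your AWS environment still needs a manual review.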

Technologies

Related Patterns

Istio Operator

MESHERY4a76

AWS rds controller

MESHERY46e4

RELATED PATTERNS

Istio Operator

MESHERY4a76

AWS RDS CONTROLLER

Description

This YAML manifest defines a Kubernetes Deployment for the ACK RDS Controller application. It orchestrates the deployment of the application within a Kubernetes cluster, ensuring its availability and scalability. The manifest specifies various parameters such as the number of replicas, pod template configurations including container settings, environment variables, resource limits, and security context. Additionally, it includes probes for health checks, node selection preferences, tolerations, and affinity rules for optimal scheduling. The manifest encapsulates the deployment requirements necessary for the ACK RDS Controller application to run effectively in a Kubernetes environment.

Caveats and Considerations

1. Resource Allocation: Ensure that resource requests and limits are appropriately configured based on the expected workload of the application to avoid resource contention and potential performance issues.
2. Security Configuration: Review the security context settings, including privilege escalation, runAsNonRoot, and capabilities, to enforce security best practices and minimize the risk of unauthorized access or privilege escalation within the container.
3. Probe Configuration: Validate the configuration of liveness and readiness probes to ensure they accurately reflect the health and readiness of the application. Incorrect probe settings can lead to unnecessary pod restarts or deployment issues.
4. Environment Variables: Double-check the environment variables provided to the container, ensuring they are correctly set and necessary for the application's functionality. Incorrect or missing environment variables can cause runtime errors or unexpected behavior.
5. Volume Mounts: Verify the volume mounts defined in the deployment, especially if the application requires access to specific data or configuration files. Incorrect volume configurations can result in data loss or application malfunction.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Accelerated mTLS handshake for Envoy data planes

MESHERY4421

RELATED PATTERNS

prometheus-operator-crd-cluster-roles

MESHERY4571

ACCELERATED MTLS HANDSHAKE FOR ENVOY DATA PLANES

Description

Cryptographic operations are among the most compute-intensive and critical operations when it comes to secured connections. Istio uses Envoy as the “gateways/sidecar” to handle secure connections and intercept the traffic. Depending upon use cases, when an ingress gateway must handle a large number of incoming TLS and secured service-to-service connections through sidecar proxies, the load on Envoy increases. The potential performance depends on many factors, such as size of the cpuset on which Envoy is running, incoming traffic patterns, and key size. These factors can impact Envoy serving many new incoming TLS requests. To achieve performance improvements and accelerated handshakes, a new feature was introduced in Envoy 1.20 and Istio 1.14. It can be achieved with 3rd Gen Intel® Xeon® Scalable processors, the Intel® Integrated Performance Primitives (Intel® IPP) crypto library, CryptoMB Private Key Provider Method support in Envoy, and Private Key Provider configuration in Istio using ProxyConfig.

Caveats and Considerations

Ensure networking is set up properly and the correct annotations are applied to each resource for the custom Intel configuration.

Technologies

Related Patterns

prometheus-operator-crd-cluster-roles

MESHERY4571

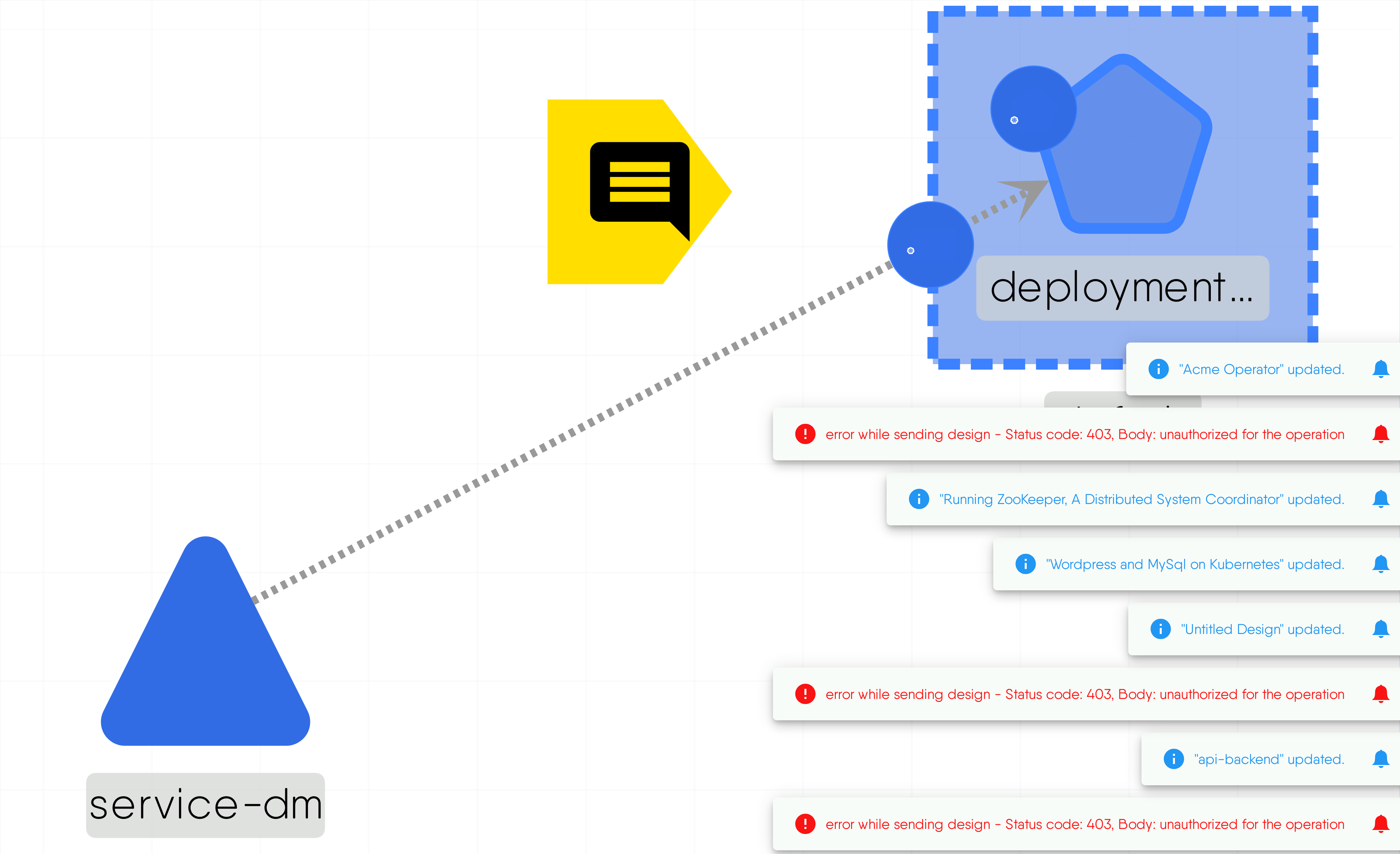

Acme Operator

MESHERY4627

RELATED PATTERNS

ACME OPERATOR

Description

Let’s Encrypt uses the ACME protocol to verify that you control a given domain name and to issue you a certificate. To get a Let’s Encrypt certificate, you’ll need to choose a piece of ACME client software to use.

Caveats and Considerations

We recommend that most people start with the Certbot client. It can simply get a cert for you or also help you install, depending on what you prefer. It’s easy to use, works on many operating systems, and has great documentation.

Technologies

Related Patterns

Amazon Web Services IoT Architecture Diagram

MESHERY449f

RELATED PATTERNS

Istio Operator

MESHERY4a76

AMAZON WEB SERVICES IOT ARCHITECTURE DIAGRAM

Description

This comprehensive IoT architecture harnesses the power of Amazon Web Services (AWS) to create a robust and scalable Internet of Things (IoT) ecosystem.

Caveats and Considerations

It cannot be deployed because the nodes used to create the diagram are shapes and not components.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Apache Airflow

MESHERY41d4

RELATED PATTERNS

My first k8s app

MESHERY496d

APACHE AIRFLOW

Description

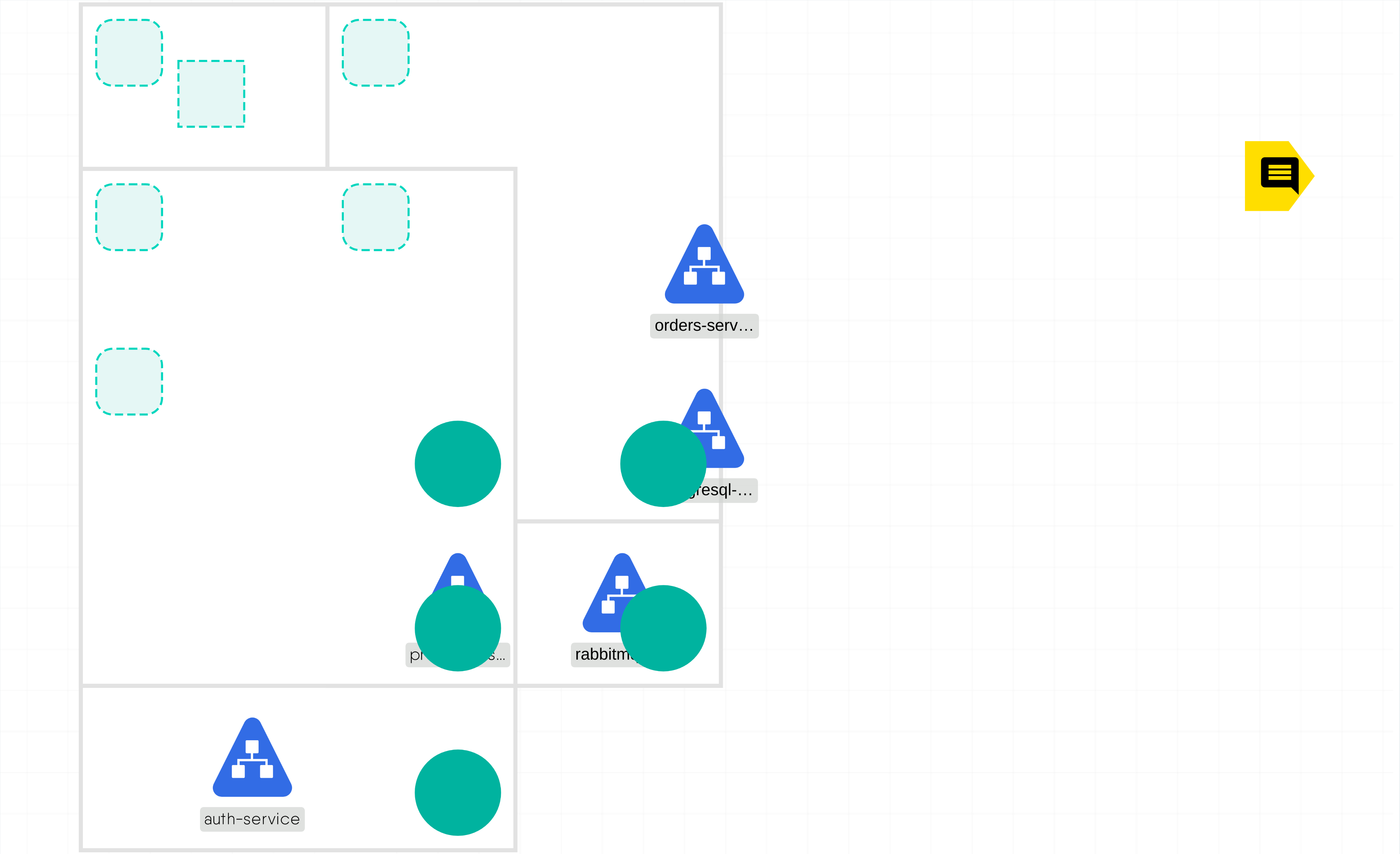

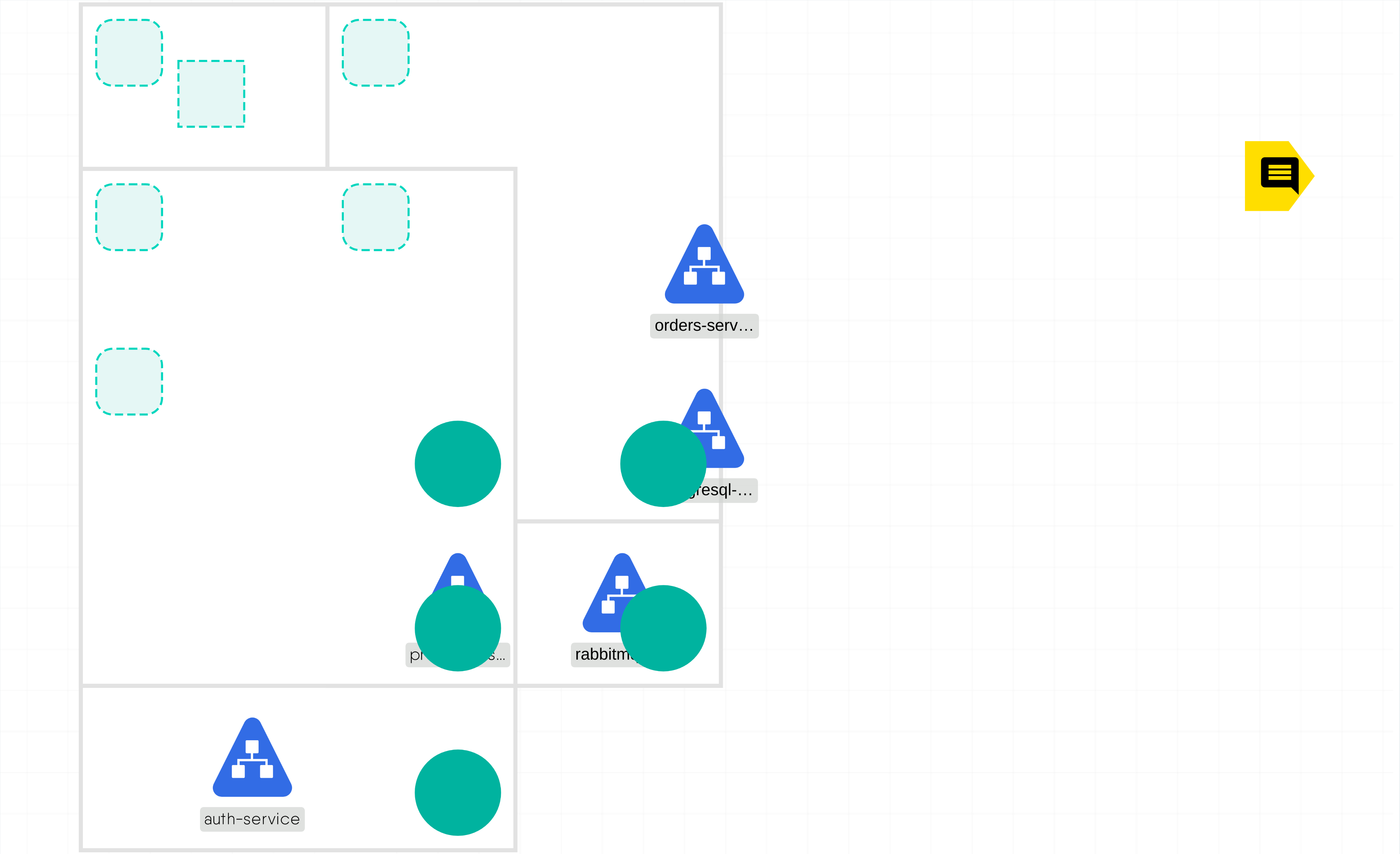

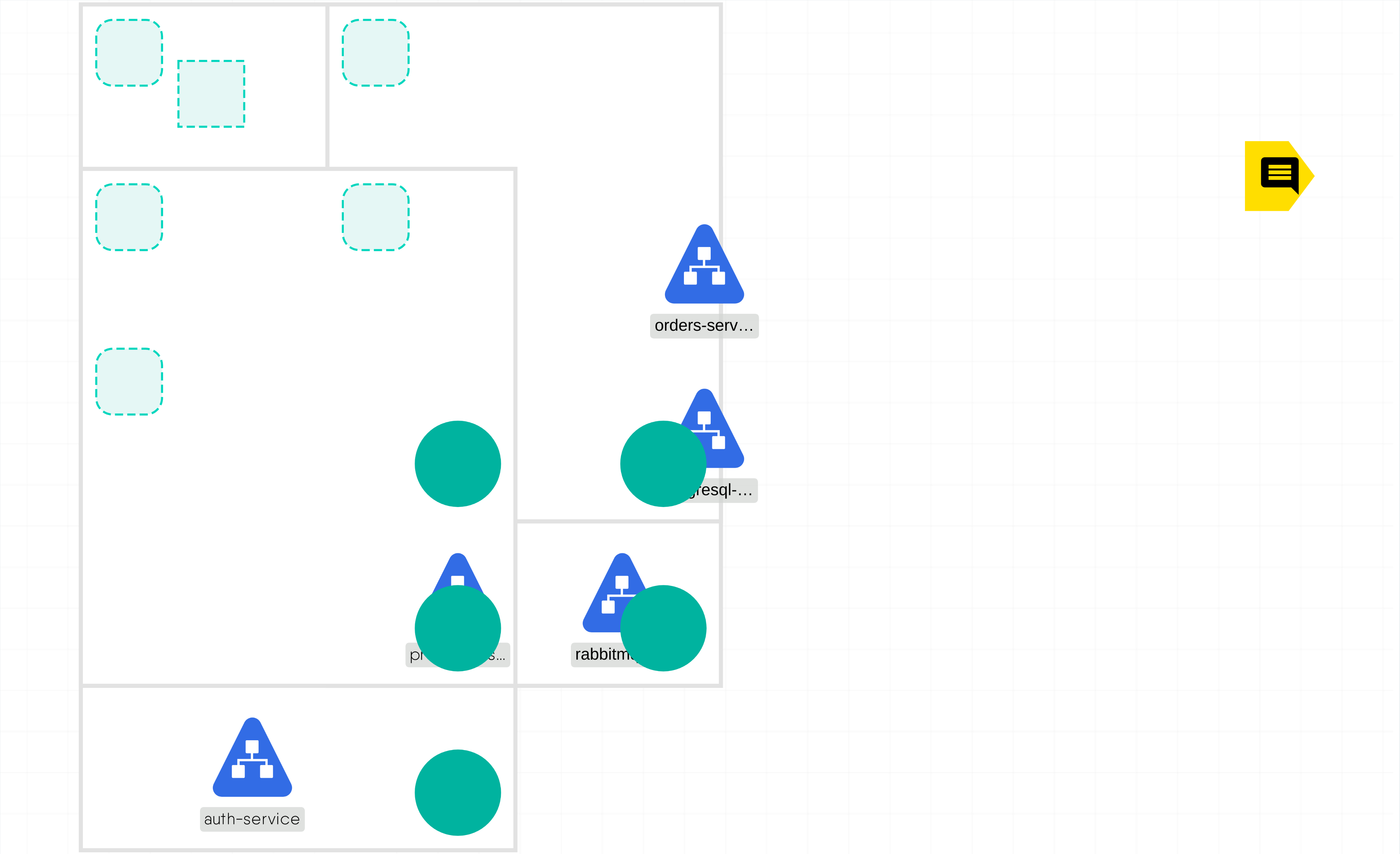

Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative.

Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

Airflow works best with workflows that are mostly static and slowly changing. When the DAG structure is similar from one run to the next, it clarifies the unit of work and continuity. Other similar projects include Luigi, Oozie and Azkaban.

Airflow is commonly used to process data, but has the opinion that tasks should ideally be idempotent (i.e., results of the task will be the same, and will not create duplicated data in a destination system), and should not pass large quantities of data from one task to the next (though tasks can pass metadata using Airflow's XCom feature). For high-volume, data-intensive tasks, a best practice is to delegate to external services specializing in that type of work. Airflow is not a streaming solution, but it is often used to process real-time data, pulling data off streams in batches.

Principles

Dynamic: Airflow pipelines are configuration as code (Python), allowing for dynamic pipeline generation. This allows for writing code that instantiates pipelines dynamically.

Extensible: Easily define your own operators, executors and extend the library so that it fits the level of abstraction that suits your environment.

Elegant: Airflow pipelines are lean and explicit. Parameterizing your scripts is built into the core of Airflow using the powerful Jinja templating engine.

Scalable: Airflow has a modular architecture and uses a message queue to orchestrate an arbitrary number of workers.
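The idea of running tasks in dependency order over a DAG can be illustrated without Airflow itself. The sketch below uses only Python's standard `graphlib` module; it is not the Airflow API, and the task names and the `execution_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"extract"},
    "load": {"validate", "transform"},
    "report": {"load"},
}


def execution_order(deps):
    """Return one valid order in which every task runs after its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Because `validate` and `transform` do not depend on each other, more than one order is valid; a scheduler is free to run such tasks in parallel on separate workers.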

Caveats and Considerations

Fill in your own Postgres username, password, host, port, and so on so that Airflow works with your database. Pass them as environment variables, or create a Secret for the password and a ConfigMap for the host and port.

Technologies

Related Patterns

My first k8s app

MESHERY496d

Apache ShardingSphere Operator

MESHERY4803

RELATED PATTERNS

Delay Action for Chaos Mesh

MESHERY4dcc

APACHE SHARDINGSPHERE OPERATOR

Description

The ShardingSphere Kubernetes Operator automates provisioning, management, and operations of ShardingSphere Proxy clusters running on Kubernetes. Apache ShardingSphere is an ecosystem to transform any database into a distributed database system, and enhance it with sharding, elastic scaling, encryption features & more.

Caveats and Considerations

Ensure that Apache ShardingSphere and Knative Service are registered as MeshModels.

Technologies

Related Patterns

Delay Action for Chaos Mesh

MESHERY4dcc

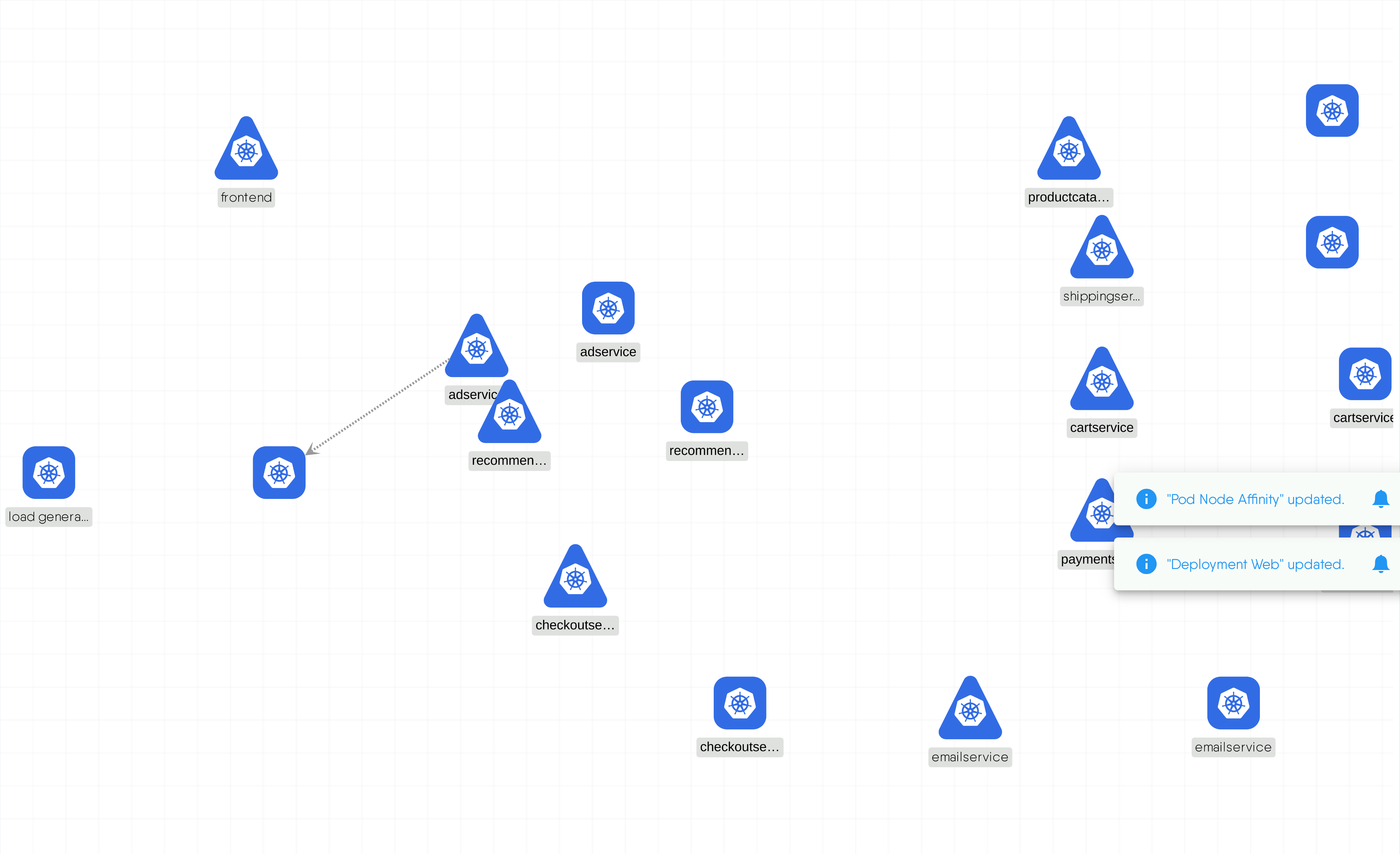

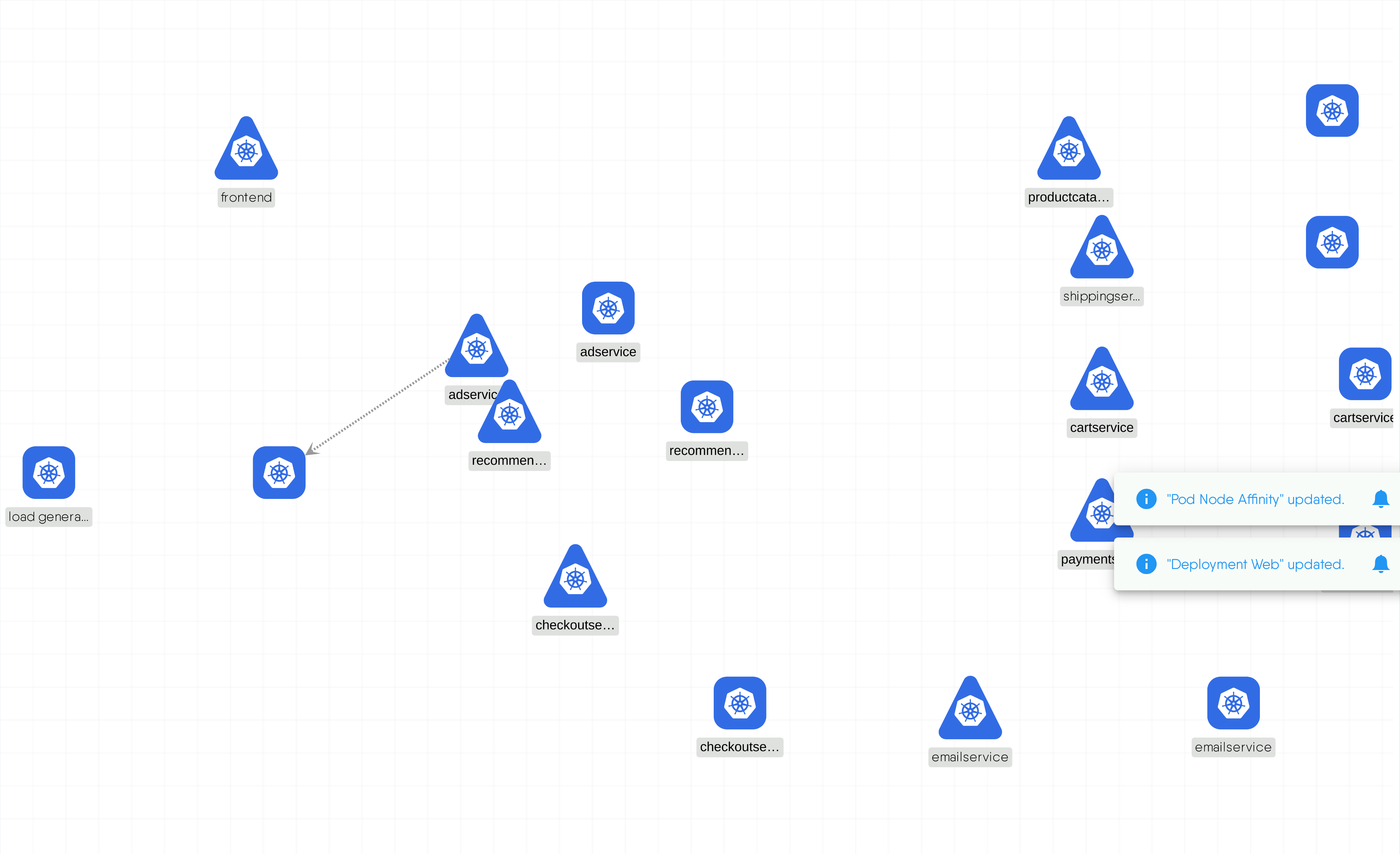

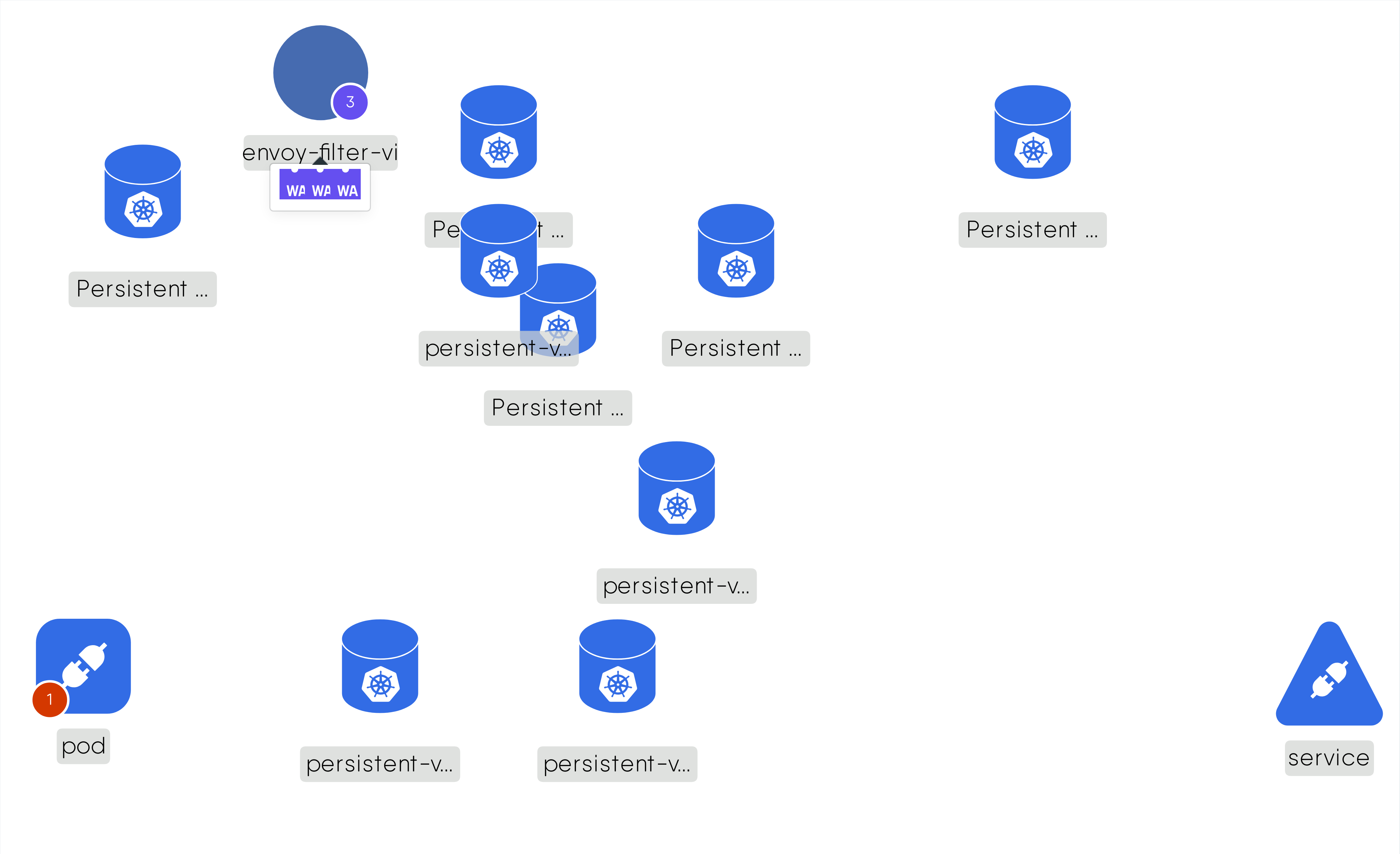

App-graph

MESHERY4f74

RELATED PATTERNS

Pod Readiness

MESHERY4b83

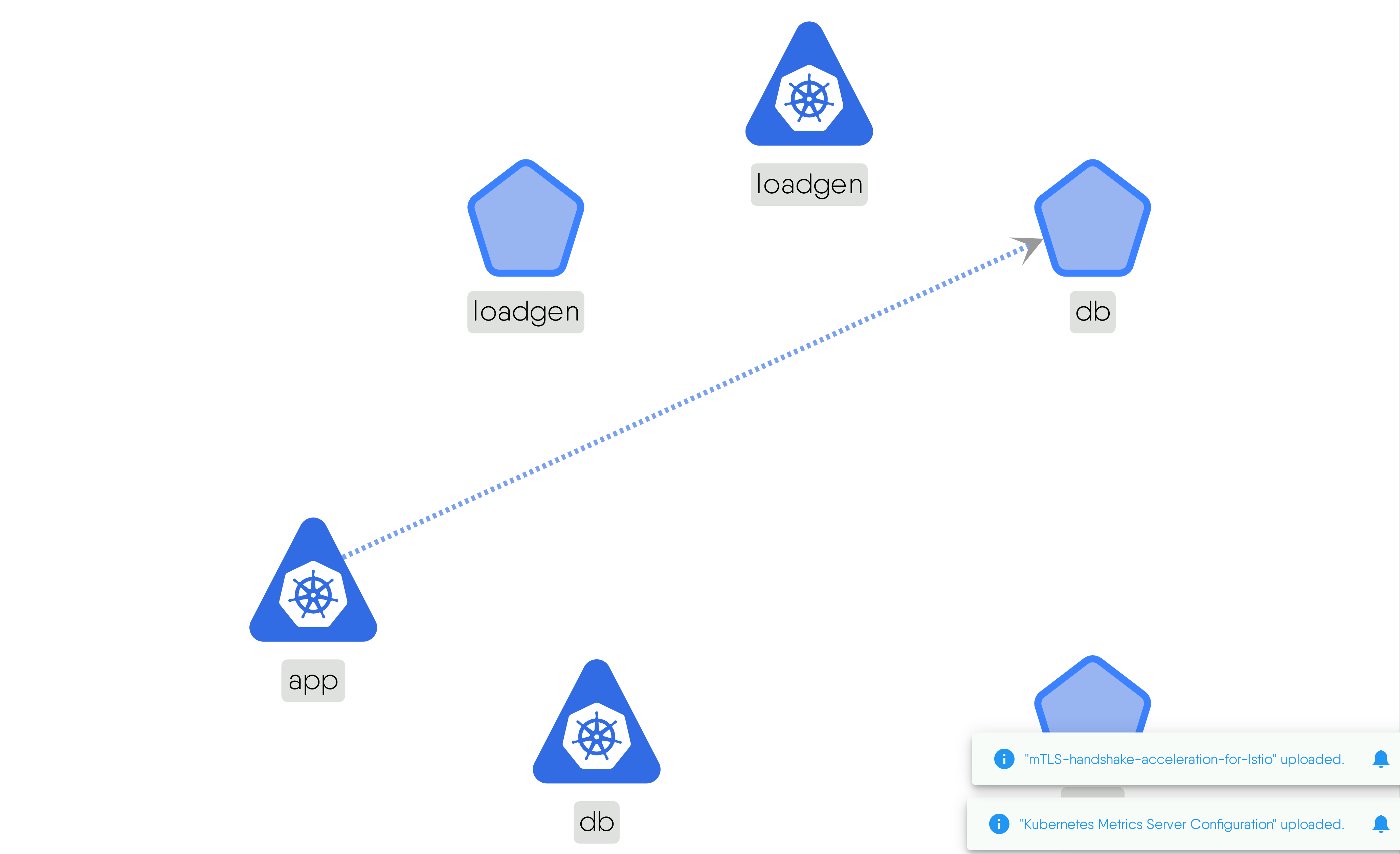

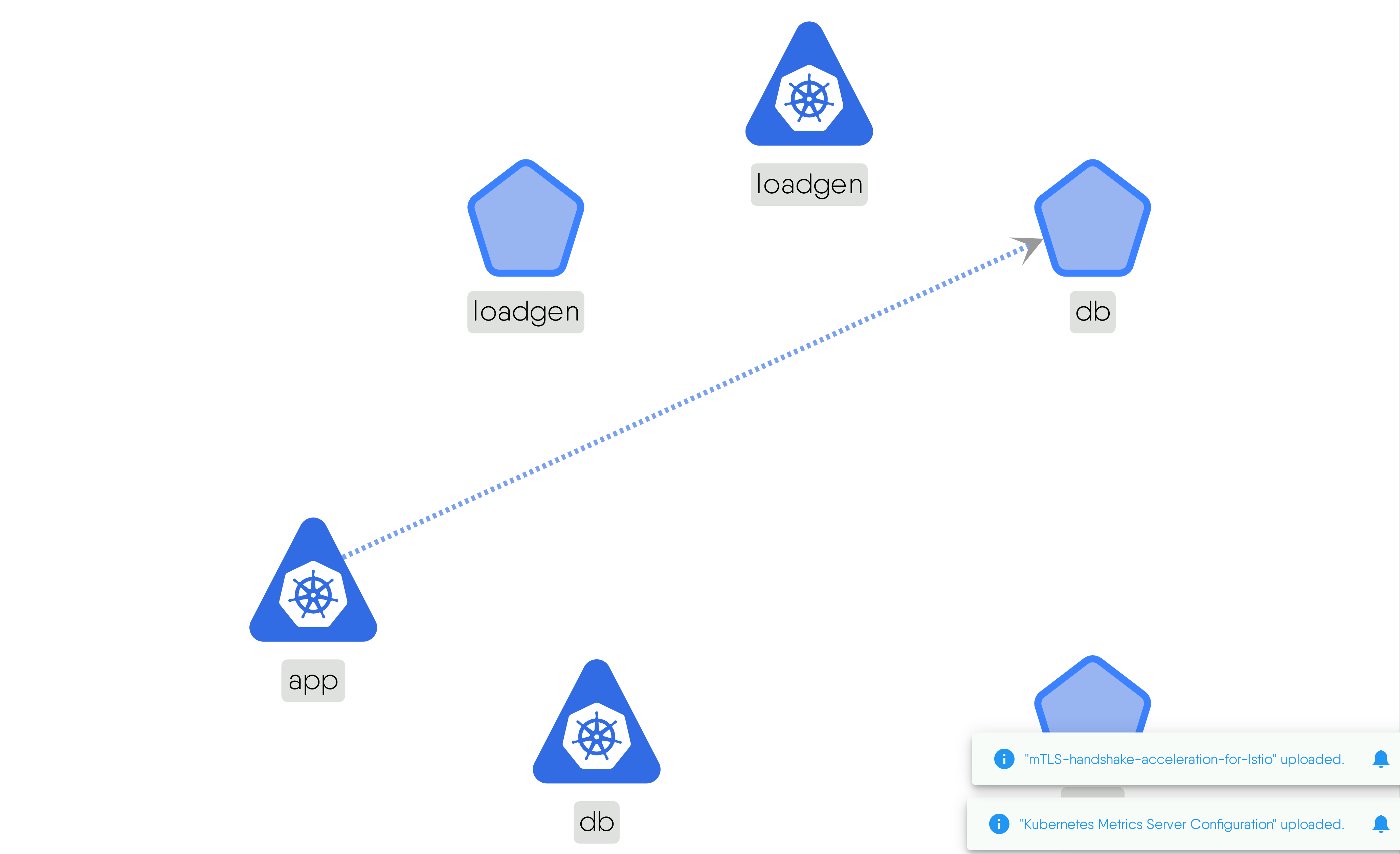

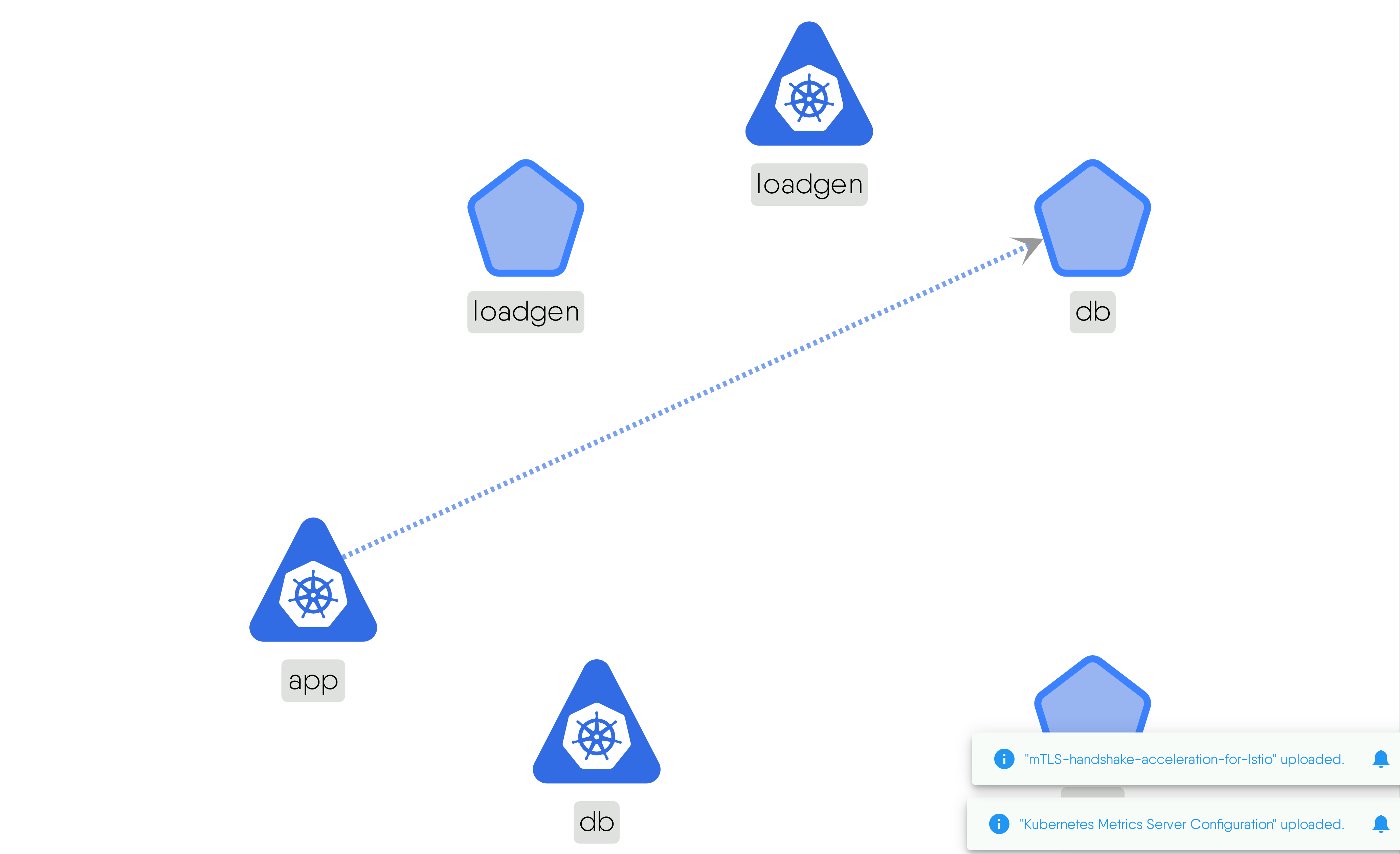

APP-GRAPH

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Argo CD w/Dex

MESHERY4c82

RELATED PATTERNS

Delay Action for Chaos Mesh

MESHERY4dcc

ARGO CD W/DEX

Description

The Argo CD server component exposes the API and UI. The operator creates a Service to expose this component, which can be accessed through the various methods available in Kubernetes.

Caveats and Considerations

Dex can be used to delegate authentication to external identity providers like GitHub, SAML and others. SSO configuration of Argo CD requires updating the Argo CD CR with Dex connector settings.

Technologies

Related Patterns

Delay Action for Chaos Mesh

MESHERY4dcc

ArgoCD application controller

MESHERY48a9

RELATED PATTERNS

Istio Operator

MESHERY4a76

ARGOCD APPLICATION CONTROLLER

Description

This YAML configuration describes a Kubernetes Deployment for the ArgoCD Application Controller. It includes metadata defining labels for identification purposes. The spec section outlines the deployment's details, including the desired number of replicas and a pod template. Within the pod template, there's a single container named argocd-application-controller, which runs the ArgoCD Application Controller binary. This container is configured with various environment variables sourced from ConfigMaps, defining parameters such as reconciliation timeouts, repository server details, logging settings, and affinity rules. Port 8082 is specified for readiness probes, and volumes are mounted for storing TLS certificates and temporary data. Additionally, the deployment specifies a service account and defines pod affinity rules for scheduling. These settings collectively ensure the reliable operation of the ArgoCD Application Controller within Kubernetes clusters, facilitating efficient management of applications within an ArgoCD instance.

Caveats and Considerations

1. Environment Configuration: Ensure that the environment variables configured for the application controller align with your deployment requirements. Review and adjust settings such as reconciliation timeouts, logging levels, and repository server details as needed.
2. Resource Requirements: Depending on your deployment environment and workload, adjust resource requests and limits for the container to ensure optimal performance and resource utilization.
3. Security: Pay close attention to security considerations, especially when handling sensitive data such as TLS certificates. Ensure that proper encryption and access controls are in place for any secrets used in the deployment.
4. High Availability: Consider strategies for achieving high availability and fault tolerance for the ArgoCD Application Controller. This may involve running multiple replicas of the controller across different nodes or availability zones.
5. Monitoring and Alerting: Implement robust monitoring and alerting mechanisms to detect and respond to any issues or failures within the ArgoCD Application Controller deployment. Utilize tools such as Prometheus and Grafana to monitor key metrics and set up alerts for critical events.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

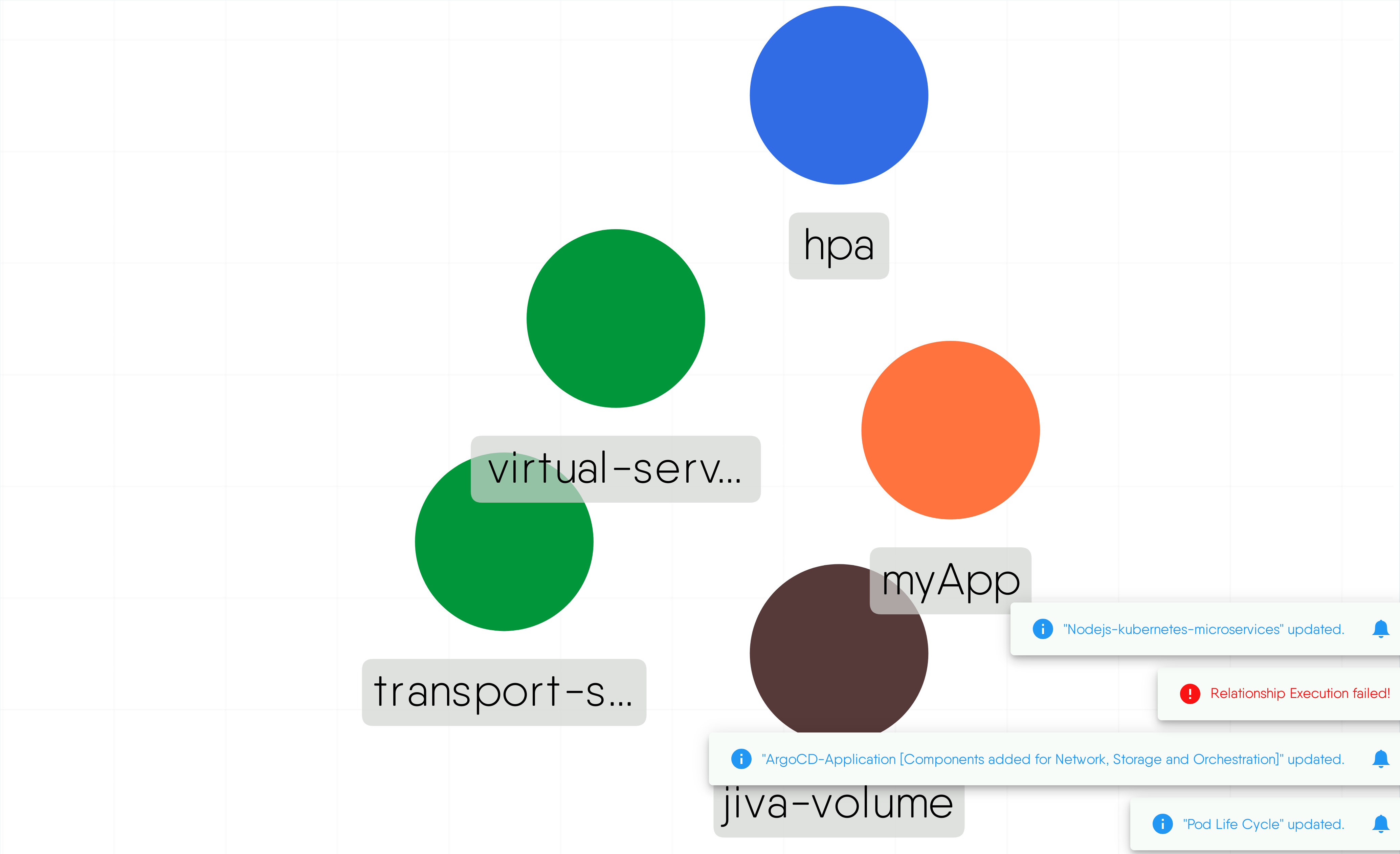

ArgoCD-Application [Components added for Network, Storage and Orchestration]

MESHERY41e0

RELATED PATTERNS

Istio Operator

MESHERY4a76

ARGOCD-APPLICATION [COMPONENTS ADDED FOR NETWORK, STORAGE AND ORCHESTRATION]

Description

This design deploys an ArgoCD application that includes an Nginx virtual service, an Nginx server, a K8s pod autoscaler, an OpenEBS Jiva volume, and a sample ArgoCD application listening on 127.0.0.4.

Caveats and Considerations

Ensure networking is set up properly.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Autogenerated

MESHERY4102

RELATED PATTERNS

Istio Operator

MESHERY4a76

AUTOGENERATED

Description

This YAML manifest defines a Kubernetes Deployment for the Thanos Operator, named "thanos-operator," with one replica. The deployment's pod template is labeled "app: thanos-operator" and includes security settings to run as a non-root user with specific user (1000) and group (2000) IDs. The main container, also named "thanos-operator," uses the "thanos-io/thanos:latest" image, runs with minimal privileges, and starts with the argument "--log.level=info." It listens on port 8080 for HTTP traffic and has liveness and readiness probes set to check the "/metrics" endpoint. Resource requests and limits are defined for CPU and memory. Additionally, the pod is scheduled on Linux nodes with specific node affinity rules and tolerations for certain node taints, ensuring appropriate node placement and scheduling.

Caveats and Considerations

1. Security Context:
   1.1 The runAsUser: 1000 and fsGroup: 2000 settings are essential for running the container with non-root privileges. Ensure that these user IDs are correctly configured and have the necessary permissions within your environment.
   1.2 Dropping all capabilities (drop: - ALL) enhances security but may limit certain functionalities. Verify that the Thanos container does not require any additional capabilities.
2. Image Tag: The image tag is set to "latest," which can introduce instability since it pulls the most recent image version that might not be thoroughly tested. Consider specifying a specific, stable version tag for better control over updates and rollbacks.
3. Resource Requests and Limits: The defined resource requests and limits (memory: "64Mi"/"128Mi", cpu: "250m"/"500m") might need adjustment based on the actual workload and performance characteristics of the Thanos Operator in your environment. Monitor resource usage and tweak these settings accordingly to prevent resource starvation or over-provisioning.
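The image tag caveat lends itself to a simple automated check. The sketch below is one possible way to flag image references that are not pinned; the function name `uses_floating_tag` is hypothetical, and the version tag in the usage lines is a made-up example, not a recommended release.

```python
def uses_floating_tag(image):
    """Return True when an image reference is untagged or tagged 'latest'.

    References pinned by digest (containing '@') are treated as fixed.
    """
    if "@" in image:
        return False
    # Look only at the final path segment so that a registry port
    # (for example 'localhost:5000/...') is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        return True  # no tag means the runtime defaults to 'latest'
    return last_segment.rsplit(":", 1)[1] == "latest"


print(uses_floating_tag("thanos-io/thanos:latest"))
print(uses_floating_tag("thanos-io/thanos:v0.0.0-example"))  # hypothetical tag
```

A check like this could run in review or CI so that a design using "latest" is caught before it reaches a cluster.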

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Autoscaling based on Metrics in GKE

MESHERY400b

RELATED PATTERNS

HorizontalPodAutoscaler

MESHERY41d1

AUTOSCALING BASED ON METRICS IN GKE

Description

This design demonstrates how to automatically scale your Google Kubernetes Engine (GKE) workloads based on Prometheus-style metrics emitted by your application. It uses the [GKE workload metrics](https://cloud.google.com/stackdriver/docs/solutions/gke/managing-metrics#workload-metrics) pipeline to collect the metrics emitted from the example application and send them to [Cloud Monitoring](https://cloud.google.com/monitoring), and then uses the [HorizontalPodAutoscaler](https://cloud.google.com/kubernetes-engine/docs/concepts/horizontalpodautoscaler) along with the [Custom Metrics Adapter](https://github.com/GoogleCloudPlatform/k8s-stackdriver/tree/master/custom-metrics-stackdriver-adapter) to scale the application.
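The scaling decision made by the HorizontalPodAutoscaler can be sketched as follows. This is a simplified illustration of the replica calculation described in the Kubernetes documentation (desired replicas equal the ceiling of current replicas multiplied by the ratio of the current metric to the target metric, with a default tolerance of 0.1); the function name, the replica bounds, and the sample numbers are hypothetical, and the real controller applies further rules such as stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA replica calculation for a single metric."""
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the workload alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical reading: 2 pods, metric at 200 against a target of 100.
print(desired_replicas(2, 200, 100))
```

In this design the metric values would come from Cloud Monitoring through the Custom Metrics Adapter rather than being passed in by hand.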

Caveats and Considerations

Add your own custom Prometheus to GKE for better scaling of workloads.

Technologies

Related Patterns

HorizontalPodAutoscaler

MESHERY41d1

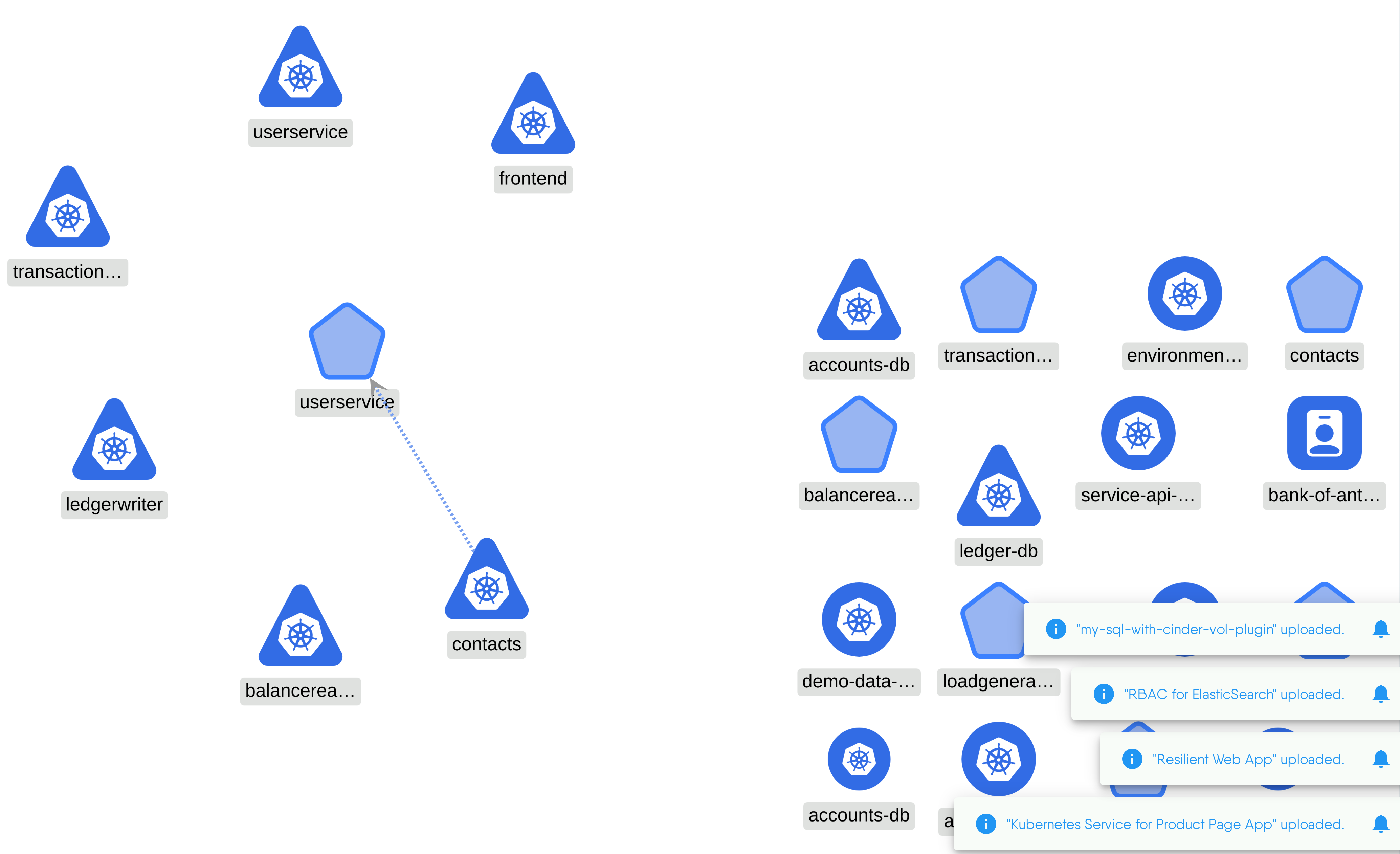

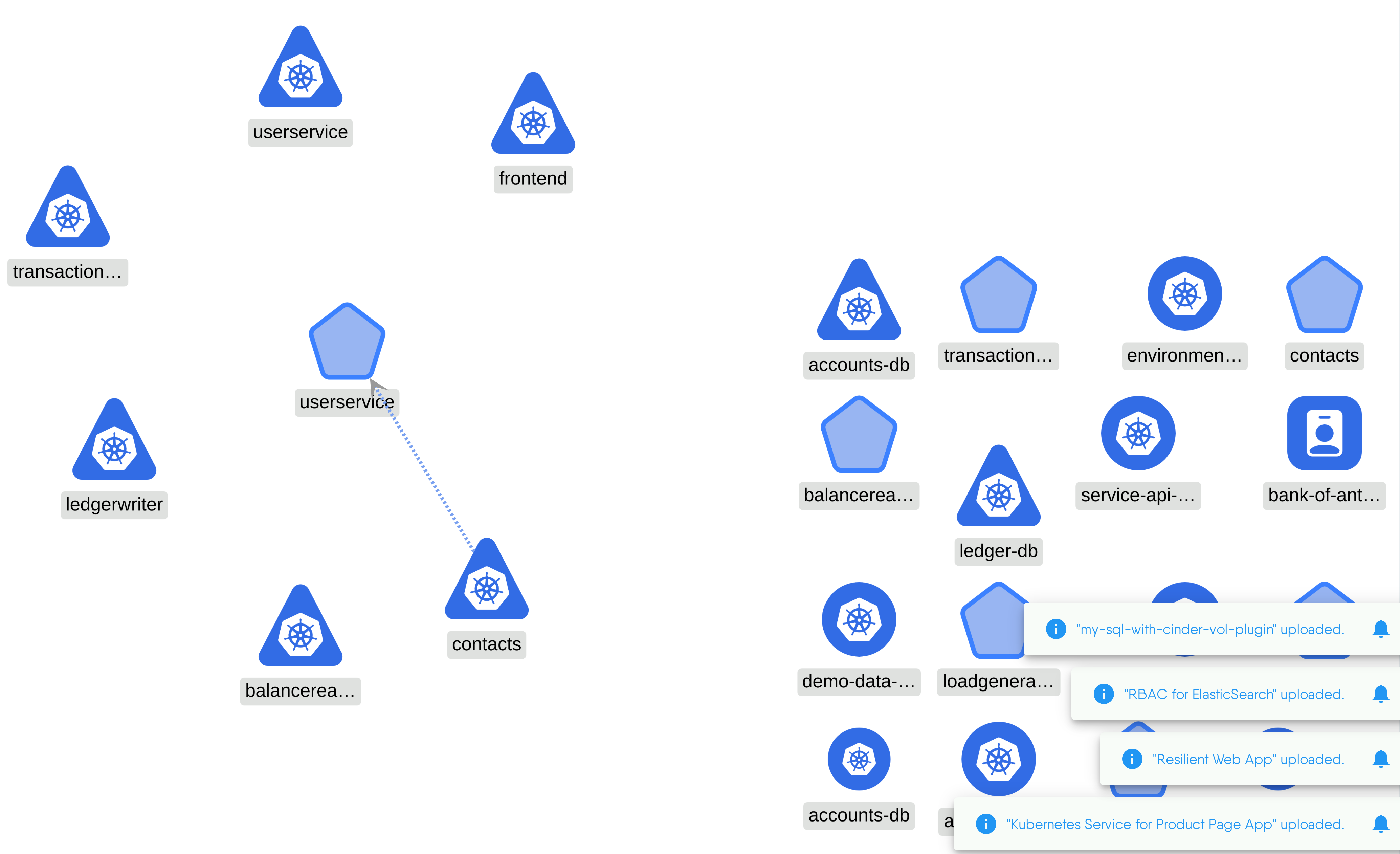

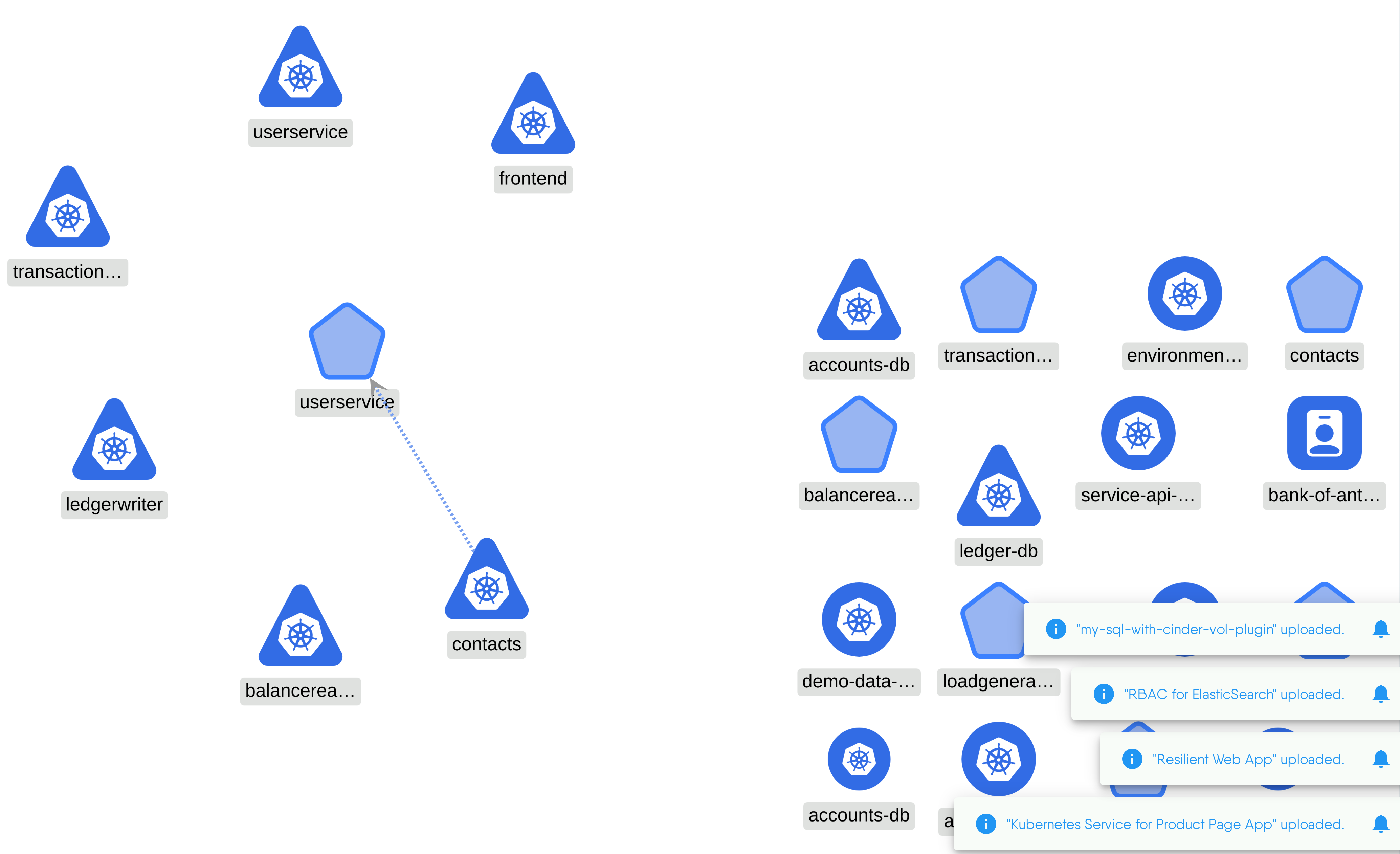

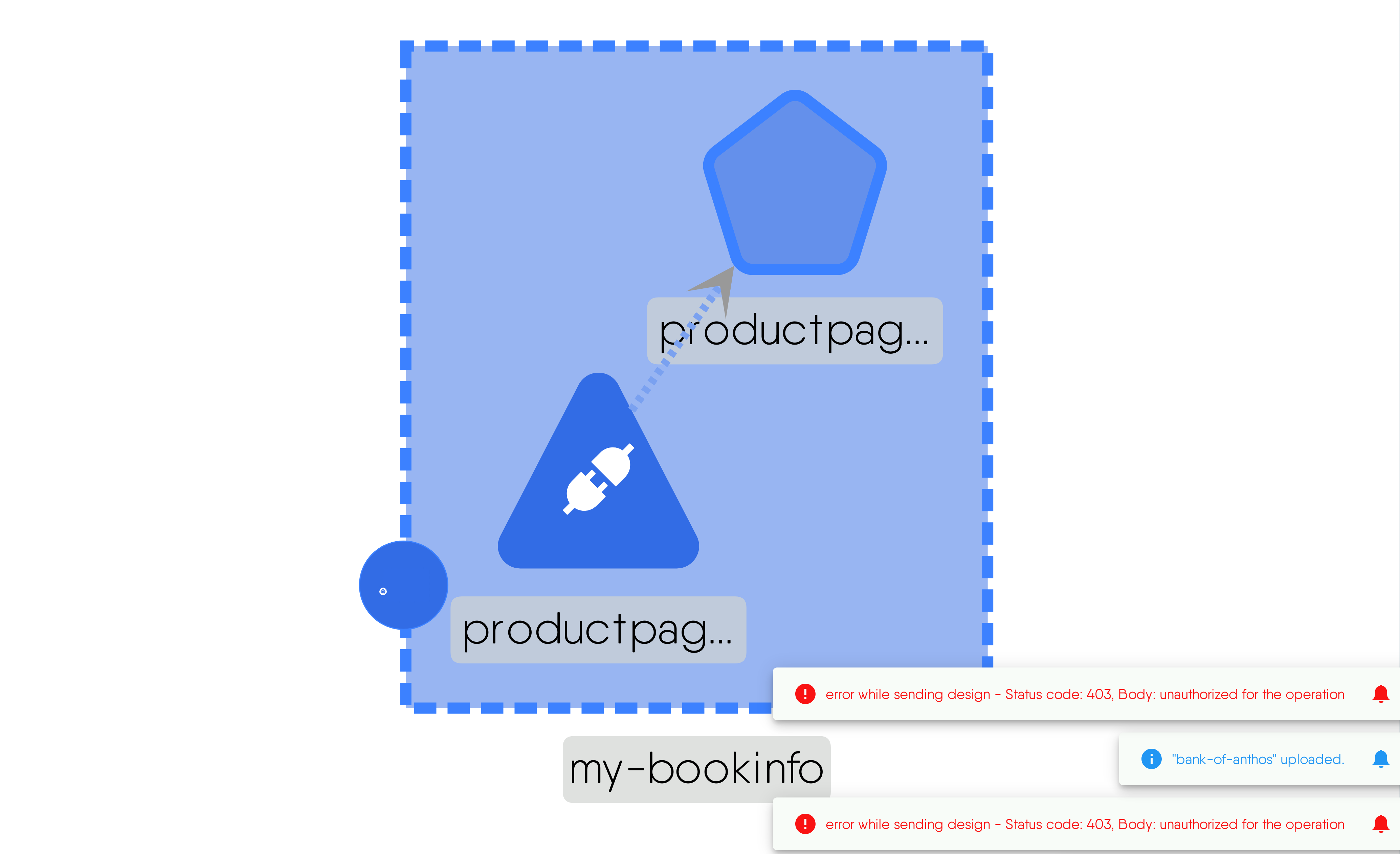

Bank of Anthos

MESHERY48be

RELATED PATTERNS

Pod Readiness

MESHERY4b83

BANK OF ANTHOS

Description

Bank of Anthos is a sample HTTP-based web app that simulates a bank's payment processing network, allowing users to create artificial bank accounts and complete transactions.

Caveats and Considerations

Ensure enough resources are available on the cluster.

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

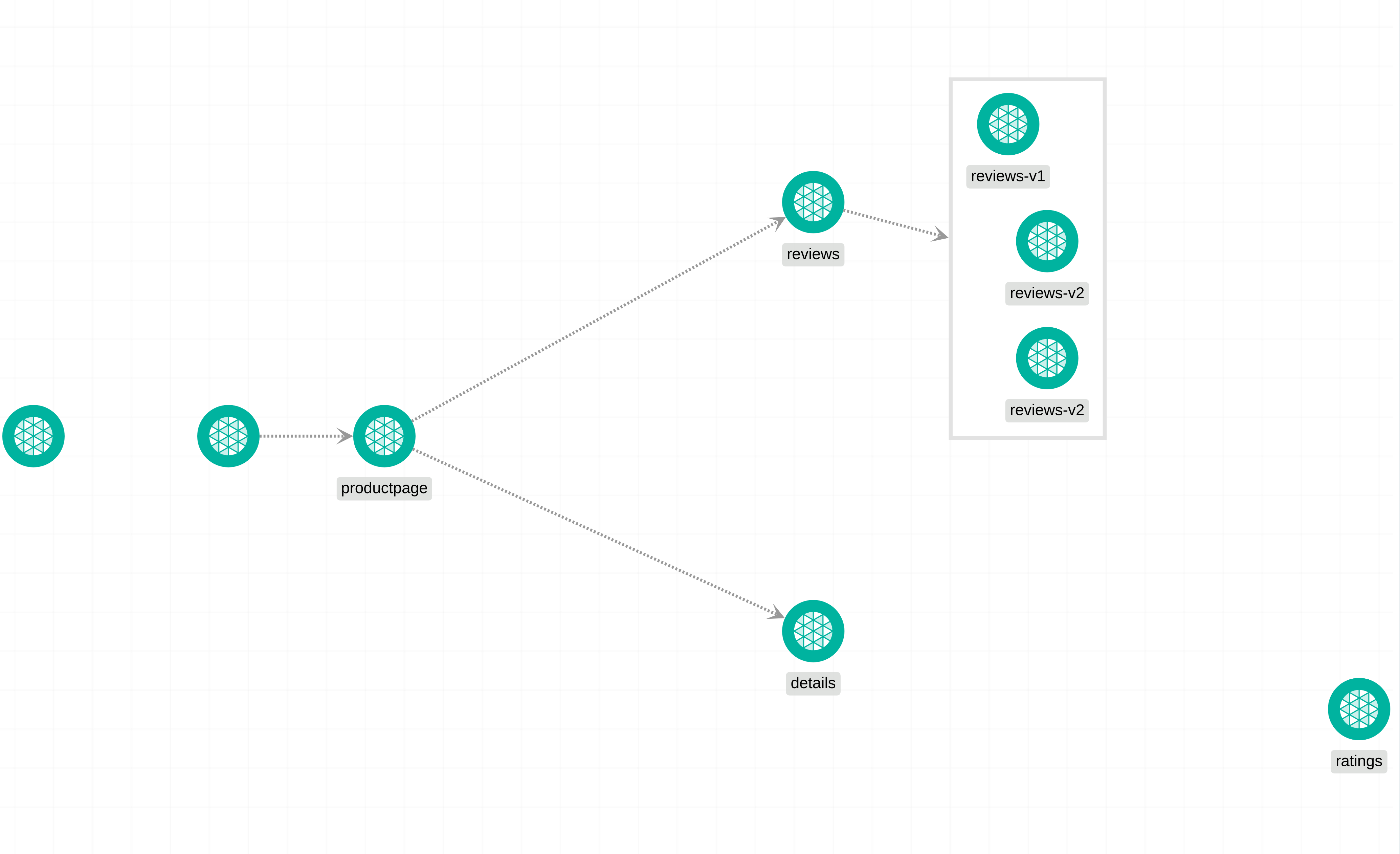

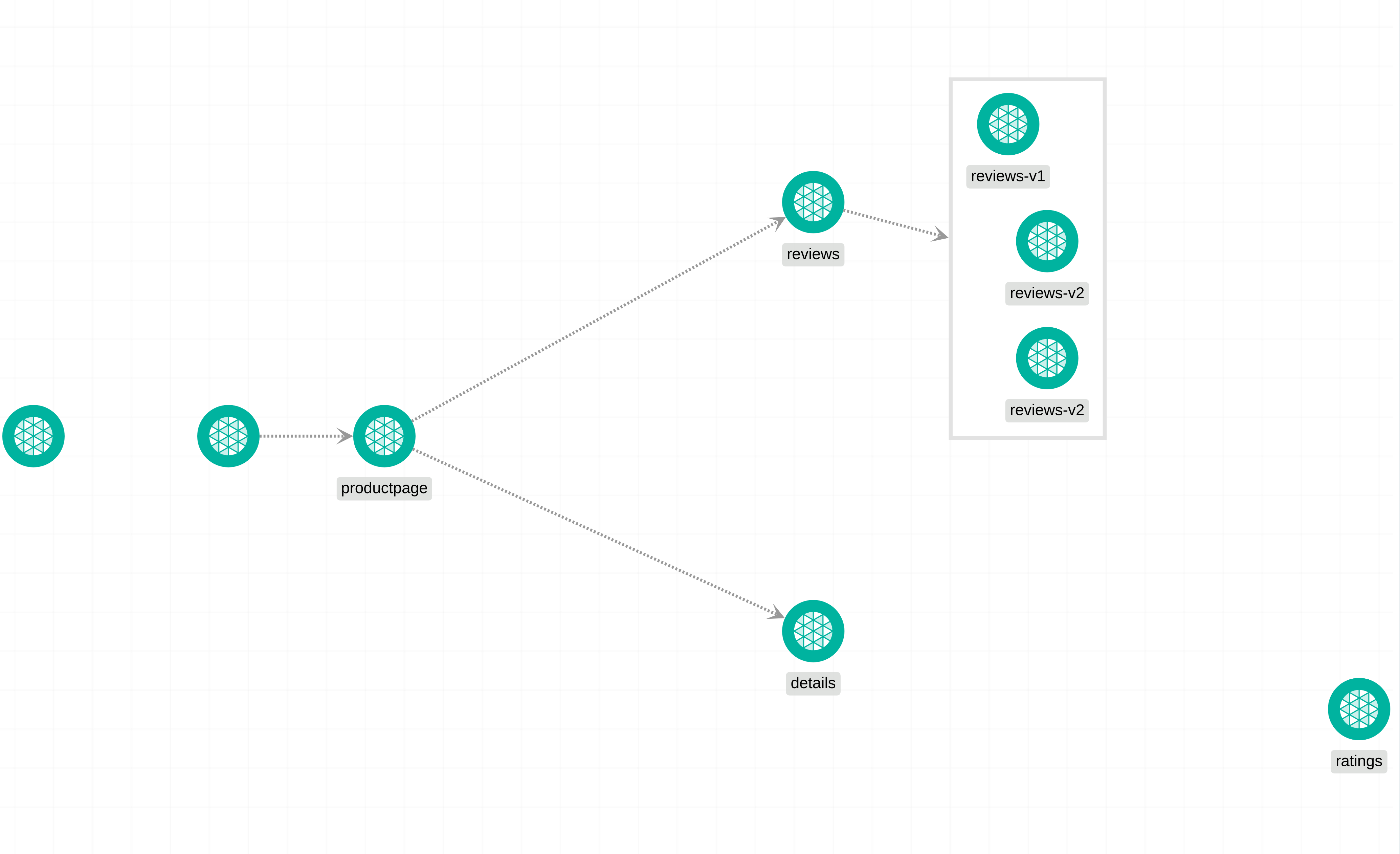

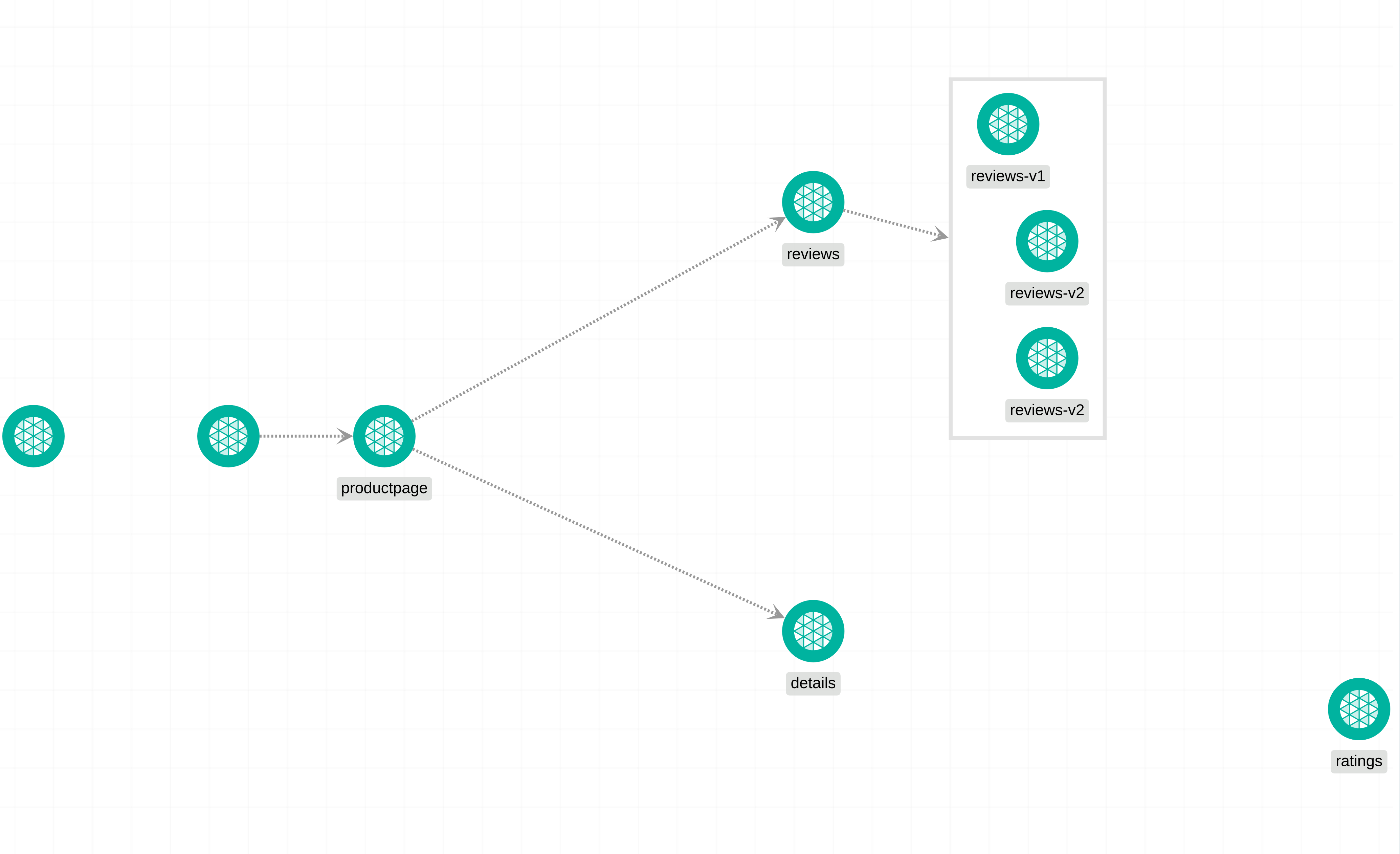

BookInfo App w/o Kubernetes

MESHERY47b4

RELATED PATTERNS

Istio Operator

MESHERY4a76

BOOKINFO APP W/O KUBERNETES

Description

The Bookinfo application is a collection of microservices that work together to display information about a book. The main microservice is called productpage, which fetches data from the details and reviews microservices to populate the book's page. The details microservice contains specific information about the book, such as its ISBN and number of pages. The reviews microservice contains reviews of the book and also makes use of the ratings microservice to retrieve ranking information for each review. The reviews microservice has three different versions: v1, v2, and v3. In v1, the microservice does not interact with the ratings service. In v2, it calls the ratings service and displays the rating using black stars, ranging from 1 to 5. In v3, it also calls the ratings service but displays the rating using red stars, again ranging from 1 to 5. These different versions allow for flexibility and experimentation with different ways of presenting the book's ratings to users.
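The difference between the three versions of the reviews microservice can be summarized in a few lines. This is only an illustrative sketch of the behavior described above, not the Bookinfo source code; `get_rating`, `render_review`, and the sample data are hypothetical.

```python
# Star color used by each version that calls the ratings service.
STAR_COLOR = {"v2": "black", "v3": "red"}


def get_rating(review_id):
    """Stand-in for a call to the ratings microservice (made-up data)."""
    return {"r1": 5, "r2": 4}[review_id]


def render_review(version, review_id, text):
    """Return what a given reviews version would show for one review."""
    if version == "v1":
        # v1 never contacts the ratings service, so no stars are shown.
        return {"text": text}
    if version not in STAR_COLOR:
        raise ValueError(f"unknown reviews version: {version}")
    return {
        "text": text,
        "stars": get_rating(review_id),
        "color": STAR_COLOR[version],
    }


for version in ("v1", "v2", "v3"):
    print(version, render_review(version, "r1", "A great read."))
```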

Caveats and Considerations

Users need to ensure that their cluster is properly configured with Istio, including the installation of the necessary components and enabling sidecar injection for the microservices. Ensure that Meshery Adapter for Istio service mesh is installed properly for easy installation/registration of Istio's MeshModels with Meshery Server. Another consideration is the resource requirements of the application. The Bookinfo application consists of multiple microservices, each running as a separate container. Users should carefully assess the resource needs of the application and ensure that their cluster has sufficient capacity to handle the workload. This includes considering factors such as CPU, memory, and network bandwidth requirements.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Browserless Chrome

MESHERY4c4b

RELATED PATTERNS

Dapr OAuth Authorization to External Service

MESHERY4ce9

BROWSERLESS CHROME

Description

Chrome as a service container. Bring your own hardware or cloud.

Homepage: https://www.browserless.io

## Configuration

Browserless can be configured via environment variables:

```yaml
env:
  PREBOOT_CHROME: "true"
```

Caveats and Considerations

Check out the [official documentation](https://docs.browserless.io/docs/docker.html) for the available options.

## Values

| Key | Type | Default | Description |
|-----|------|---------|-------------|
| replicaCount | int | `1` | Number of replicas (pods) to launch. |
| image.repository | string | `"browserless/chrome"` | Name of the image repository to pull the container image from. |
| image.pullPolicy | string | `"IfNotPresent"` | [Image pull policy](https://kubernetes.io/docs/concepts/containers/images/#updating-images) for updating already existing images on a node. |
| image.tag | string | `""` | Image tag override for the default value (chart appVersion). |
| imagePullSecrets | list | `[]` | Reference to one or more secrets to be used when [pulling images](https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/#create-a-pod-that-uses-your-secret) (from private registries). |
| nameOverride | string | `""` | A name in place of the chart name for `app:` labels. |
| fullnameOverride | string | `""` | A name to substitute for the full names of resources. |
| volumes | list | `[]` | Additional storage [volumes](https://kubernetes.io/docs/concepts/storage/volumes/). See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#volumes-1) for details. |
| volumeMounts | list | `[]` | Additional [volume mounts](https://kubernetes.io/docs/tasks/configure-pod-container/configure-volume-storage/). See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#volumes-1) for details. |
| envFrom | list | `[]` | Additional environment variables mounted from [secrets](https://kubernetes.io/docs/concepts/configuration/secret/#using-secrets-as-environment-variables) or [config maps](https://kubernetes.io/docs/tasks/configure-pod-container/configure-pod-configmap/#configure-all-key-value-pairs-in-a-configmap-as-container-environment-variables). See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#environment-variables) for details. |
| env | object | `{}` | Additional environment variables passed directly to containers. See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#environment-variables) for details. |
| serviceAccount.create | bool | `true` | Enable service account creation. |
| serviceAccount.annotations | object | `{}` | Annotations to be added to the service account. |
| serviceAccount.name | string | `""` | The name of the service account to use. If not set and create is true, a name is generated using the fullname template. |
| podAnnotations | object | `{}` | Annotations to be added to pods. |
| podSecurityContext | object | `{}` | Pod [security context](https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-pod). See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#security-context) for details. |
| securityContext | object | `{}` | Container [security context](https://kubernetes.io/docs/tasks/configure-pod-container/security-context/#set-the-security-context-for-a-container). See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#security-context-1) for details. |
| service.annotations | object | `{}` | Annotations to be added to the service. |
| service.type | string | `"ClusterIP"` | Kubernetes [service type](https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types). |
| service.loadBalancerIP | string | `nil` | Only applies when the service type is LoadBalancer. Load balancer will get created with the IP specified in this field. |
| service.loadBalancerSourceRanges | list | `[]` | If specified (and supported by the cloud provider), traffic through the load balancer will be restricted to the specified client IPs. Valid values are IP CIDR blocks. |
| service.port | int | `80` | Service port. |
| service.nodePort | int | `nil` | Service node port (when applicable). |
| service.externalTrafficPolicy | string | `nil` | Route external traffic to node-local or cluster-wide endpoints. Useful for [preserving the client source IP](https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip). |
| resources | object | No requests or limits. | Container resource [requests and limits](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/). See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#resources) for details. |
| autoscaling | object | Disabled by default. | Autoscaling configuration (see [values.yaml](values.yaml) for details). |
| nodeSelector | object | `{}` | [Node selector](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#nodeselector) configuration. |
| tolerations | list | `[]` | [Tolerations](https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/) for node taints. See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#scheduling) for details. |
| affinity | object | `{}` | [Affinity](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#affinity-and-anti-affinity) configuration. See the [API reference](https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#scheduling) for details. |

Technologies

Related Patterns

Dapr OAuth Authorization to External Service

MESHERY4ce9

Busybox (single)

MESHERY4c98

RELATED PATTERNS

Istio Operator

MESHERY4a76

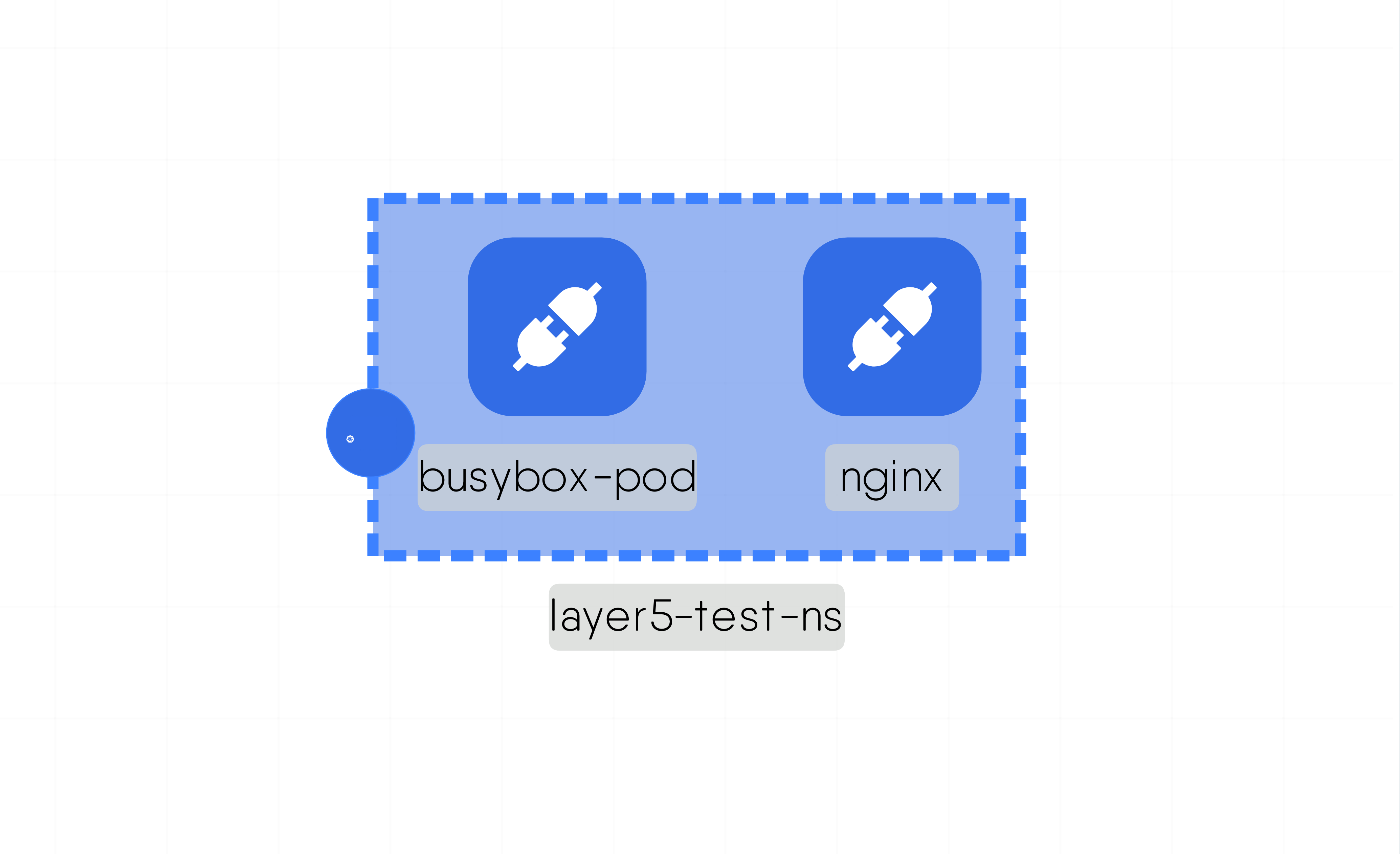

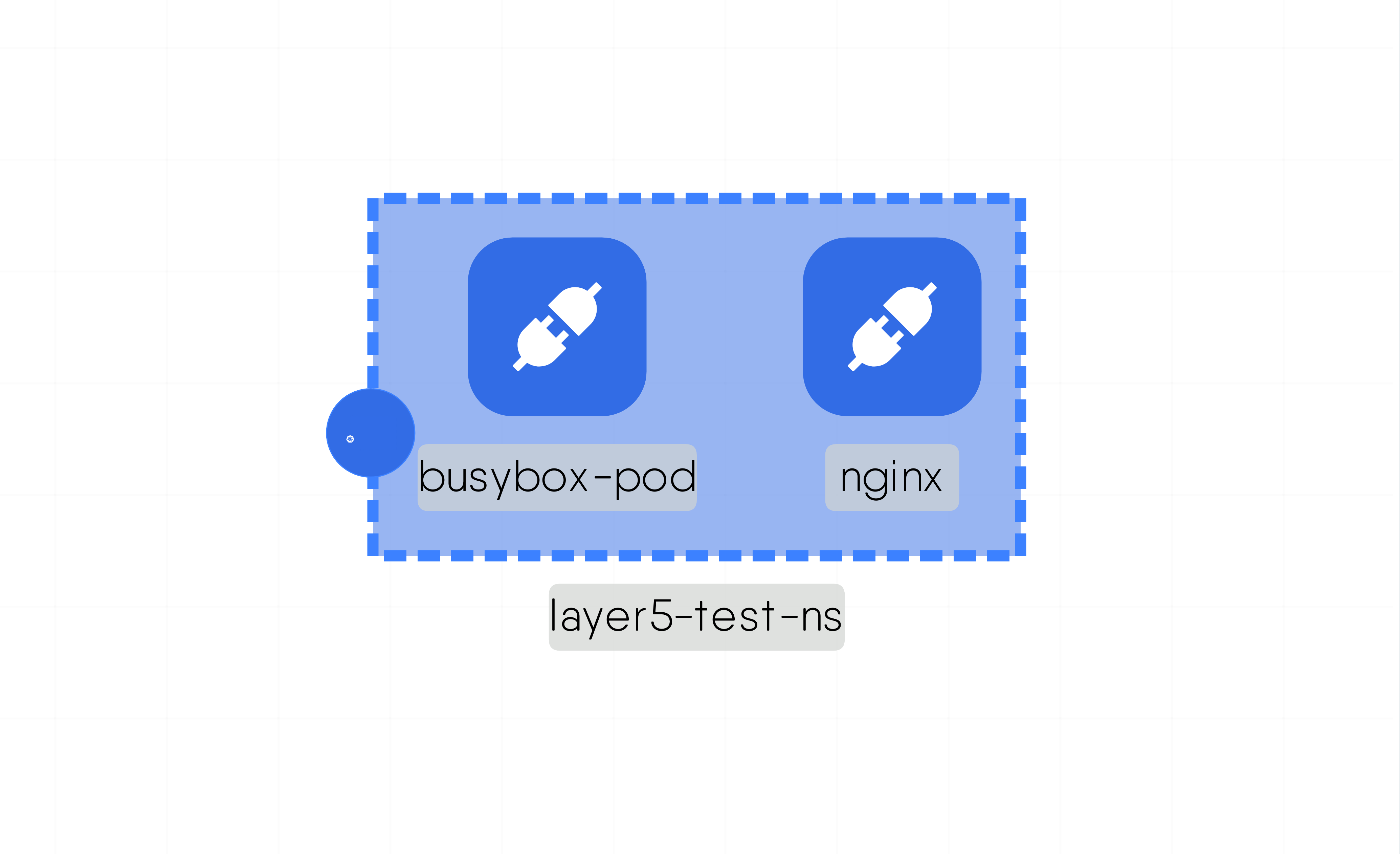

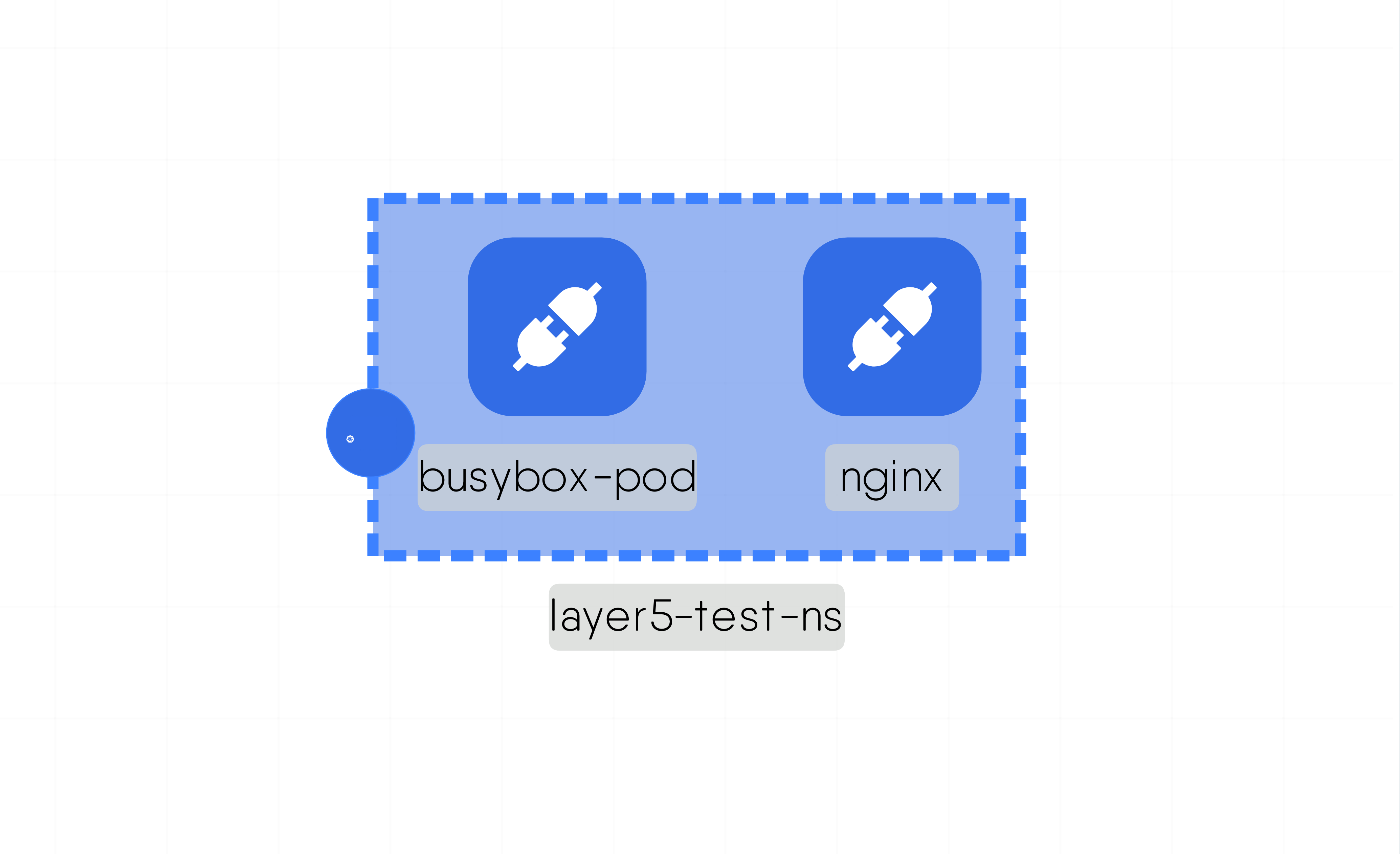

BUSYBOX (SINGLE)

Description

This design deploys a simple BusyBox app inside the Layer5-test namespace.

Caveats and Considerations

None

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Busybox (single) (fresh)

MESHERY4db7

RELATED PATTERNS

Istio Operator

MESHERY4a76

BUSYBOX (SINGLE) (FRESH)

Description

This design deploys a simple BusyBox app inside the Layer5-test namespace.

Caveats and Considerations

None

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Catalog Design2

MESHERY41bb

RELATED PATTERNS

Pod Readiness

MESHERY4b83

CATALOG DESIGN2

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Consul on kubernetes

MESHERY429d

RELATED PATTERNS

Apache Airflow

MESHERY41d4

CONSUL ON KUBERNETES

Description

Consul is a tool for discovering, configuring, and managing services in distributed systems. It provides features like service discovery, health checking, key-value storage, and distributed coordination. In Kubernetes, Consul can be useful in several ways:

1. Service Discovery: Kubernetes already has built-in service discovery through DNS and environment variables. However, Consul provides more advanced features such as service registration, DNS-based service discovery, and health checking. This can be particularly useful if you have services deployed both within and outside of Kubernetes, as Consul can provide a unified service discovery mechanism across your entire infrastructure.
2. Configuration Management: Consul includes a key-value store that can be used to store configuration data. This can be used to configure applications dynamically at runtime, allowing for more flexible and dynamic deployments.
3. Health Checking: Consul can perform health checks on services to ensure they are functioning correctly. If a service fails its health check, Consul can automatically remove it from the pool of available instances, preventing traffic from being routed to it until it recovers.
4. Service Mesh: Consul can also be used as a service mesh in Kubernetes, providing features like traffic splitting, encryption, and observability. This can help you to manage communication between services within your Kubernetes cluster more effectively.

Overall, Consul can complement Kubernetes by providing additional features and capabilities for managing services in distributed systems. It can help to simplify and streamline the management of complex microservices architectures, providing greater visibility, resilience, and flexibility.
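The health-checking behavior described above, where a failing instance leaves the pool and returns once it recovers, can be modeled in a few lines. This is a conceptual sketch only; it does not use the Consul API, and the `ServicePool` class, its methods, and the addresses are hypothetical.

```python
class ServicePool:
    """Toy registry that routes only to instances passing their health check."""

    def __init__(self):
        self._checks = {}      # address -> callable returning True when healthy
        self._healthy = set()  # addresses currently eligible for traffic

    def register(self, address, check):
        self._checks[address] = check
        self._healthy.add(address)

    def run_checks(self):
        """Re-evaluate every instance, removing failures and restoring recoveries."""
        for address, check in self._checks.items():
            if check():
                self._healthy.add(address)
            else:
                self._healthy.discard(address)

    def available(self):
        return sorted(self._healthy)


# Hypothetical instances whose health is toggled through a shared dictionary.
status = {"10.0.0.1:8080": True, "10.0.0.2:8080": True}
pool = ServicePool()
for addr in status:
    pool.register(addr, lambda a=addr: status[a])

status["10.0.0.2:8080"] = False  # simulate a failing instance
pool.run_checks()
print(pool.available())

status["10.0.0.2:8080"] = True   # the instance recovers
pool.run_checks()
print(pool.available())
```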

Caveats and Considerations

Customize the design according to your requirements. The image is pulled from Docker Hub.

Technologies

Related Patterns

Apache Airflow

MESHERY41d4

CryptoMB

MESHERY441b

RELATED PATTERNS

Pod Readiness

MESHERY4b83

CRYPTOMB

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

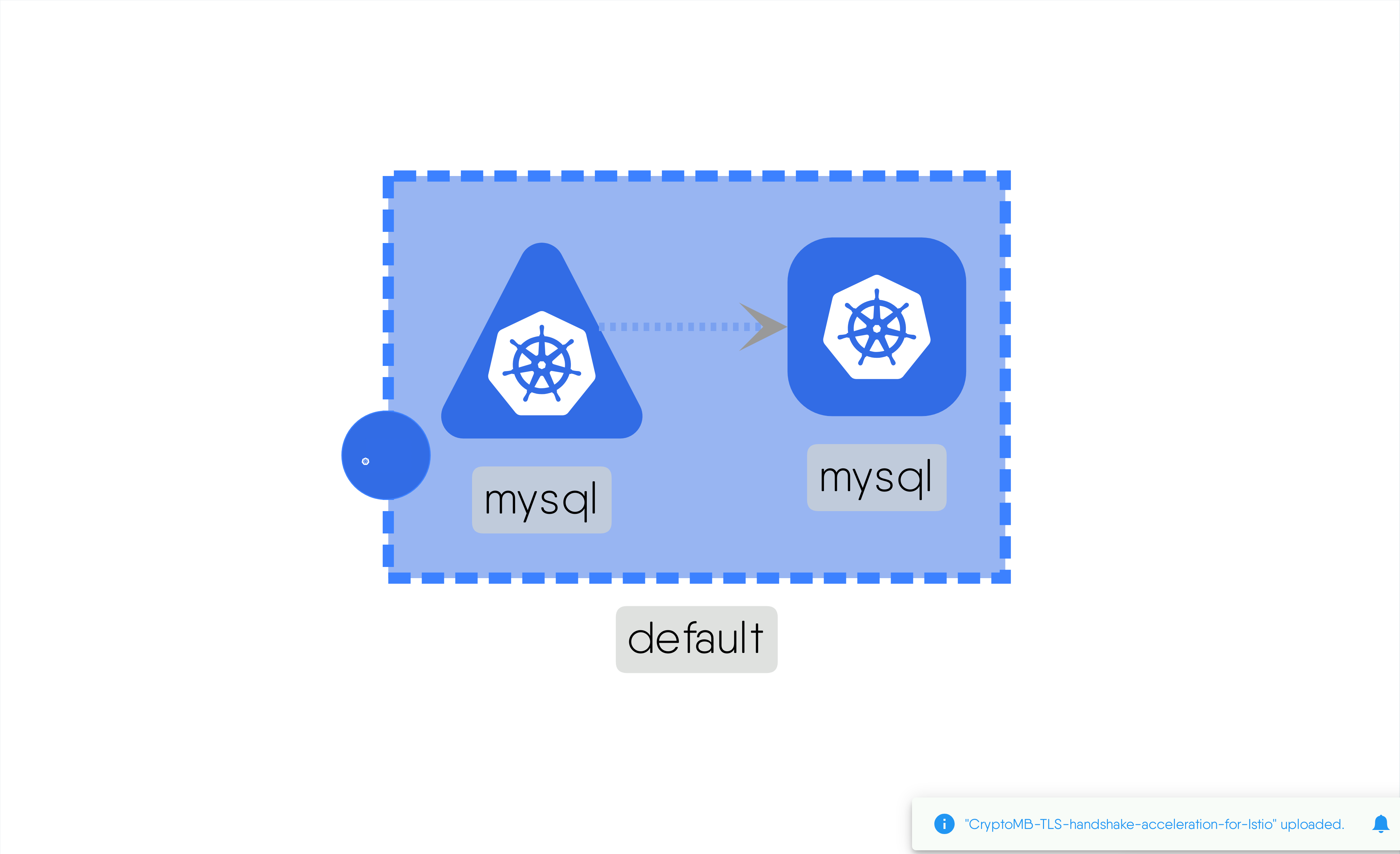

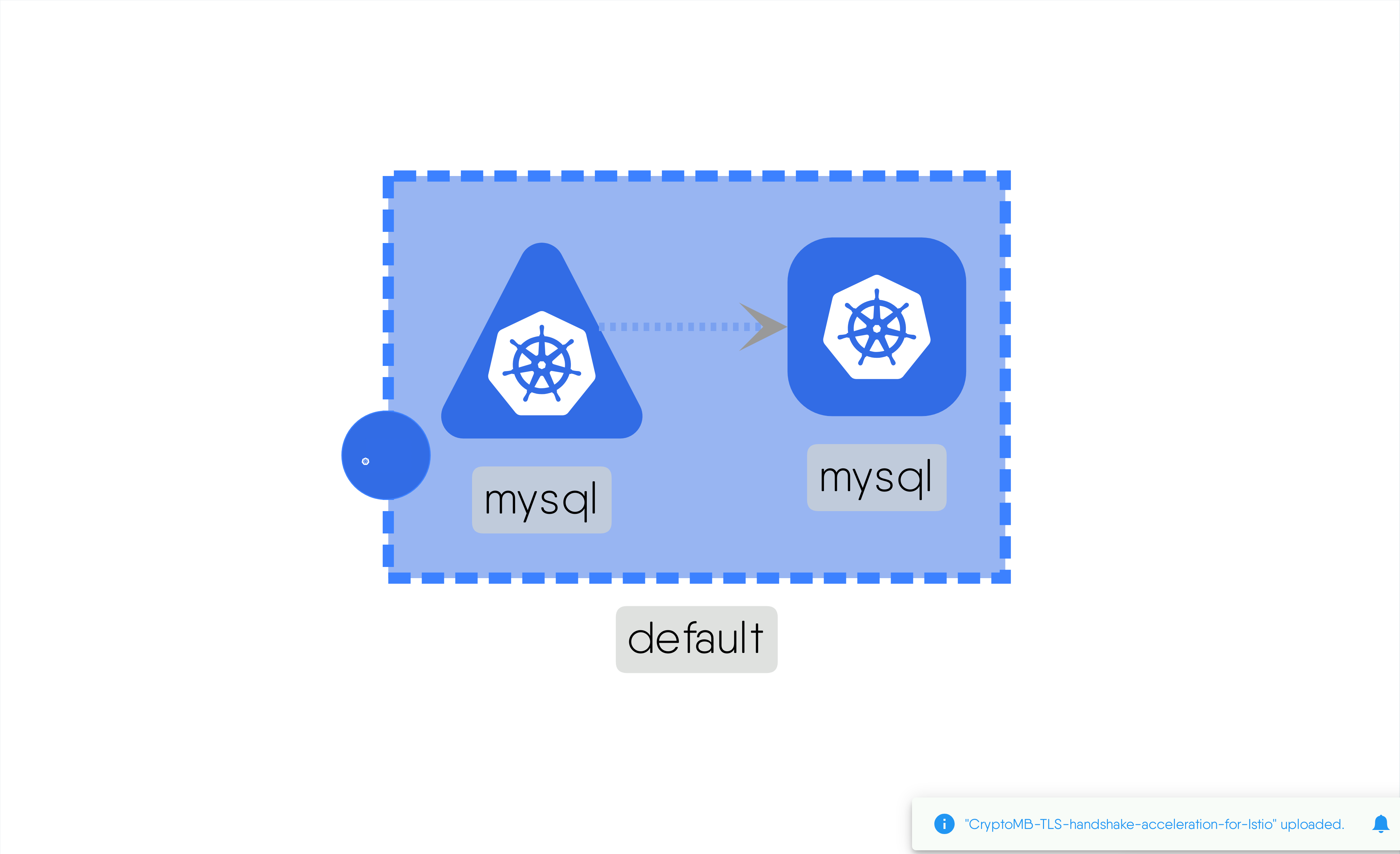

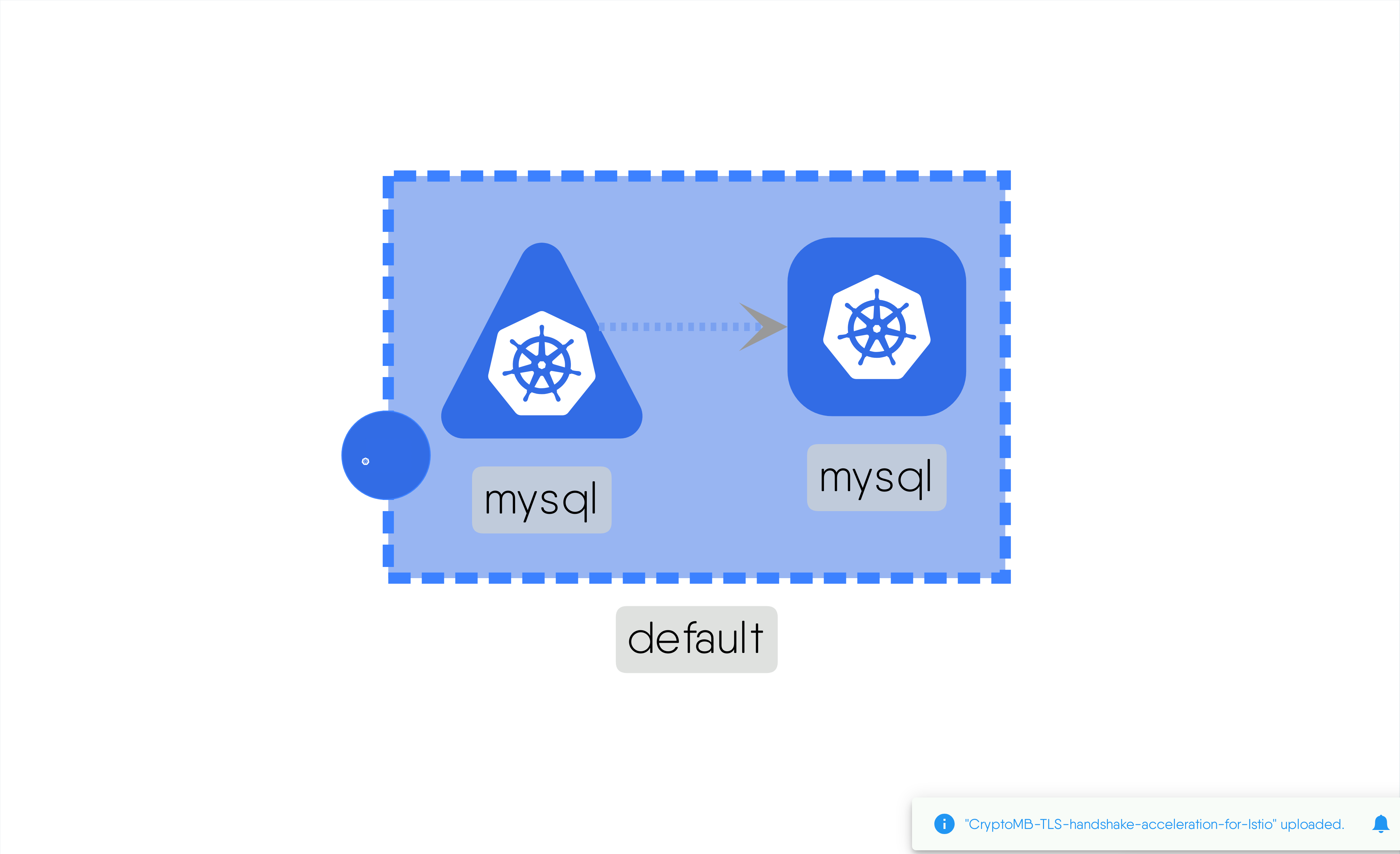

CryptoMB-TLS-handshake-acceleration-for-Istio

MESHERY4f96

RELATED PATTERNS

Istio Operator

MESHERY4a76

CRYPTOMB-TLS-HANDSHAKE-ACCELERATION-FOR-ISTIO

Description

Depending upon use cases, when an ingress gateway must handle a large number of incoming TLS and secured service-to-service connections through sidecar proxies, the load on Envoy increases. The potential performance depends on many factors, such as size of the cpuset on which Envoy is running, incoming traffic patterns, and key size. These factors can impact Envoy serving many new incoming TLS requests. To achieve performance improvements and accelerated handshakes, a new feature was introduced in Envoy 1.20 and Istio 1.14. It can be achieved with 3rd Gen Intel® Xeon® Scalable processors, the Intel® Integrated Performance Primitives (Intel® IPP) crypto library, CryptoMB Private Key Provider Method support in Envoy, and Private Key Provider configuration in Istio using ProxyConfig.

Envoy uses BoringSSL as the default TLS library. BoringSSL supports setting private key methods for offloading asynchronous private key operations, and Envoy implements a private key provider framework to allow creation of Envoy extensions which handle TLS handshakes private key operations (signing and decryption) using the BoringSSL hooks.

CryptoMB private key provider is an Envoy extension which handles BoringSSL TLS RSA operations using Intel AVX-512 multi-buffer acceleration. When a new handshake happens, BoringSSL invokes the private key provider to request the cryptographic operation, and then the control returns to Envoy. The RSA requests are gathered in a buffer. When the buffer is full or the timer expires, the private key provider invokes Intel AVX-512 processing of the buffer. When processing is done, Envoy is notified that the cryptographic operation is done and that it may continue with the handshakes.
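The "flush when the buffer is full or the timer expires" behavior can be modeled with a small simulation. This is a conceptual sketch, not Envoy or CryptoMB code: it performs no cryptography, the `BatchingProvider` class is hypothetical, and the capacity and timeout values are arbitrary illustrations rather than Envoy defaults. Time is passed in explicitly so the example stays deterministic.

```python
class BatchingProvider:
    """Collect requests and hand them off together, by size or by deadline."""

    def __init__(self, capacity, timeout, process_batch):
        self.capacity = capacity            # flush once this many requests wait
        self.timeout = timeout              # or once the oldest has waited this long
        self.process_batch = process_batch  # stand-in for the batched RSA processing
        self.buffer = []
        self.deadline = None

    def submit(self, request, now):
        if not self.buffer:
            self.deadline = now + self.timeout  # timer starts with the first request
        self.buffer.append(request)
        if len(self.buffer) >= self.capacity:
            self.flush()

    def tick(self, now):
        """Called periodically; flushes a partial buffer whose timer has expired."""
        if self.buffer and now >= self.deadline:
            self.flush()

    def flush(self):
        batch, self.buffer = self.buffer, []
        self.deadline = None
        self.process_batch(batch)


processed = []
provider = BatchingProvider(capacity=3, timeout=5, process_batch=processed.append)

provider.submit("handshake-a", now=0)
provider.submit("handshake-b", now=1)
provider.submit("handshake-c", now=2)   # buffer full: flushed immediately
provider.submit("handshake-d", now=10)
provider.tick(now=15)                   # timer expired: partial buffer flushed
print(processed)
```

The timer bounds how long a handshake can wait when traffic is too light to fill the buffer, which is the trade-off between batching efficiency and latency.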

Caveats and Considerations

None

Technologies

Related Patterns

Istio Operator

MESHERY4a76

CryptoMB-TLS-handshake-acceleration-for-Istio

MESHERY42b7

RELATED PATTERNS

Istio Operator

MESHERY4a76

CRYPTOMB-TLS-HANDSHAKE-ACCELERATION-FOR-ISTIO

Description

Envoy uses BoringSSL as the default TLS library. BoringSSL supports setting private key methods for offloading asynchronous private key operations, and Envoy implements a private key provider framework to allow creation of Envoy extensions which handle TLS handshakes private key operations (signing and decryption) using the BoringSSL hooks.

CryptoMB private key provider is an Envoy extension which handles BoringSSL TLS RSA operations using Intel AVX-512 multi-buffer acceleration. When a new handshake happens, BoringSSL invokes the private key provider to request the cryptographic operation, and then the control returns to Envoy. The RSA requests are gathered in a buffer. When the buffer is full or the timer expires, the private key provider invokes Intel AVX-512 processing of the buffer. When processing is done, Envoy is notified that the cryptographic operation is done and that it may continue with the handshakes.


Caveats and Considerations

None

Technologies

Related Patterns

Istio Operator

MESHERY4a76

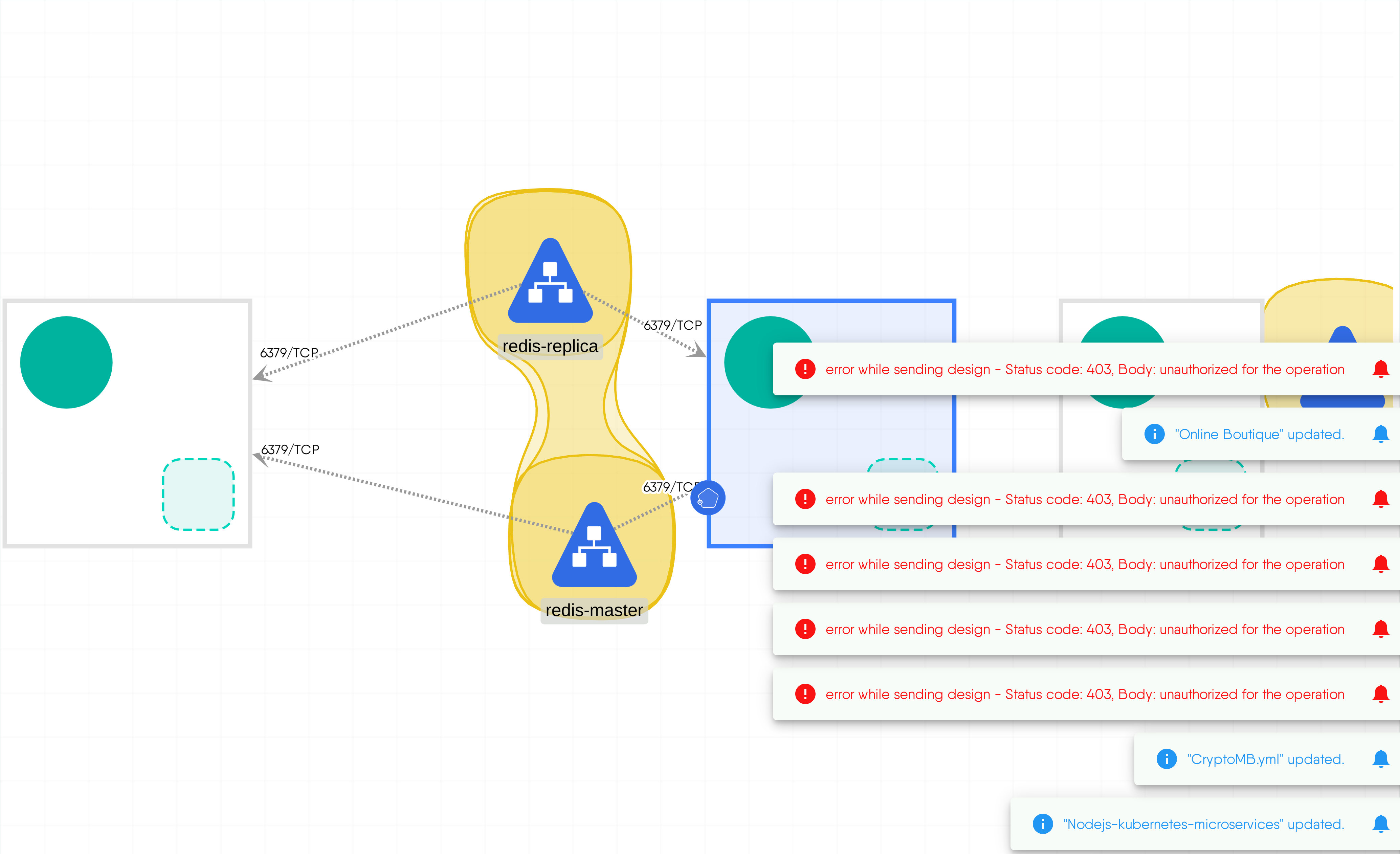

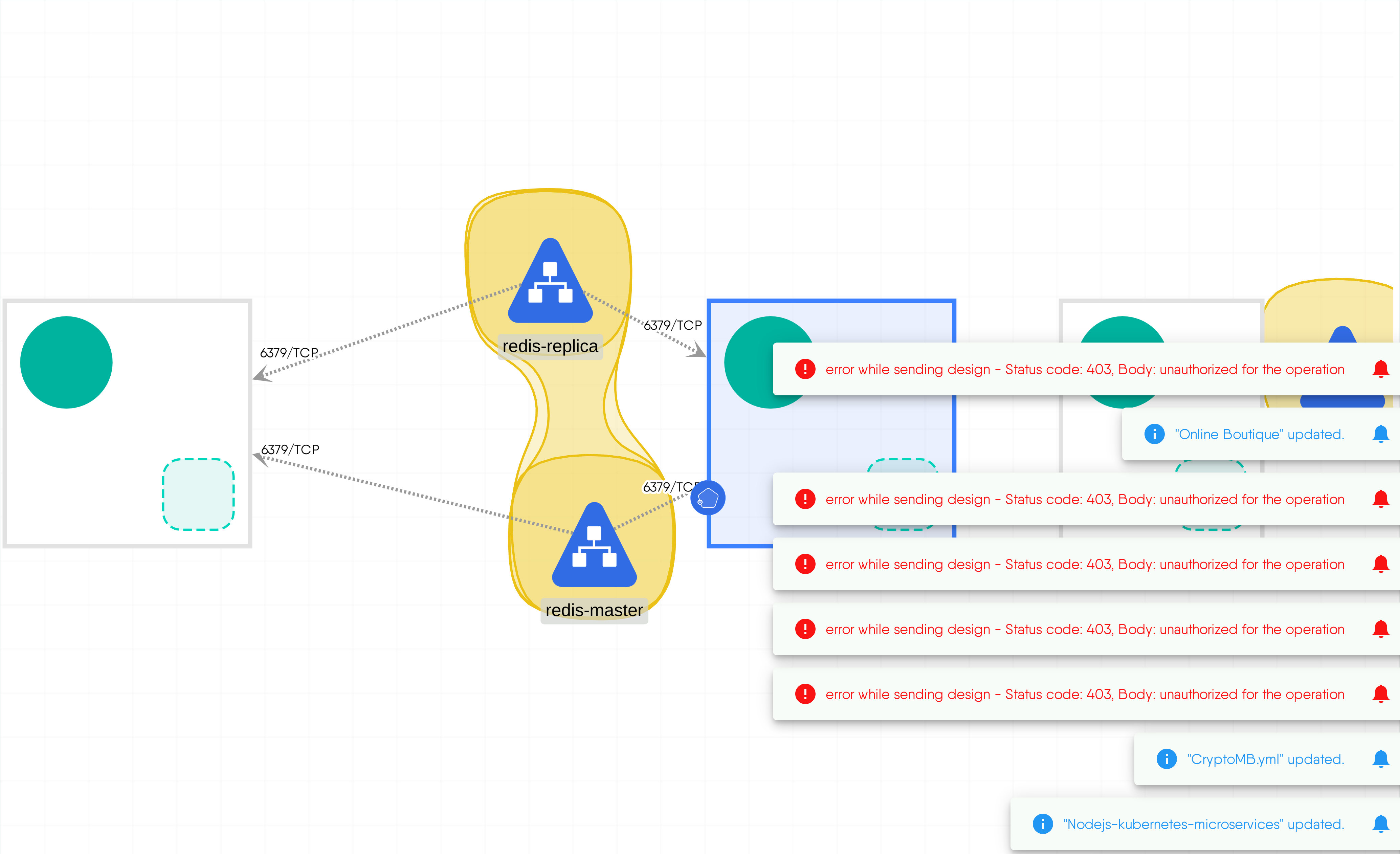

CryptoMB.yml

MESHERY4c1f

RELATED PATTERNS

Pod Readiness

MESHERY4b83

CRYPTOMB.YML

Description

Cryptographic operations are among the most compute-intensive and critical operations when it comes to secured connections. Istio uses Envoy as the “gateways/sidecar” to handle secure connections and intercept the traffic. Depending upon use cases, when an ingress gateway must handle a large number of incoming TLS and secured service-to-service connections through sidecar proxies, the load on Envoy increases. The potential performance depends on many factors, such as size of the cpuset on which Envoy is running, incoming traffic patterns, and key size. These factors can impact Envoy serving many new incoming TLS requests. To achieve performance improvements and accelerated handshakes, a new feature was introduced in Envoy 1.20 and Istio 1.14. It can be achieved with 3rd Gen Intel® Xeon® Scalable processors, the Intel® Integrated Performance Primitives (Intel® IPP) crypto library, CryptoMB Private Key Provider Method support in Envoy, and Private Key Provider configuration in Istio using ProxyConfig.

Caveats and Considerations

Ensure networking is set up properly and that the correct annotations are applied to each resource for the custom Intel configuration.

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Dapr OAuth Authorization to External Service

MESHERY4ce9

RELATED PATTERNS

Browerless Chrome

MESHERY4c4b

DAPR OAUTH AUTHORIZATION TO EXTERNAL SERVICE

Description

This design walks you through setting up the OAuth middleware so that a service can interact with external services that require authentication. It separates authentication and authorization concerns from the application. See https://github.com/dapr/samples/tree/master/middleware-oauth-microsoftazure for more information, and try it out in your own environment.

Caveats and Considerations

Replace the placeholders with actual values and apply the configuration to your Kubernetes cluster:

1. Replace `"YOUR_APPLICATION_ID"`, `"YOUR_CLIENT_SECRET"`, and `"YOUR_TENANT_ID"` with your actual values in the `msgraphsp` component metadata:

```yaml
metadata:
  # OAuth2 ClientID, for Microsoft Identity Platform it is the AAD Application ID
  - name: clientId
    value: "your_actual_application_id"
  # OAuth2 Client Secret
  - name: clientSecret
    value: "your_actual_client_secret"
  # Application Scope for Microsoft Graph API (vs. User Scope)
  - name: scopes
    value: "https://graph.microsoft.com/.default"
  # Token URL for Microsoft Identity Platform, TenantID is the Tenant (also sometimes called Directory) ID of the AAD
  - name: tokenURL
    value: "https://login.microsoftonline.com/your_actual_tenant_id/oauth2/v2.0/token"
```

2. Apply the modified YAML configuration to your Kubernetes cluster using `kubectl apply -f your_file.yaml`.

Before applying the configuration, ensure you have replaced `"your_actual_application_id"`, `"your_actual_client_secret"`, and `"your_actual_tenant_id"` with the values that correspond to your Microsoft Graph application and Azure Active Directory configuration.

Technologies

Related Patterns

Browerless Chrome

MESHERY4c4b

Dapr with Kubernetes events

MESHERY4215

RELATED PATTERNS

Robot Shop Sample App

MESHERY4c4e

DAPR WITH KUBERNETES EVENTS

Description

This design shows an example of running Dapr with a Kubernetes events input binding. You will deploy the Node application, which requires a component definition with a Kubernetes event binding component. See https://github.com/dapr/samples/tree/master/read-kubernetes-events#read-kubernetes-events for more information.

Caveats and Considerations

Make sure to replace values such as Docker images and credentials before trying this out on your local cluster.

Technologies

Related Patterns

Robot Shop Sample App

MESHERY4c4e

Datadog agent on k8's

MESHERY465c

RELATED PATTERNS

Robot Shop Sample App

MESHERY4c4e

DATADOG AGENT ON K8'S

Description

The Datadog Agent is a lightweight software component deployed within Kubernetes clusters to collect metrics, traces, and logs. It automatically monitors Kubernetes resources, including pods and nodes, providing visibility into system performance and application behavior. With features like autodiscovery, tracing, log collection, and extensive integrations, the Datadog Agent helps teams efficiently monitor, troubleshoot, and optimize their Kubernetes-based applications and infrastructure.

Caveats and Considerations

This is a basic example of deploying the Datadog Agent on Kubernetes. For more details, refer to the official docs: https://docs.datadoghq.com/containers/kubernetes/installation/?tab=operator

Technologies

Related Patterns

Robot Shop Sample App

MESHERY4c4e

Delay Action for Chaos Mesh

MESHERY4dcc

RELATED PATTERNS

Postgres Deployment

MESHERY49ba

DELAY ACTION FOR CHAOS MESH

Description

A simple example

Caveats and Considerations

An example of the delay action.
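For orientation, a delay action in Chaos Mesh is typically expressed as a `NetworkChaos` resource. The manifest below is a minimal sketch and not the catalog design itself: the resource name, namespace, label selector, latency, and duration are all assumptions chosen for illustration.

```yaml
# Hypothetical sketch of a Chaos Mesh delay experiment.
apiVersion: chaos-mesh.org/v1alpha1
kind: NetworkChaos
metadata:
  name: delay-example          # hypothetical name
spec:
  action: delay                # inject network latency
  mode: one                    # affect one randomly selected matching pod
  selector:
    namespaces:
      - default                # assumed target namespace
    labelSelectors:
      app: web                 # assumed label on the target pods
  delay:
    latency: "10ms"            # assumed added latency
    jitter: "0ms"
    correlation: "100"
  duration: "30s"              # assumed experiment length
```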

Technologies

Related Patterns

Postgres Deployment

MESHERY49ba

Deployment Web

MESHERY477c

RELATED PATTERNS

Pod Readiness

MESHERY4b83

DEPLOYMENT WEB

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Distributed Database w/ Shardingshpere

MESHERY4ba3

RELATED PATTERNS

Pod Readiness

MESHERY4b83

DISTRIBUTED DATABASE W/ SHARDINGSHPERE

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

ELK stack

MESHERY4b16

RELATED PATTERNS

Robot Shop Sample App

MESHERY4c4e

ELK STACK

Description

An ELK stack deployed in Kubernetes with a simple Python app, using Logstash, Kibana, Filebeat, and Elasticsearch.

Caveats and Considerations

The technologies included are Kubernetes, Elasticsearch, Logstash, Kibana, Python, etc.

Technologies

Related Patterns

Robot Shop Sample App

MESHERY4c4e

Edge Permission Relationship

MESHERY4ce5

RELATED PATTERNS

Istio Operator

MESHERY4a76

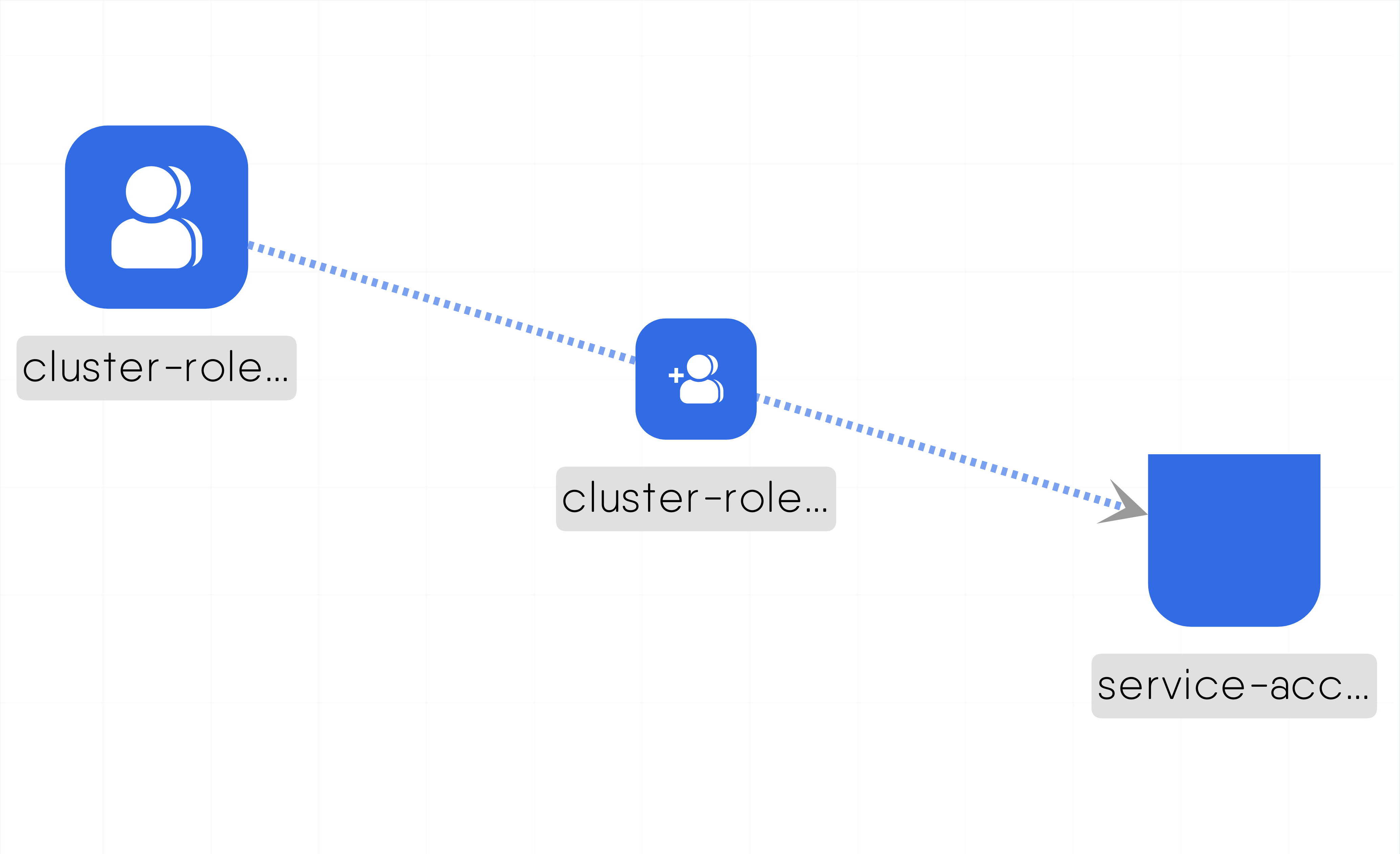

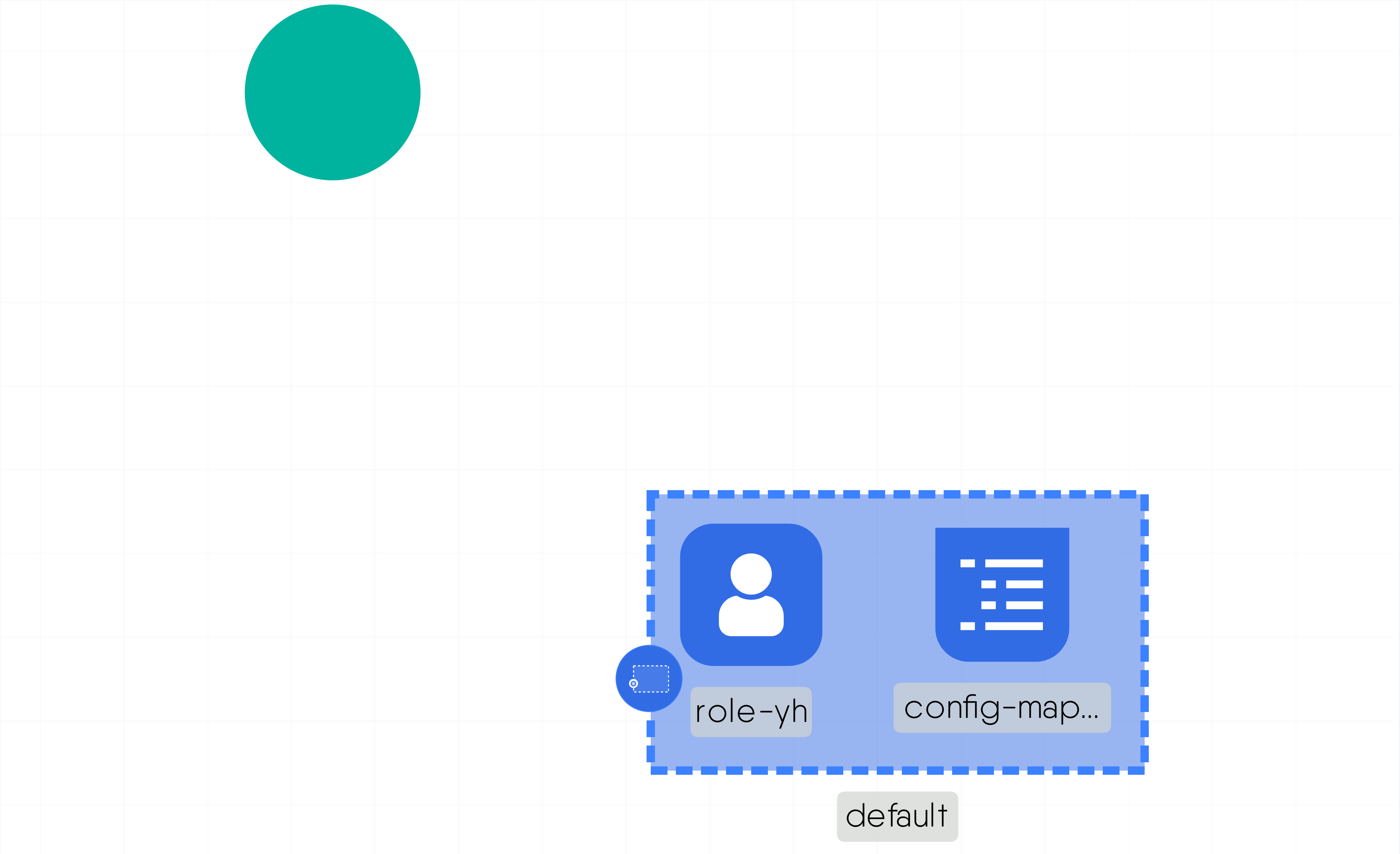

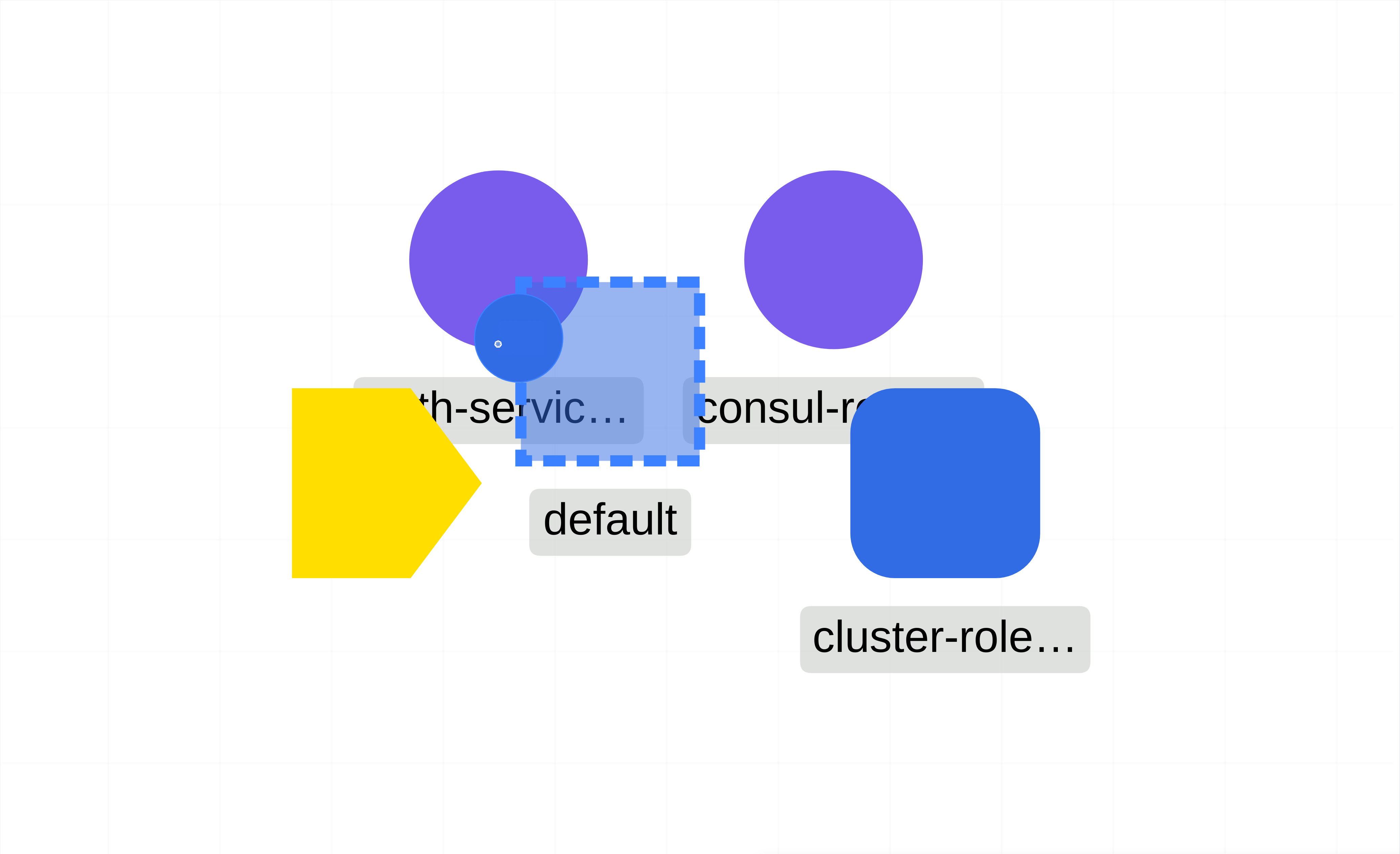

EDGE PERMISSION RELATIONSHIP

Description

A relationship that binds permissions between components. For example, a ClusterRole defines a set of permissions, and a ClusterRoleBinding binds those permissions to subjects such as service accounts.
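As a rough illustration of the three resources involved, the sketch below wires a ServiceAccount to a ClusterRole through a ClusterRoleBinding. All names (`pod-reader`, `pod-reader-sa`, `pod-reader-binding`) and the chosen permissions are hypothetical and are not taken from the catalog design.

```yaml
# Hypothetical subject that receives the permissions.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: pod-reader-sa
  namespace: default
---
# The set of permissions.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-reader
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
# The binding: roleRef points at the ClusterRole, subjects at the ServiceAccount.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: pod-reader-binding
subjects:
  - kind: ServiceAccount
    name: pod-reader-sa        # must match the ServiceAccount name
    namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: pod-reader             # must match the ClusterRole name
```

The binding holds only while the names in `subjects` and `roleRef` match the referenced resources.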

Caveats and Considerations

NA

Technologies

Related Patterns

Istio Operator

MESHERY4a76

ElasticSearch

MESHERY4654

RELATED PATTERNS

ELASTICSEARCH

Description

Kubernetes makes it trivial for anyone to easily build and scale Elasticsearch clusters. Here, you'll find how to do so. Current Elasticsearch version is 5.6.2.

Caveats and Considerations

Elasticsearch for Kubernetes: Current pod descriptors use an emptyDir for storing data in each data node container. This is meant to be for the sake of simplicity and should be adapted according to your storage needs.
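To make the storage caveat concrete, the fragment below sketches what an `emptyDir` volume looks like in a pod spec and, in comments, one possible persistent replacement. The volume and claim names are hypothetical; the actual pod descriptors in this design may name things differently.

```yaml
# Fragment of a data-node pod spec; all names here are hypothetical.
volumes:
  - name: storage
    emptyDir: {}               # data is deleted when the pod is removed from its node
# One persistent alternative would replace the entry above with, for example:
#  - name: storage
#    persistentVolumeClaim:
#      claimName: es-data-claim   # hypothetical PVC that must already exist
```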

Technologies

Related Patterns

Emojivoto Application

MESHERY4c01

RELATED PATTERNS

Pod Readiness

MESHERY4b83

EMOJIVOTO APPLICATION

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Envoy using BoringSSL

MESHERY447c

ENVOY USING BORINGSSL

Description

Envoy uses BoringSSL as the default TLS library. BoringSSL supports setting private key methods for offloading asynchronous private key operations, and Envoy implements a private key provider framework to allow creation of Envoy extensions which handle TLS handshakes private key operations (signing and decryption) using the BoringSSL hooks.

CryptoMB private key provider is an Envoy extension which handles BoringSSL TLS RSA operations using Intel AVX-512 multi-buffer acceleration. When a new handshake happens, BoringSSL invokes the private key provider to request the cryptographic operation, and then the control returns to Envoy. The RSA requests are gathered in a buffer. When the buffer is full or the timer expires, the private key provider invokes Intel AVX-512 processing of the buffer. When processing is done, Envoy is notified that the cryptographic operation is done and that it may continue with the handshakes.

Caveats and Considerations

test

Technologies

Example Edge-Firewall Relationship

MESHERY490f

RELATED PATTERNS

Istio Operator

MESHERY4a76

EXAMPLE EDGE-FIREWALL RELATIONSHIP

Description

A relationship that acts as a firewall for ingress and egress traffic to and from Pods.
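Assuming the firewall is realized with a Kubernetes NetworkPolicy, a minimal sketch could look like the following. The policy name, labels, and ports are hypothetical and only illustrate how ingress and egress rules attach to a set of Pods.

```yaml
# Hypothetical NetworkPolicy restricting traffic for pods labeled app=web.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: web-firewall
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: web                 # pods the policy applies to
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend   # only these pods may connect in
      ports:
        - protocol: TCP
          port: 80
  egress:
    - to:
        - podSelector:
            matchLabels:
              role: db         # only these pods may be reached
      ports:
        - protocol: TCP
          port: 5432
```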

Caveats and Considerations

NA

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Example Edge-Network Relationship

MESHERY4ee9

RELATED PATTERNS

Istio Operator

MESHERY4a76

EXAMPLE EDGE-NETWORK RELATIONSHIP

Description

This design showcases the operational dynamics of the Edge-Network relationship. There are two ways to use this design in your architecture:

1. Clone this design by clicking the clone button.
2. Start from scratch by creating an Edge-Network relationship on your own.

How to create an Edge-Network relationship on your own:

1. Navigate to MeshMap.
2. Click the Kubernetes icon inside the dock. This opens a Kubernetes drawer from which you can select any component that Kubernetes supports.
3. Search for the Ingress and Service components using the search bar provided in the drawer.
4. Drag and drop both components onto the canvas.
5. Hover over the Ingress component. Handlebars will show up on the four sides of the component.
6. Move the cursor close to any of the handlebars. An arrow will show up; click on that arrow. This opens two options:
   1. Question mark: opens the Help Center.
   2. Arrow (edge handle): used for creating the edge relationship.
7. Click the edge handle and move your cursor close to the Service component. An edge appears going from the Ingress to the Service component, representing the edge relationship between the two components.
8. Congratulations! You just created a relationship between Ingress and Service.
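In manifest form, the edge drawn between the two components corresponds roughly to an Ingress whose backend names a Service. The following is a sketch under assumed names (`web-service`, `web-ingress`) and ports, not the exact output of the design.

```yaml
# Hypothetical Service that the Ingress routes to.
apiVersion: v1
kind: Service
metadata:
  name: web-service
spec:
  selector:
    app: web
  ports:
    - port: 80
      targetPort: 8080
---
# Hypothetical Ingress; the backend reference is what links it to the Service.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-ingress
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web-service   # must match the Service name
                port:
                  number: 80        # must match a Service port
```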

Caveats and Considerations

No Caveats or Considerations

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Example Edge-Permission Relationship

MESHERY4f9f

RELATED PATTERNS

Istio Operator

MESHERY4a76

EXAMPLE EDGE-PERMISSION RELATIONSHIP

Description

This design showcases the operational dynamics of the Edge-Permission relationship. To engage with its functionality, follow the steps below:

1. Duplicate this design by cloning it.
2. Modify the name of the service account.

Upon completion, the connection visually represented by the edge vanishes, and the ClusterRoleBinding (CRB) is disassociated from both the ClusterRole (CR) and the Service Account (SA). To restore this relationship, you can either:

1. Drag the CRB from the CR to the SA, then release the mouse click. This recreates the relationship, because the relationship constraints are satisfied.
2. Revert the name of the SA. This automatically recreates the relationship, because the relationship constraints are satisfied.

These are a few of the ways to experience this relationship.

Caveats and Considerations

NA

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Example Labels and Annotations

MESHERY4649

RELATED PATTERNS

Pod Readiness

MESHERY4b83

EXAMPLE LABELS AND ANNOTATIONS

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

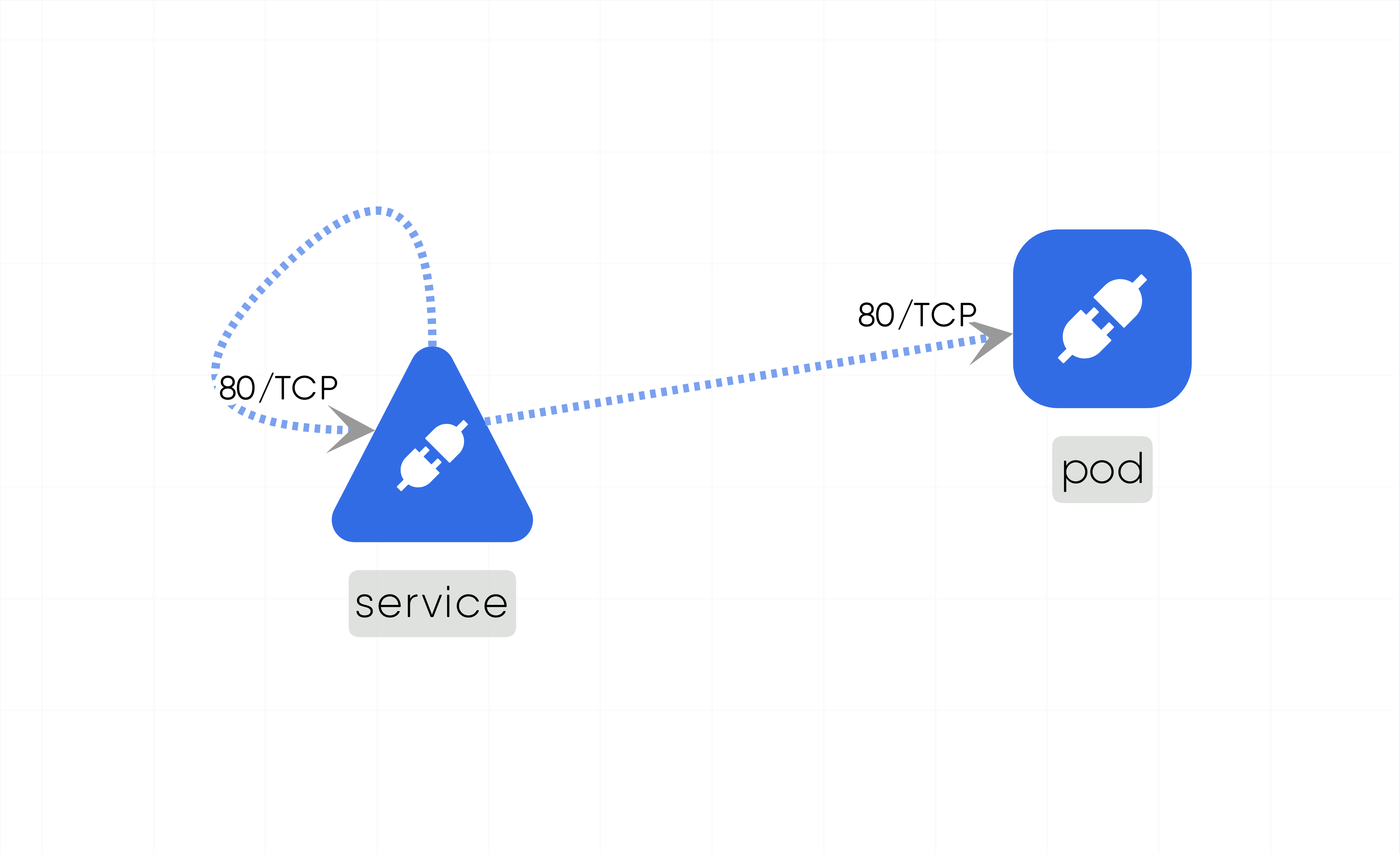

Exploring Kubernetes Pods With Meshery

MESHERY44f5

RELATED PATTERNS

Istio Operator

MESHERY4a76

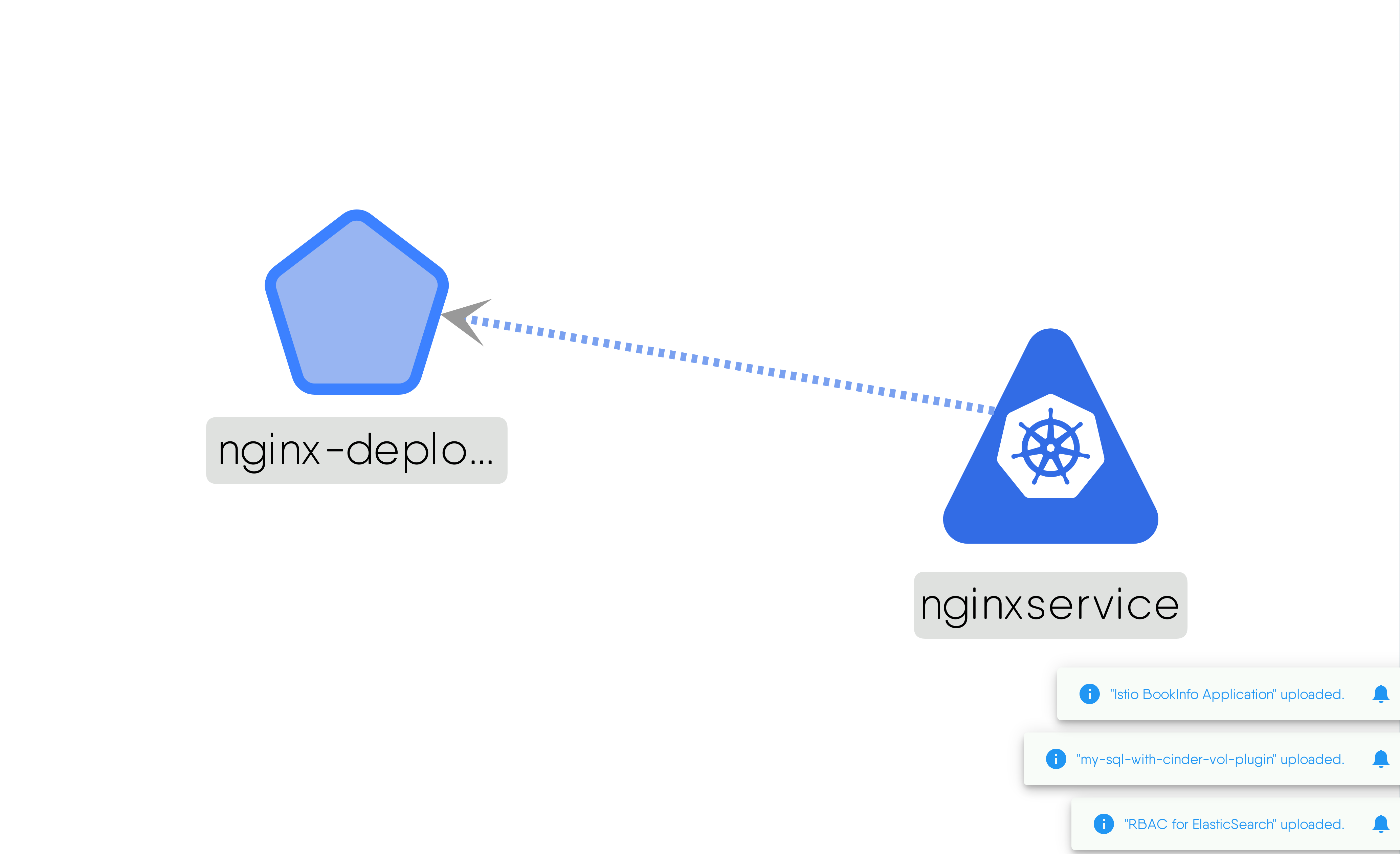

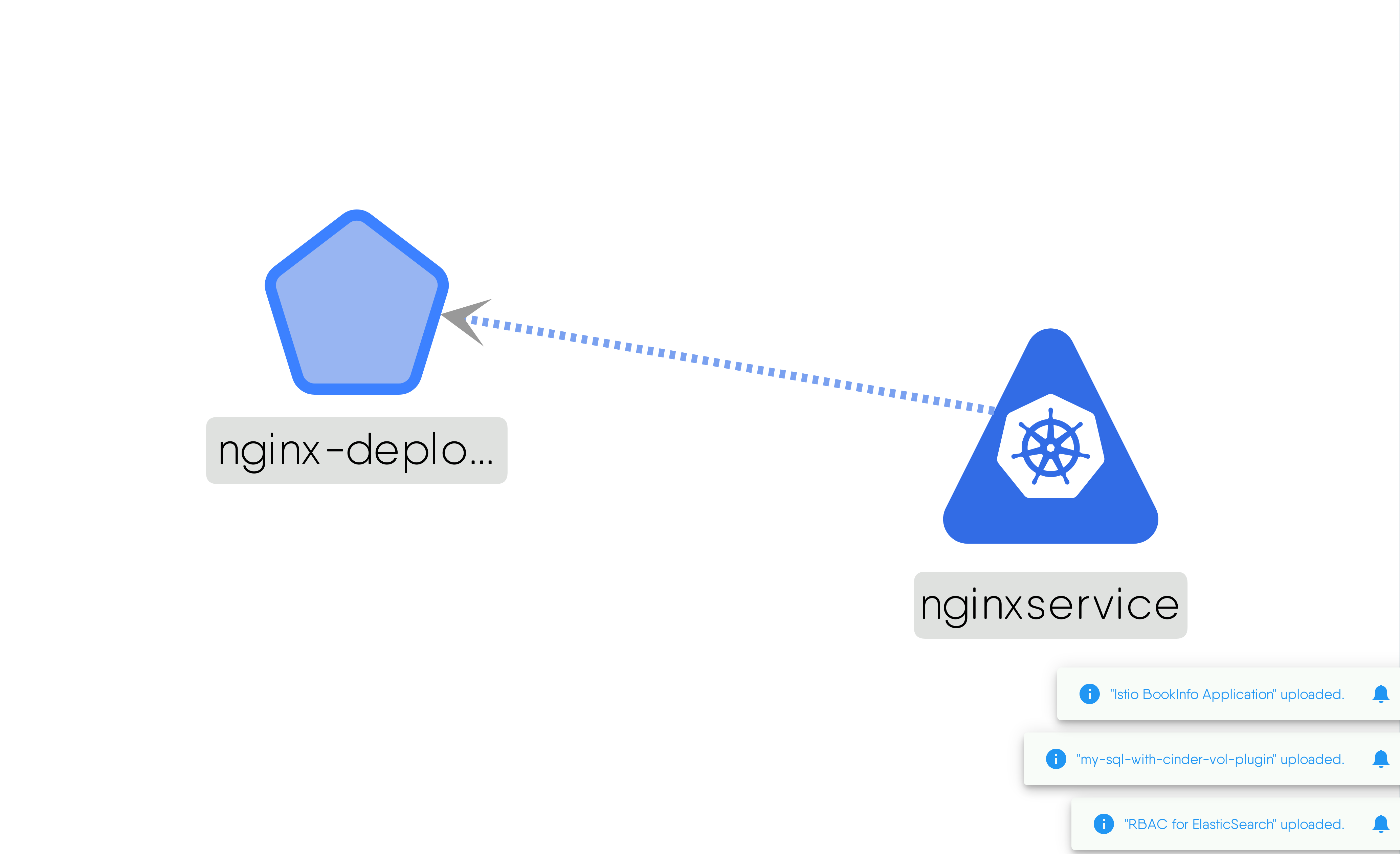

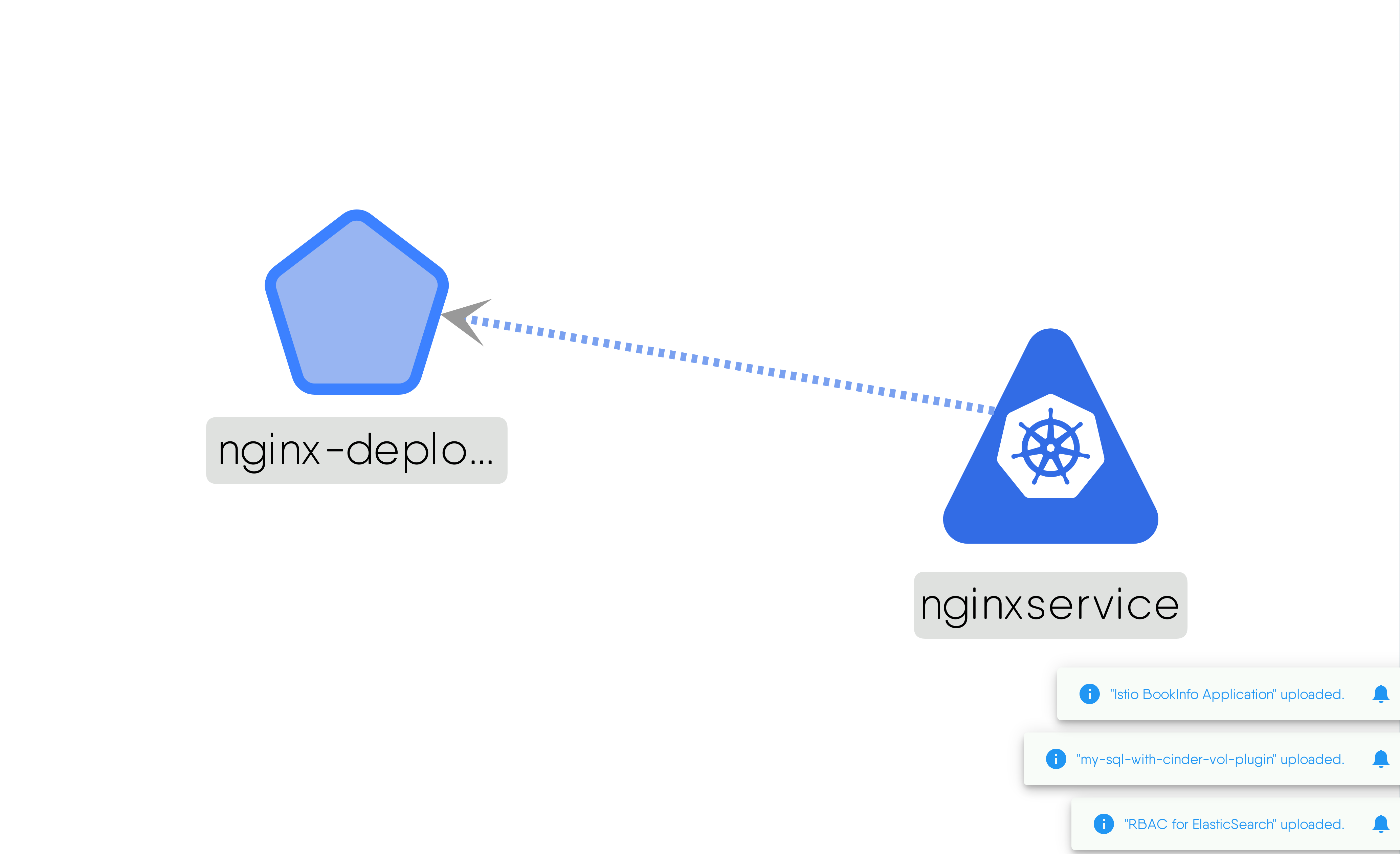

EXPLORING KUBERNETES PODS WITH MESHERY

Description

This design maps to the "Exploring Kubernetes Pods with Meshery" tutorial and is the end result of the design. It can be used to quickly deploy an nginx pod exposed through a service.

Caveats and Considerations

Service type is NodePort.
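A minimal sketch of what such a design might contain is shown below: one nginx Pod and a NodePort Service selecting it. The resource names, label, and ports are assumptions for illustration; the tutorial's actual manifests may differ.

```yaml
# Hypothetical nginx Pod.
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
---
# Hypothetical Service exposing the Pod on a port of every node.
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    app: nginx                 # matches the Pod label above
  ports:
    - port: 80
      targetPort: 80           # nodePort is left for Kubernetes to assign
```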

Technologies

Related Patterns

Istio Operator

MESHERY4a76

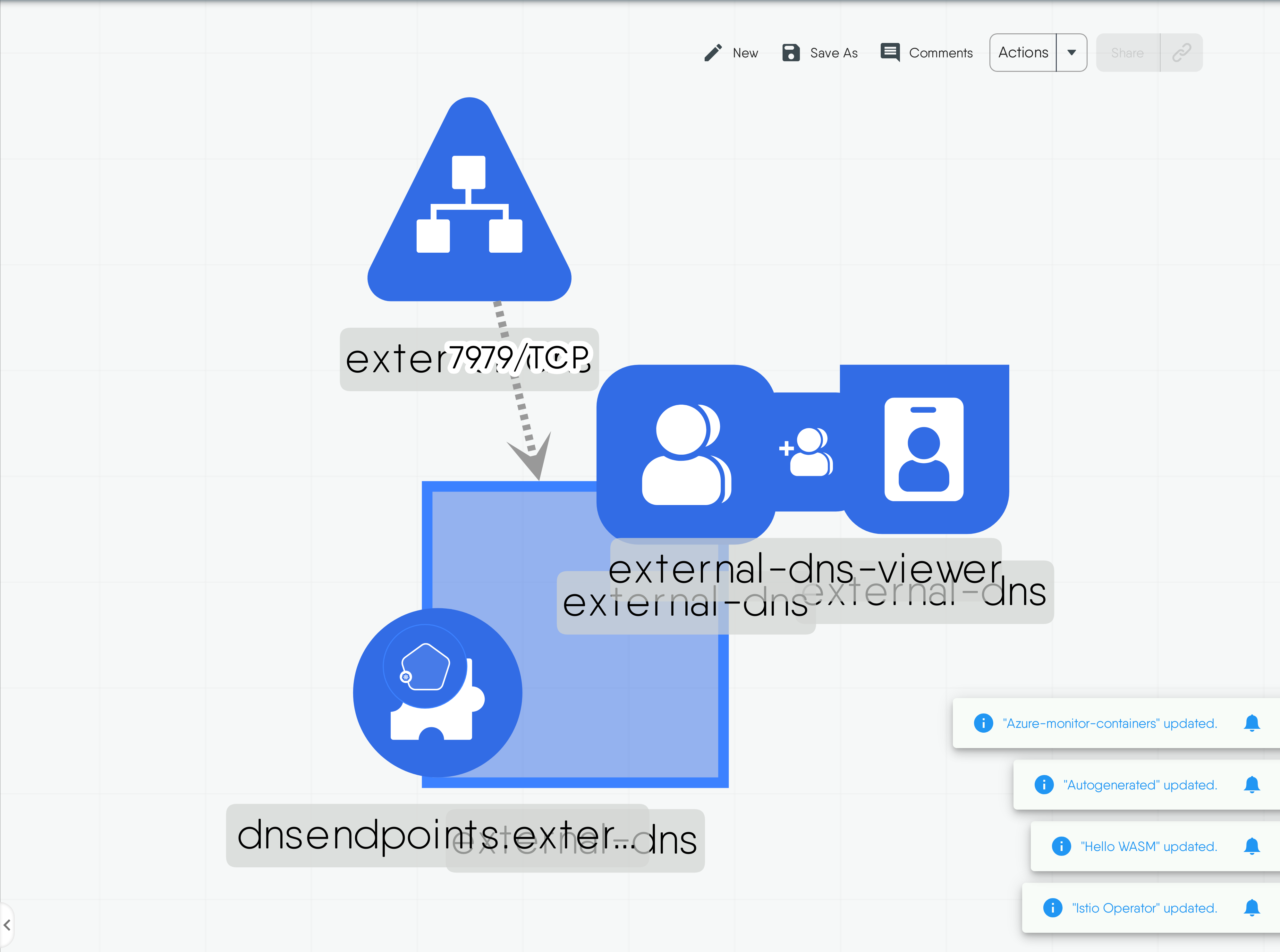

External-Dns for Kubernetes

MESHERY4db0

RELATED PATTERNS

Service Internal Traffic Policy

MESHERY41b6

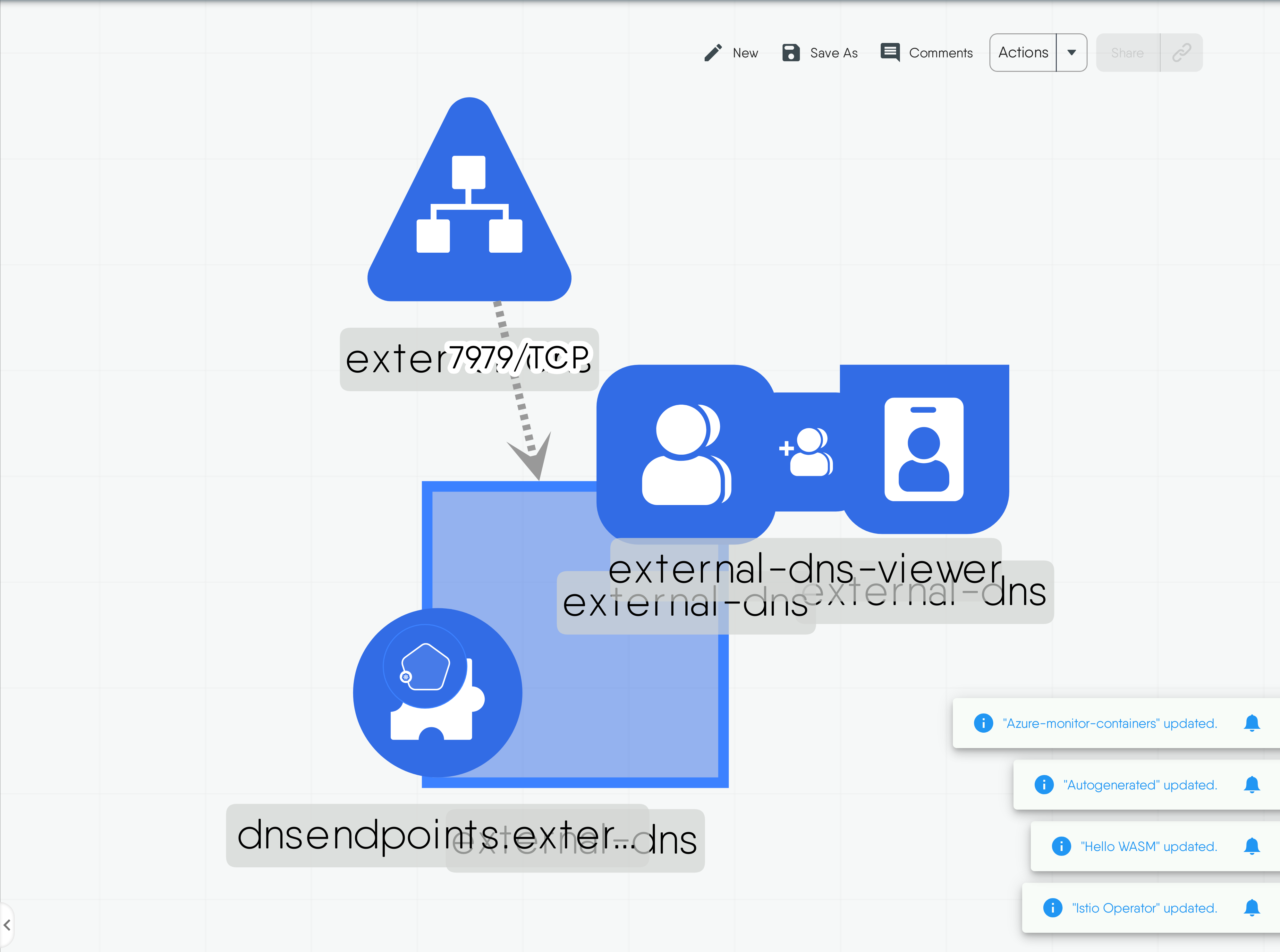

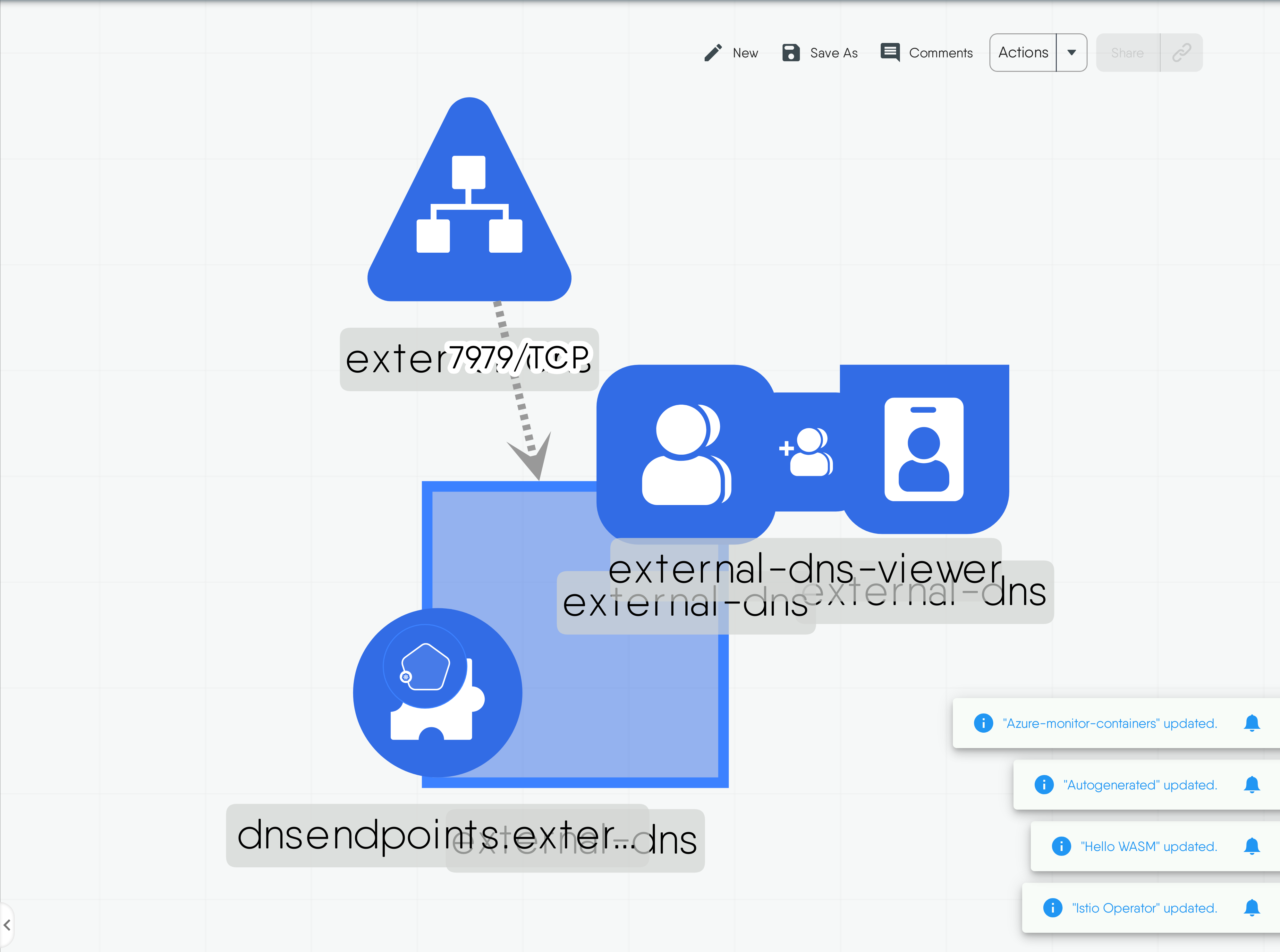

EXTERNAL-DNS FOR KUBERNETES

Description

ExternalDNS synchronizes exposed Kubernetes Services and Ingresses with DNS providers. Inspired by Kubernetes DNS, Kubernetes' cluster-internal DNS server, ExternalDNS makes Kubernetes resources discoverable via public DNS servers. Like KubeDNS, it retrieves a list of resources (Services, Ingresses, etc.) from the Kubernetes API to determine a desired list of DNS records. Unlike KubeDNS, however, it's not a DNS server itself, but merely configures other DNS providers accordingly, e.g. AWS Route 53 or Google Cloud DNS. In a broader sense, ExternalDNS allows you to control DNS records dynamically via Kubernetes resources in a DNS provider-agnostic way.
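As an illustration of how a resource is marked for synchronization, the sketch below annotates a Service with the hostname ExternalDNS should publish. The Service name, selector, and the `nginx.example.org` hostname are placeholders, and a running ExternalDNS deployment configured for your DNS provider is assumed.

```yaml
# Hypothetical Service; ExternalDNS reads the hostname annotation
# and creates a matching record at the configured DNS provider.
apiVersion: v1
kind: Service
metadata:
  name: nginx
  annotations:
    external-dns.alpha.kubernetes.io/hostname: nginx.example.org
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
```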

Caveats and Considerations

For more information and considerations, see this repo: https://github.com/kubernetes-sigs/external-dns/?tab=readme-ov-file

Technologies

Related Patterns

Service Internal Traffic Policy

MESHERY41b6

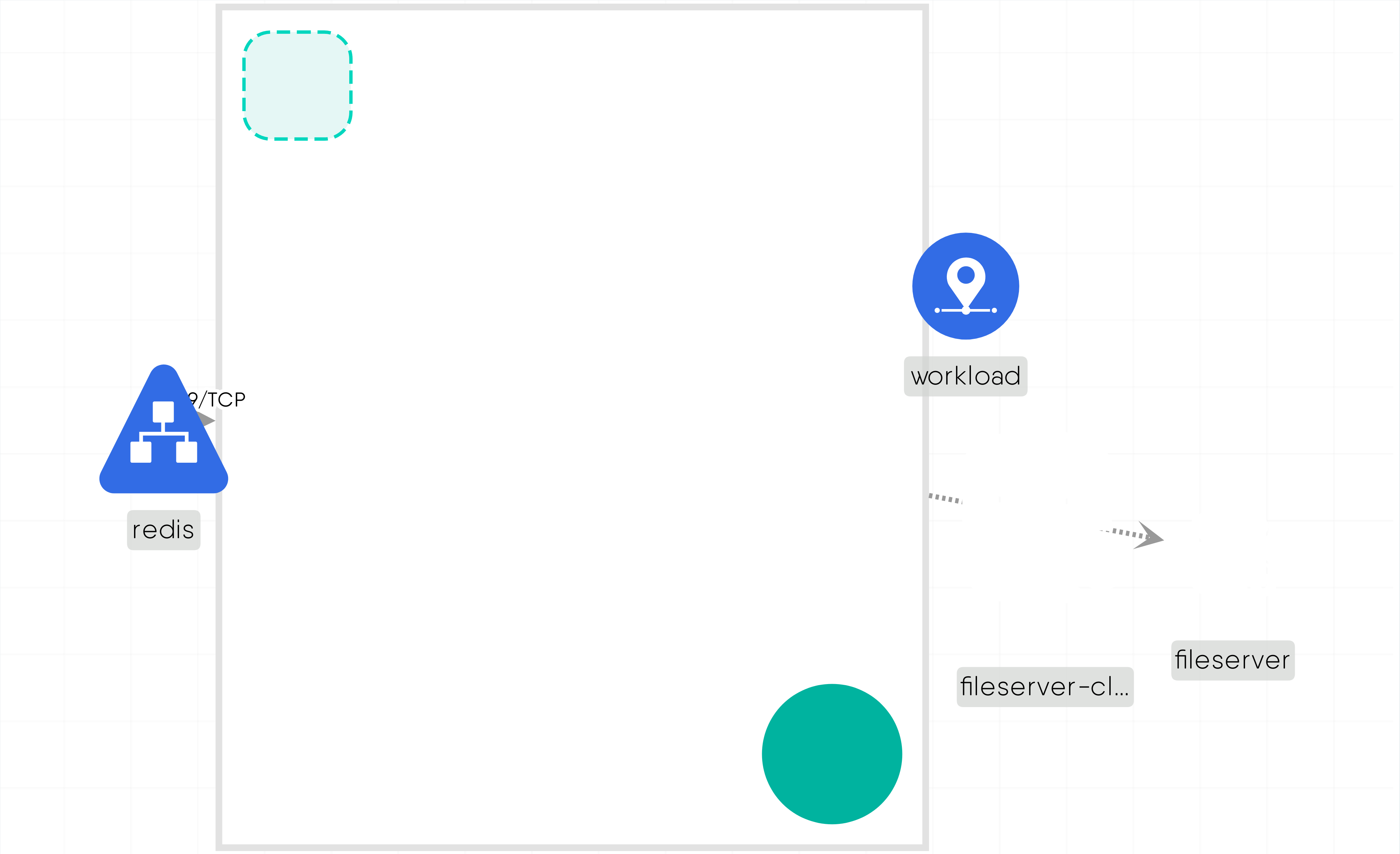

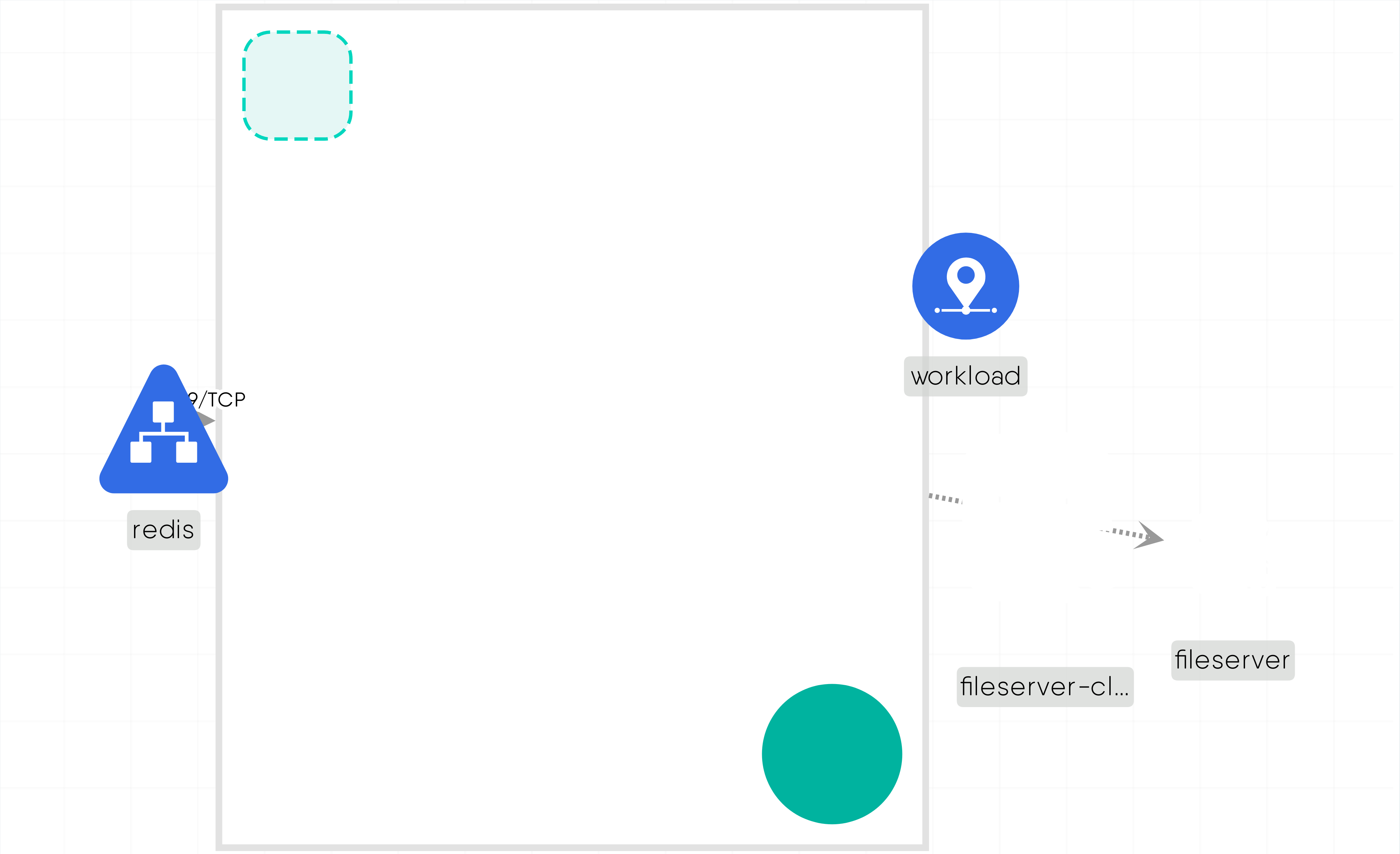

Fault-tolerant batch workloads on GKE

MESHERY4b55

RELATED PATTERNS

Istio Operator

MESHERY4a76

FAULT-TOLERANT BATCH WORKLOADS ON GKE

Description

A batch workload is a process typically designed to have a start and a completion point. You should consider batch workloads on GKE if your architecture involves ingesting, processing, and outputting data instead of using raw data. Areas like machine learning, artificial intelligence, and high performance computing (HPC) feature different kinds of batch workloads, such as offline model training, batched prediction, data analytics, simulation of physical systems, and video processing.

By designing containerized batch workloads, you can leverage the following GKE benefits:

- An open standard, broad community, and managed service.
- Cost efficiency from effective workload and infrastructure orchestration and specialized compute resources.
- Isolation and portability of containerization, allowing the use of cloud as overflow capacity while maintaining data security.
- Availability of burst capacity, followed by rapid scale down of GKE clusters.
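A workload with a defined start and completion point maps naturally onto a Kubernetes Job. The manifest below is a generic sketch, not the design from the catalog: the name, image, command, and the completion, parallelism, and retry counts are all assumptions.

```yaml
# Hypothetical Job that runs five work items, two at a time.
apiVersion: batch/v1
kind: Job
metadata:
  name: batch-example
spec:
  completions: 5               # total successful pods required
  parallelism: 2               # pods running at once
  backoffLimit: 4              # retries before the Job is marked failed
  template:
    spec:
      restartPolicy: OnFailure # restart failed containers in place
      containers:
        - name: worker
          image: busybox:1.36
          command: ["sh", "-c", "echo processing one work item && sleep 5"]
```

Retries through `backoffLimit` and `restartPolicy` are one simple way to tolerate individual pod failures.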

Caveats and Considerations

Ensure proper networking of components for efficient functioning

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Fortio Server

MESHERY4614

RELATED PATTERNS

Istio Operator

MESHERY4a76

FORTIO SERVER

Description

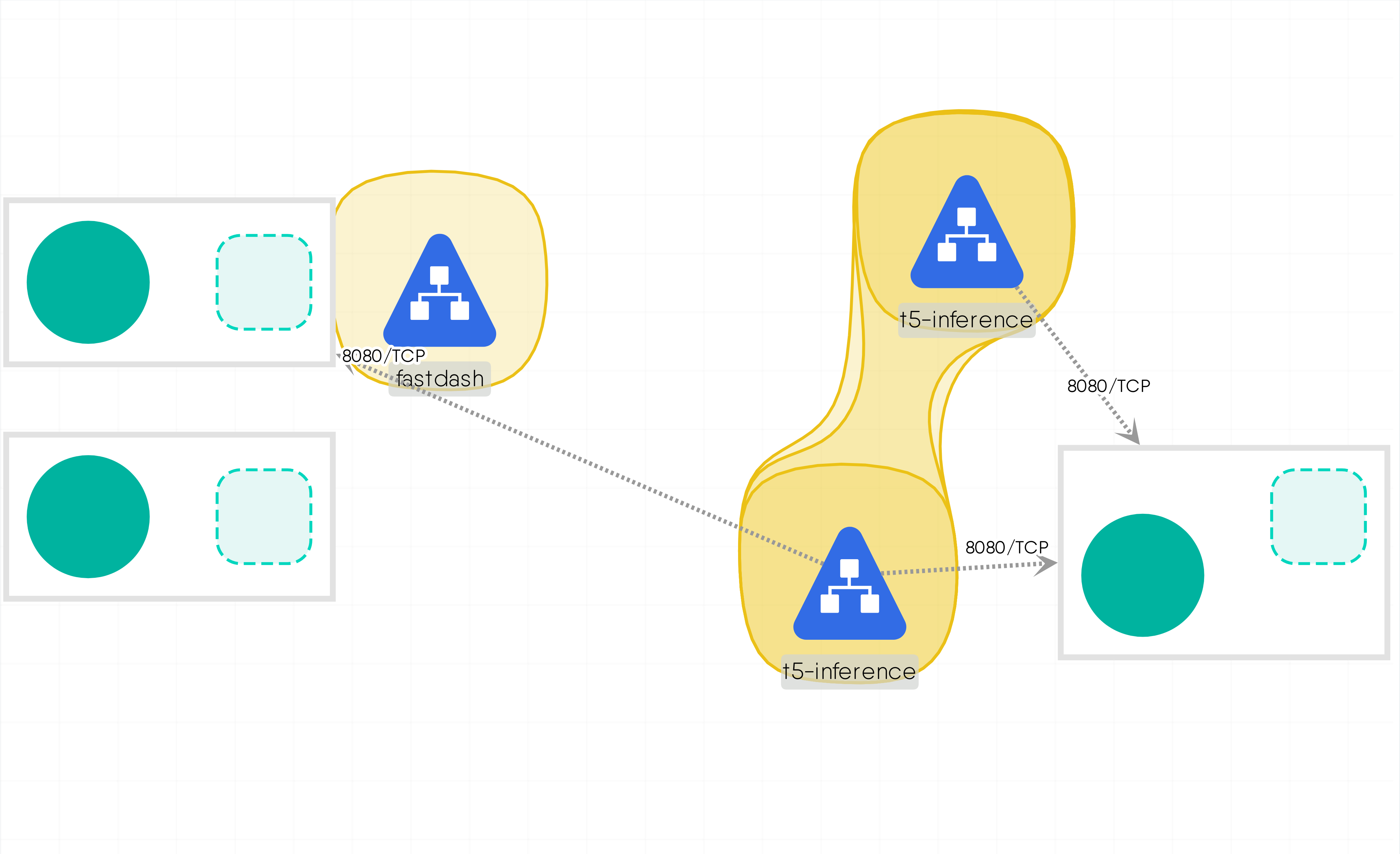

This infrastructure design defines a service and a deployment for a component called Fortio-server.

**Service: fortio-server-service**

- Type: Kubernetes Service
- Namespace: Default
- Port: Exposes port 8080
- Selector: Routes traffic to pods with the label app: fortio-server
- Session Affinity: None
- Service Type: ClusterIP
- MeshMap Metadata: Describes its relationship with Kubernetes and its category as Scheduling & Orchestration.
- Position: Positioned within a graphical representation of infrastructure.

**Deployment: fortio-server-deployment**

- Type: Kubernetes Deployment
- Namespace: Default
- Replicas: 1
- Selector: Matches pods with the label app: fortio-server
- Pod Template: Specifies a container image for Fortio-server, its resource requests, and a service account.
- Container Image: Uses the fortio/fortio:1.32.1 image
- MeshMap Metadata: Specifies its parent-child relationship with the fortio-server-service and provides styling information.
- Position: Positioned relative to the service within the infrastructure diagram.

This configuration sets up a service and a corresponding deployment for Fortio-server in a Kubernetes environment. The service exposes port 8080, while the deployment runs a container with the Fortio-server image. These components are visualized using MeshMap for tracking and visualization purposes.
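Translated into plain manifests, the description above corresponds roughly to the sketch below. The container name, `targetPort`, and `containerPort` are assumptions; the service account, resource requests, and MeshMap metadata are omitted because their values are not given in the description.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: fortio-server-service
  namespace: default
spec:
  type: ClusterIP
  sessionAffinity: None
  selector:
    app: fortio-server
  ports:
    - port: 8080
      targetPort: 8080         # assumed to match the container port
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fortio-server-deployment
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: fortio-server
  template:
    metadata:
      labels:
        app: fortio-server
    spec:
      containers:
        - name: fortio-server  # hypothetical container name
          image: fortio/fortio:1.32.1
          ports:
            - containerPort: 8080
```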

Caveats and Considerations

Ensure networking is set up properly and enough resources are available.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Gerrit operator

MESHERY4f6c

RELATED PATTERNS

Istio Operator

MESHERY4a76

GERRIT OPERATOR

Description

This YAML configuration defines a Kubernetes Deployment named "gerrit-operator-deployment" for managing a containerized application called "gerrit-operator". It specifies that one replica of the application should be deployed. The Deployment ensures that the application is always running by managing pod replicas based on the provided selector labels. The template section describes the pod specification, including labels, service account, security context, and container configuration. The container named "gerrit-operator-container" is configured with an image from a container registry, with resource limits and requests defined for CPU and memory. Environment variables are set for various parameters like the namespace, pod name, and platform type. Additionally, specific intervals for syncing Gerrit projects and group members are defined. Further configuration options can be added as needed, such as volumes and initContainers.

Caveats and Considerations

1. Resource Requirements: Ensure that the resource requests and limits specified for CPU and memory are appropriate for the workload and the cluster's capacity to prevent performance issues or resource contention.
2. Image Pull Policy: The imagePullPolicy set to "Always" ensures that the latest image version is always pulled from the container registry. This may increase deployment time and consume more network bandwidth, so consider the trade-offs based on your deployment requirements.
3. Security Configuration: The security context settings, such as runAsNonRoot and allowPrivilegeEscalation: false, enhance pod security by enforcing non-root user execution and preventing privilege escalation. Verify that these settings align with your organization's security policies.
4. Environment Variables: Review the environment variables set for WATCH_NAMESPACE, POD_NAME, PLATFORM_TYPE, GERRIT_PROJECT_SYNC_INTERVAL, and GERRIT_GROUP_MEMBER_SYNC_INTERVAL to ensure they are correctly configured for your deployment environment and application requirements.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

GlusterFS Service

MESHERY4aa9

RELATED PATTERNS

Pod Readiness

MESHERY4b83

GLUSTERFS SERVICE

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

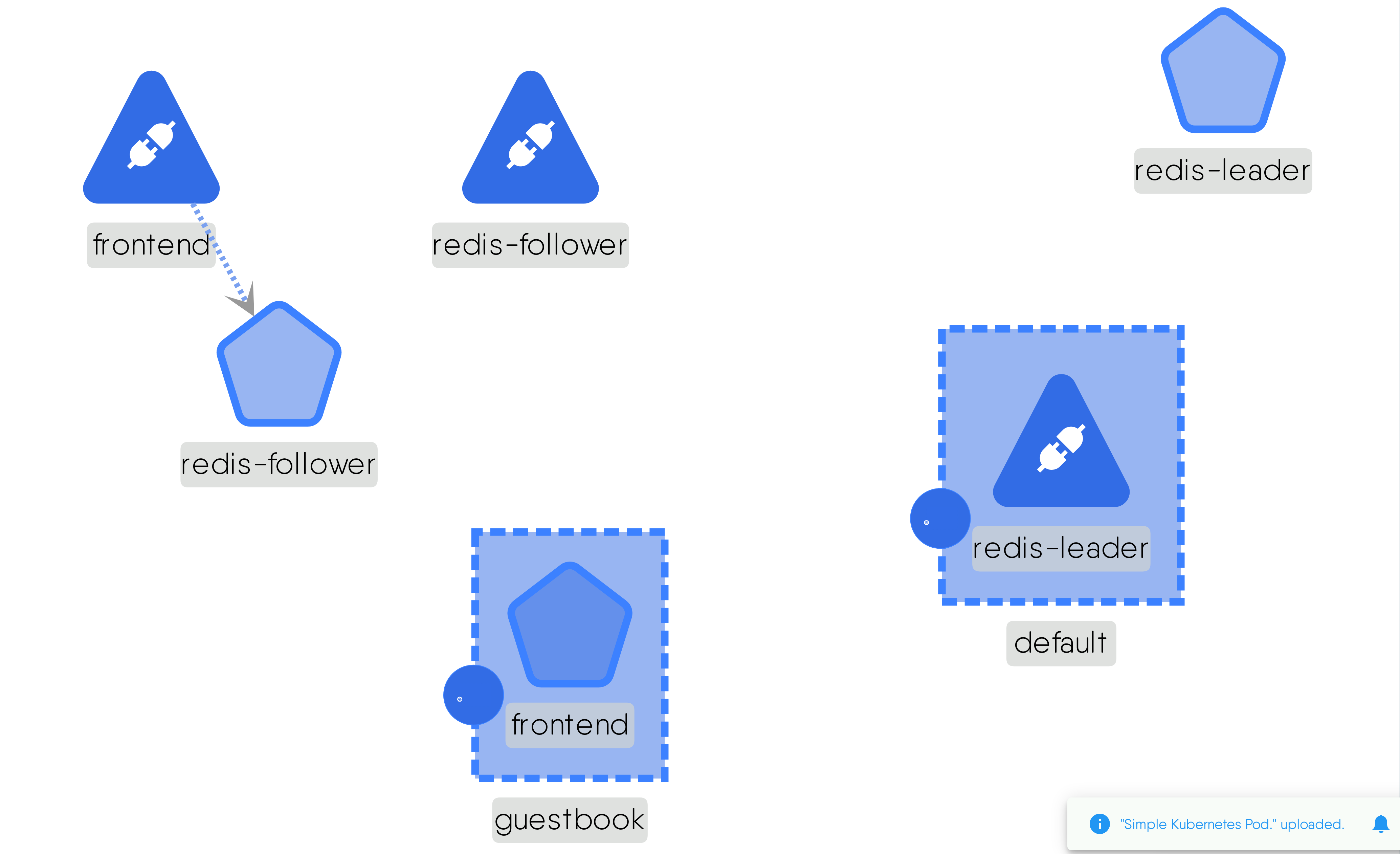

GuestBook App

MESHERY4a54

RELATED PATTERNS

Istio Operator

MESHERY4a76

GUESTBOOK APP

Description

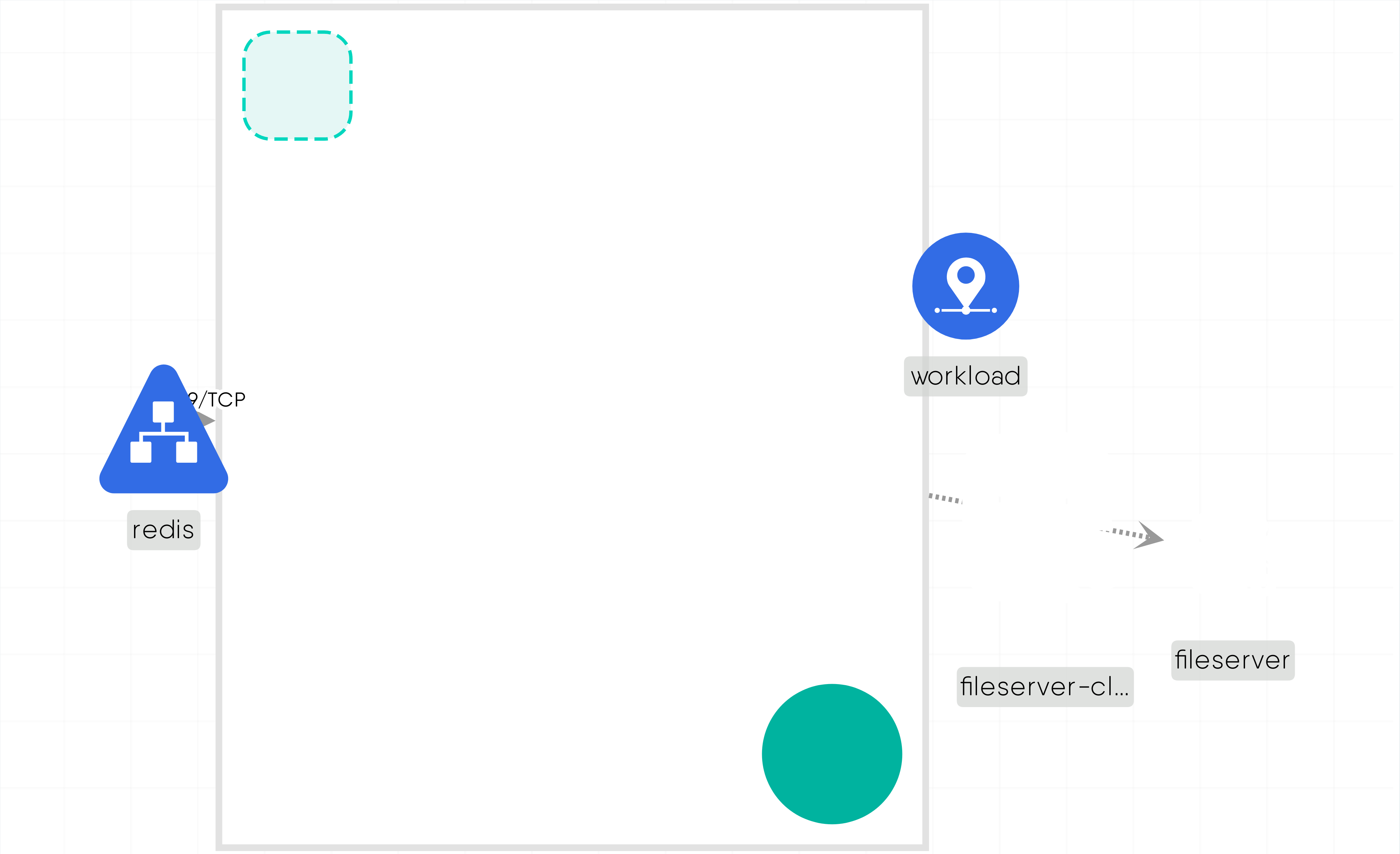

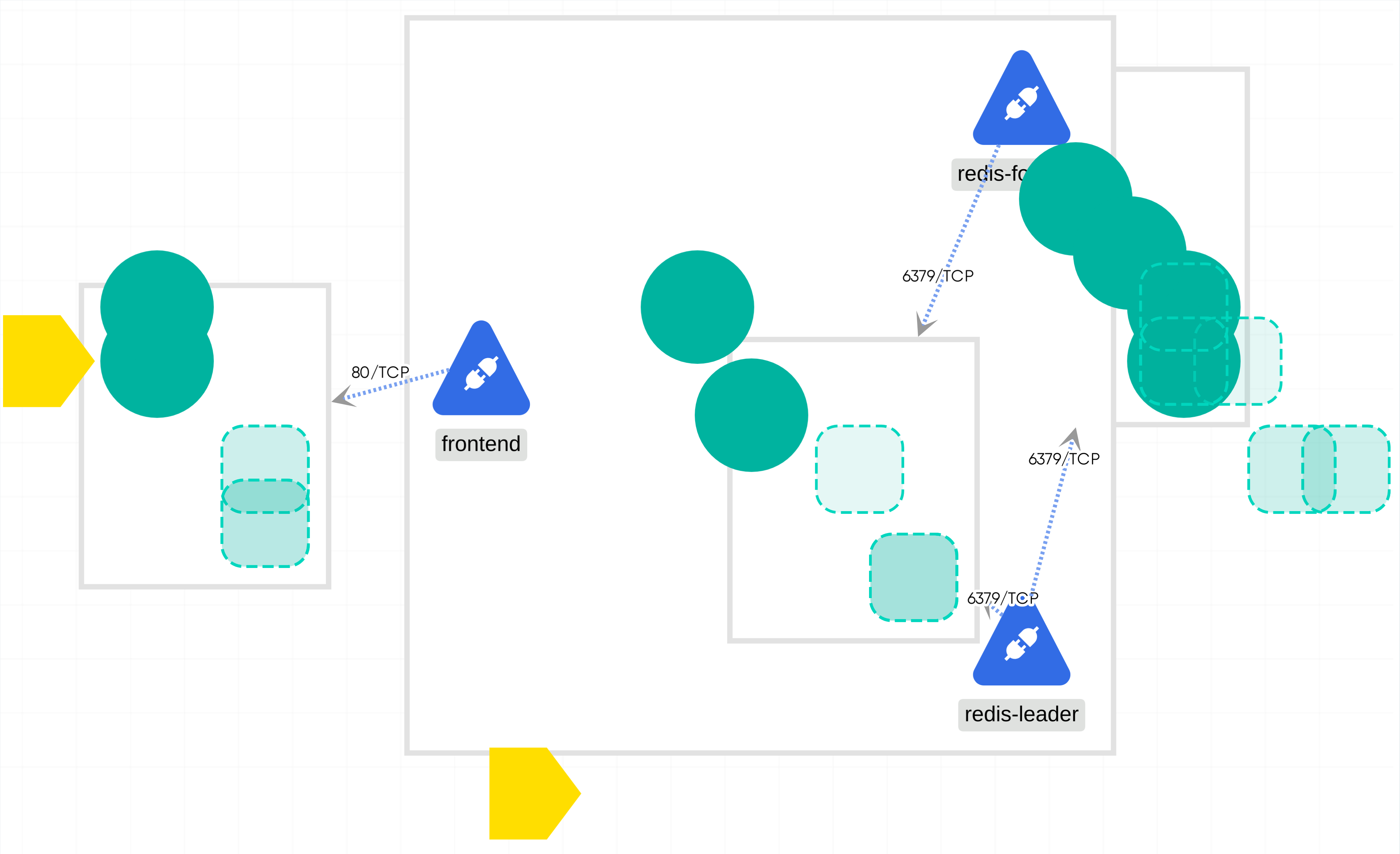

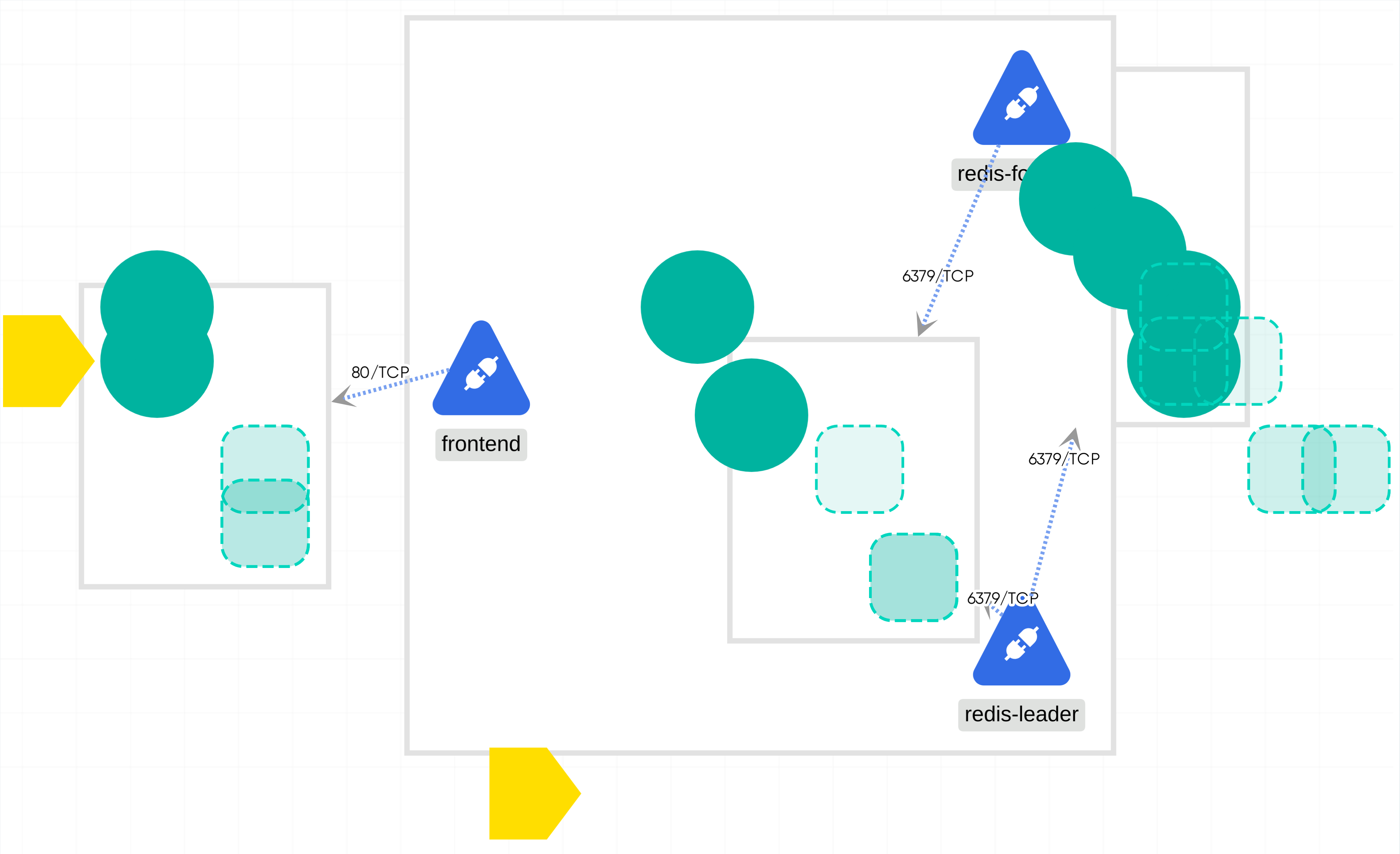

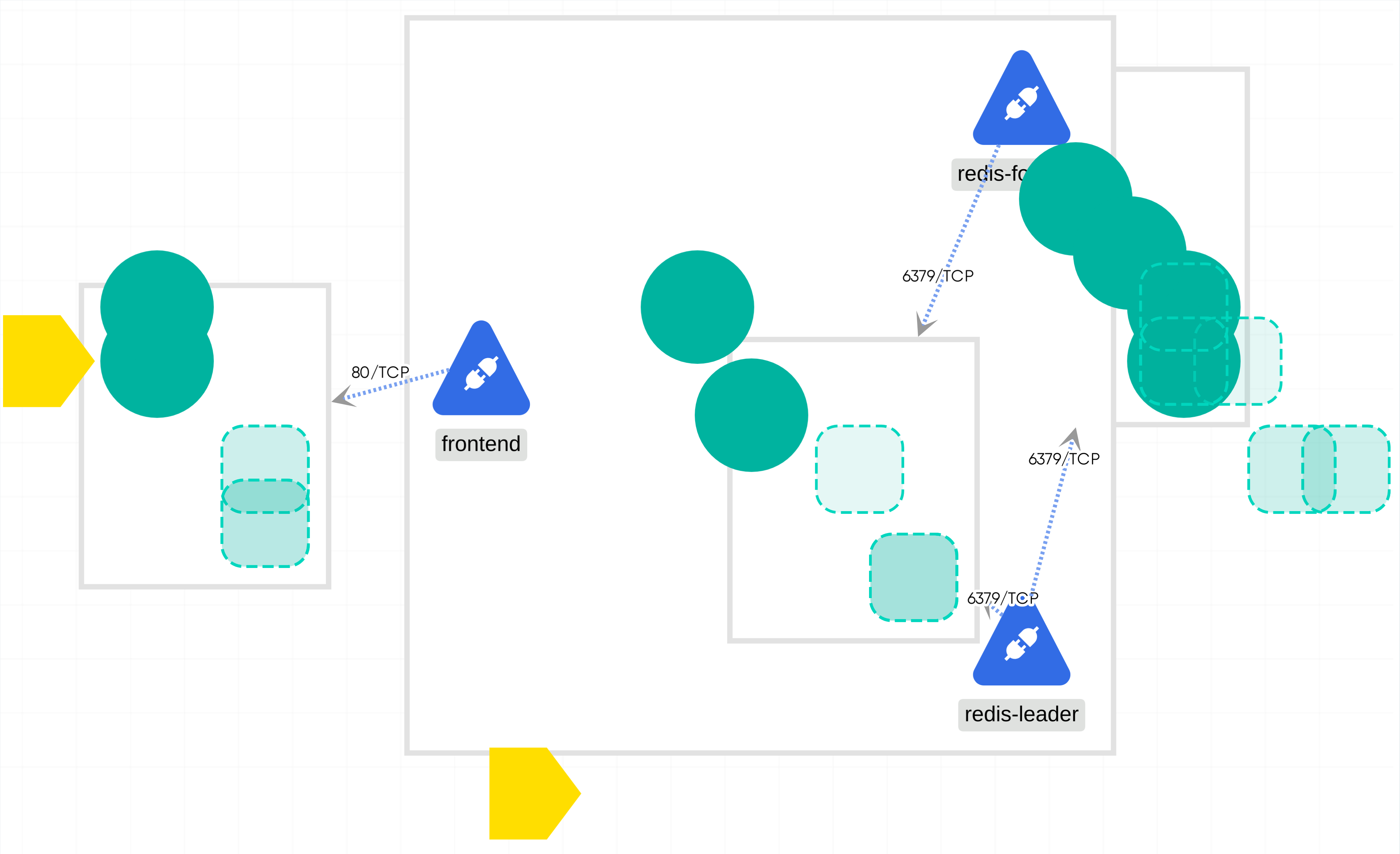

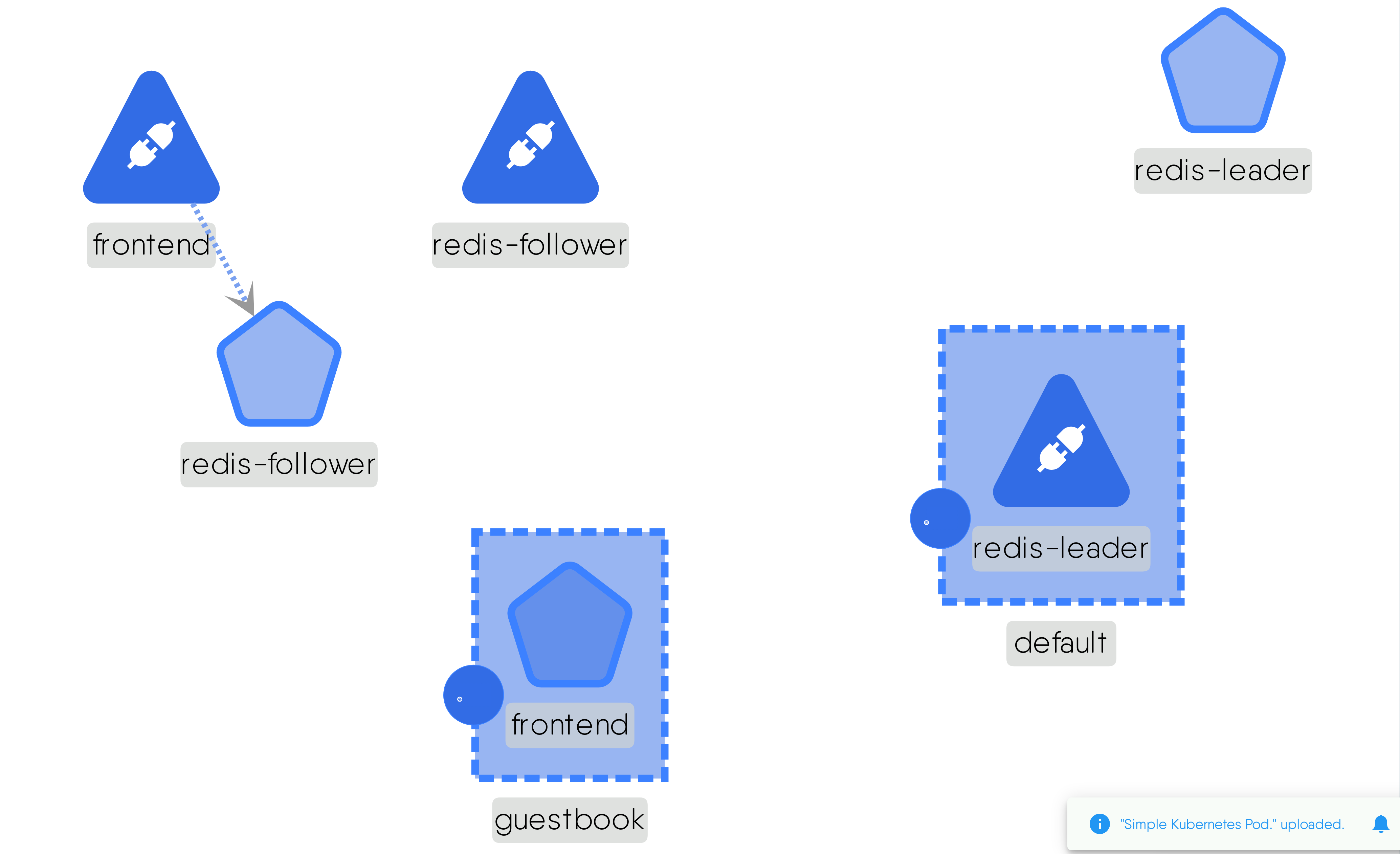

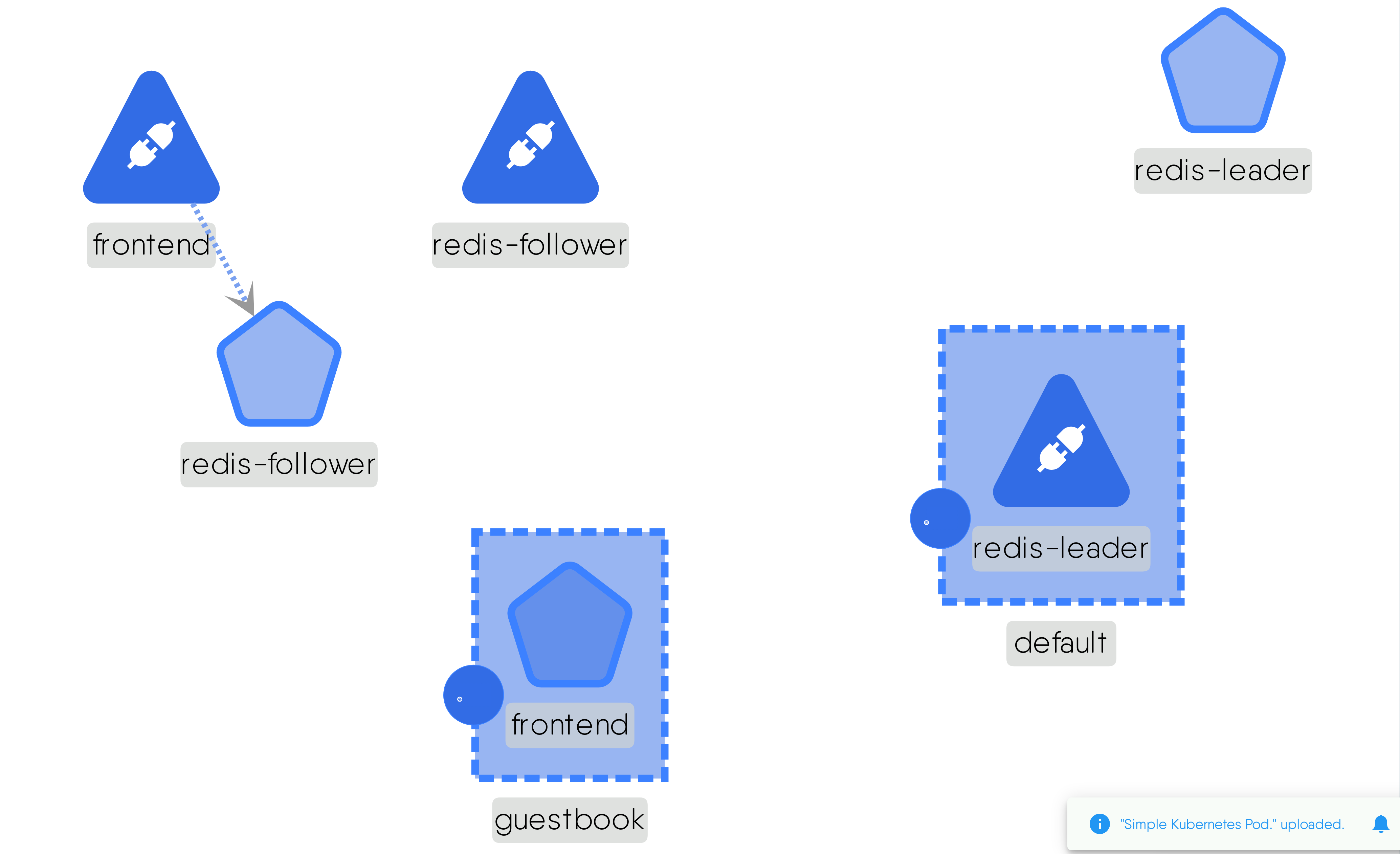

The GuestBook App is a cloud-native application designed using Kubernetes as the underlying orchestration and management system. It consists of various services and components deployed within Kubernetes namespaces. The default namespace represents the main environment where the application operates. The frontend-cyrdx service is responsible for handling frontend traffic and is deployed as a Kubernetes service with a selector for the guestbook application and frontend tier. The frontend-fsfct deployment runs multiple replicas of the frontend component, which utilizes the gb-frontend image and exposes port 80. The guestbook namespace serves as a logical grouping for components related to the GuestBook App. The redis-follower-armov service handles follower Redis instances for the backend, while the redis-follower-nwlew deployment manages multiple replicas of the follower Redis container. The redis-leader-fhxla deployment represents the leader Redis container, and the redis-leader-vjtmi service exposes it as a Kubernetes service. These components work together to create a distributed and scalable architecture for the GuestBook App, leveraging Kubernetes for container orchestration and management.

Read moreCaveats and Considerations

Networking should be properly configured to enable communication between the frontend and backend components of the app.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

GuestBook App

MESHERY4b31

RELATED PATTERNS

Istio Operator

MESHERY4a76

GUESTBOOK APP

Description

The GuestBook App is a cloud-native application designed using Kubernetes as the underlying orchestration and management system. It consists of various services and components deployed within Kubernetes namespaces. The default namespace represents the main environment where the application operates. The frontend-cyrdx service is responsible for handling frontend traffic and is deployed as a Kubernetes service with a selector for the guestbook application and frontend tier. The frontend-fsfct deployment runs multiple replicas of the frontend component, which utilizes the gb-frontend image and exposes port 80. The guestbook namespace serves as a logical grouping for components related to the GuestBook App. The redis-follower-armov service handles follower Redis instances for the backend, while the redis-follower-nwlew deployment manages multiple replicas of the follower Redis container. The redis-leader-fhxla deployment represents the leader Redis container, and the redis-leader-vjtmi service exposes it as a Kubernetes service. These components work together to create a distributed and scalable architecture for the GuestBook App, leveraging Kubernetes for container orchestration and management.

Read moreCaveats and Considerations

Networking should be properly configured to enable communication between the frontend and backend components of the app.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

GuestBook App (Copy)

MESHERY4263

RELATED PATTERNS

Istio Operator

MESHERY4a76

GUESTBOOK APP (COPY)

Description

The GuestBook App is a cloud-native application designed using Kubernetes as the underlying orchestration and management system. It consists of various services and components deployed within Kubernetes namespaces. The default namespace represents the main environment where the application operates. The frontend-cyrdx service is responsible for handling frontend traffic and is deployed as a Kubernetes service with a selector for the guestbook application and frontend tier. The frontend-fsfct deployment runs multiple replicas of the frontend component, which utilizes the gb-frontend image and exposes port 80. The guestbook namespace serves as a logical grouping for components related to the GuestBook App. The redis-follower-armov service handles follower Redis instances for the backend, while the redis-follower-nwlew deployment manages multiple replicas of the follower Redis container. The redis-leader-fhxla deployment represents the leader Redis container, and the redis-leader-vjtmi service exposes it as a Kubernetes service. These components work together to create a distributed and scalable architecture for the GuestBook App, leveraging Kubernetes for container orchestration and management.

Read moreCaveats and Considerations

Networking should be properly configured to enable communication between the frontend and backend components of the app.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Guestbook App (All-in-One)

MESHERY4b20

RELATED PATTERNS

Pod Readiness

MESHERY4b83

GUESTBOOK APP (ALL-IN-ONE)

Description

This is a sample guestbook app to demonstrate distributed systems

Caveats and Considerations

1. Ensure networking is set up properly. 2. Ensure enough disk space is available.

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

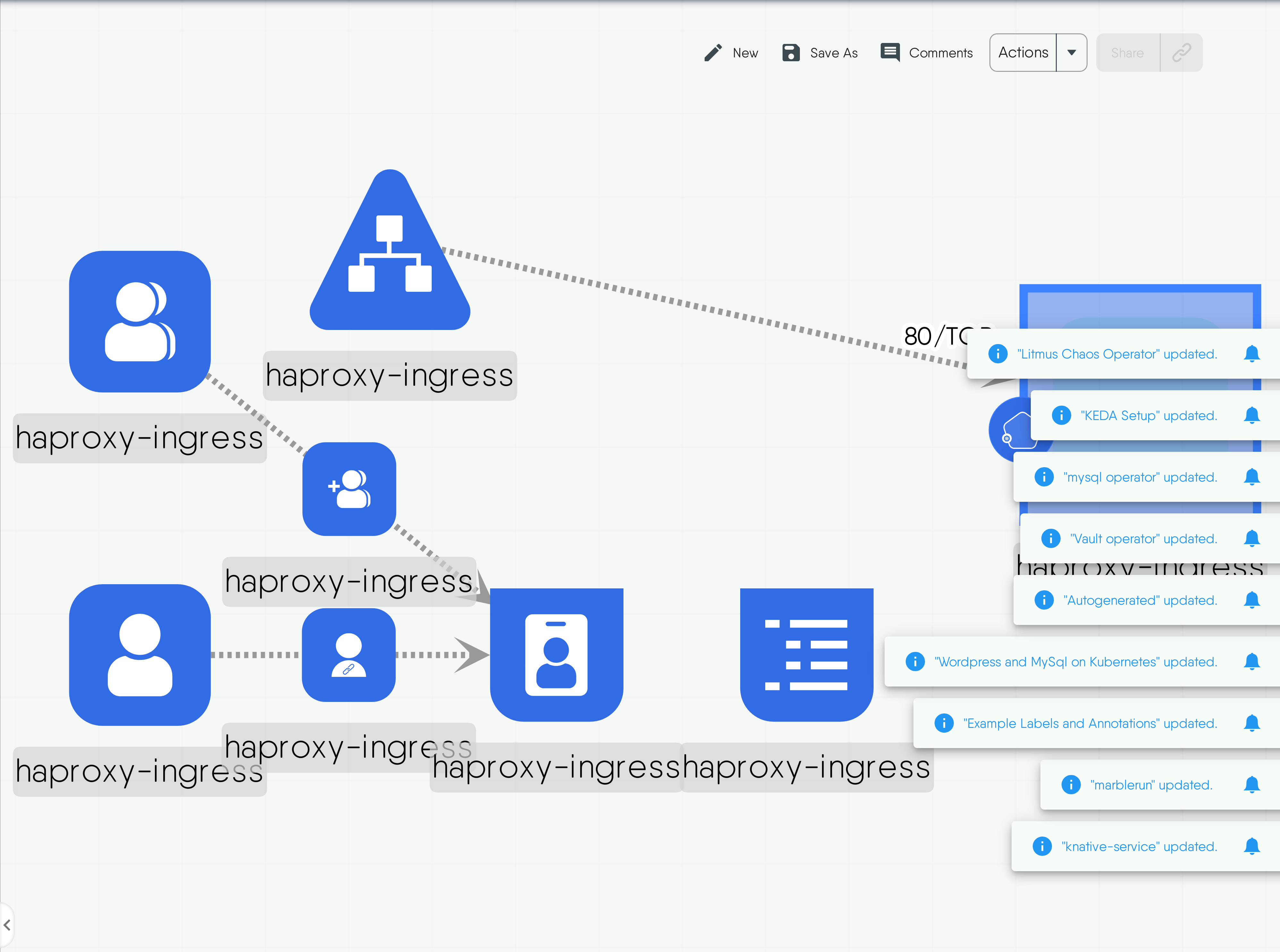

HAProxy_Ingress_Controller

MESHERY45dd

RELATED PATTERNS

Service Internal Traffic Policy

MESHERY41b6

HAPROXY_INGRESS_CONTROLLER

Description

HAProxy Ingress is a Kubernetes ingress controller: it configures a HAProxy instance to route incoming requests from an external network to the in-cluster applications. The routing configurations are built reading specs from the Kubernetes cluster. Updates made to the cluster are applied on the fly to the HAProxy instance.

Caveats and Considerations

Make sure that the paths in the Ingress are configured correctly. For more caveats and considerations, see the docs: https://haproxy-ingress.github.io/docs/

Technologies

Related Patterns

Service Internal Traffic Policy

MESHERY41b6

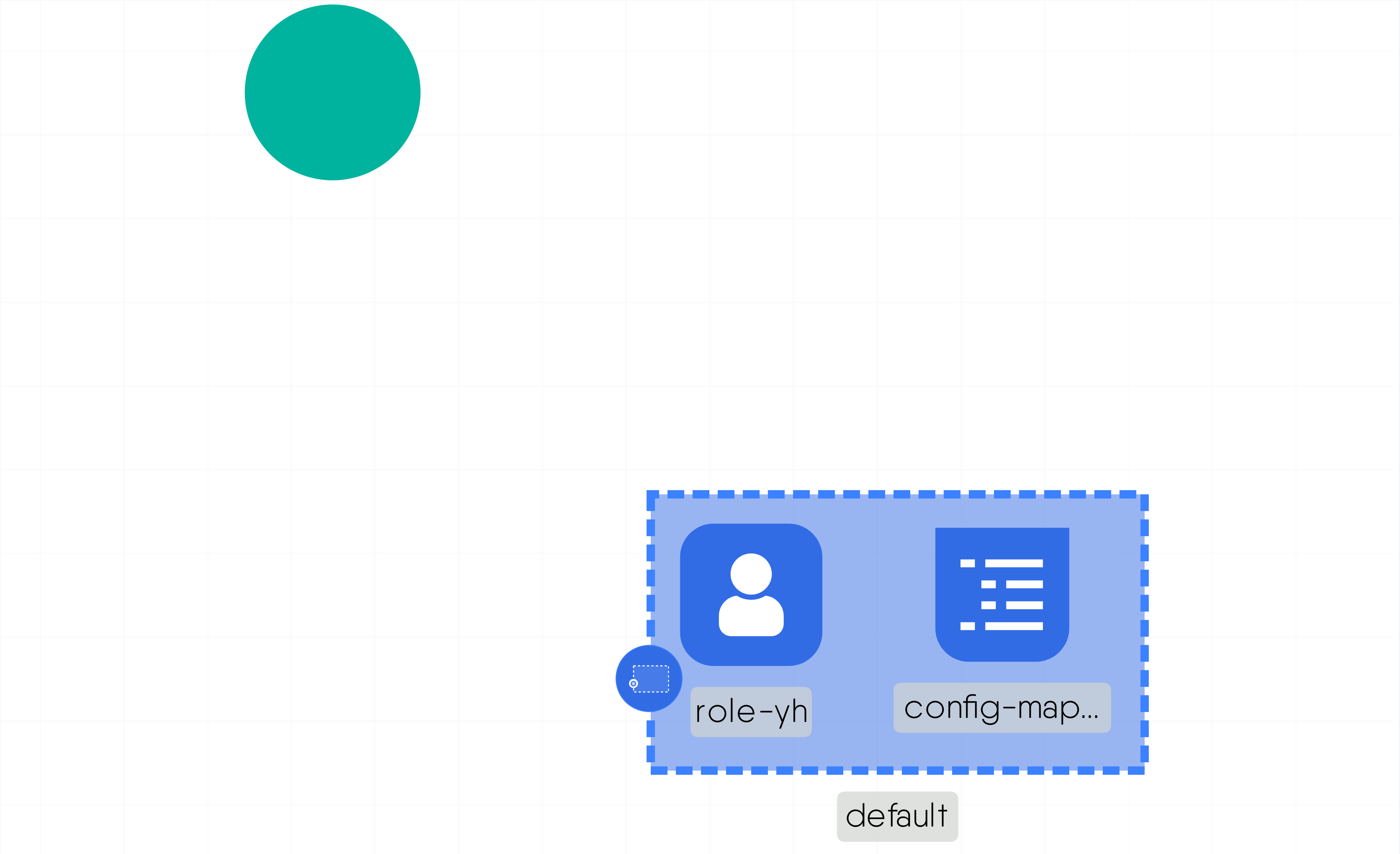

Hello WASM

MESHERY4255

RELATED PATTERNS

Pod Readiness

MESHERY4b83

HELLO WASM

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Hierarchical Parent Relationship

MESHERY4a65

RELATED PATTERNS

Istio Operator

MESHERY4a76

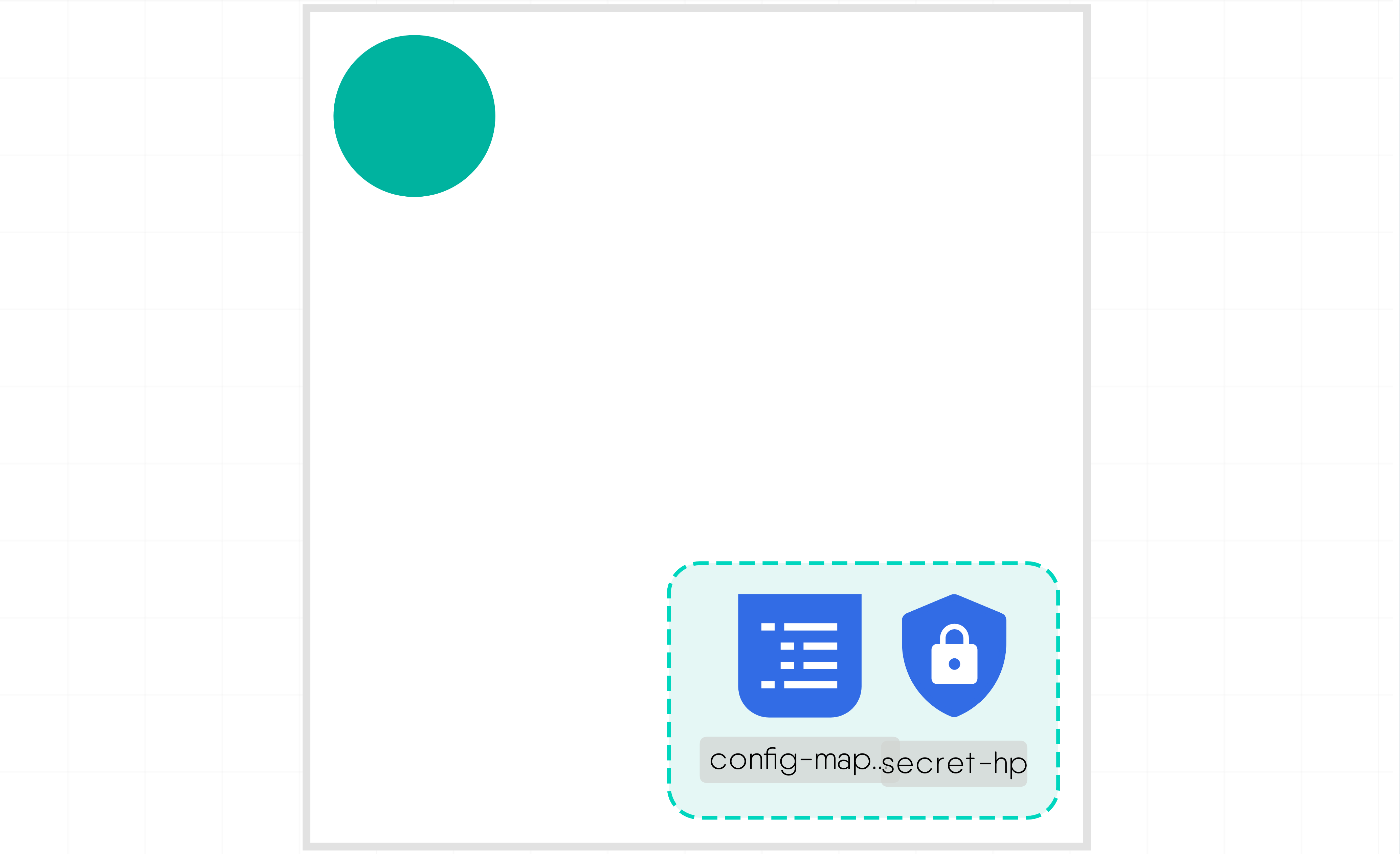

HIERARCHICAL PARENT RELATIONSHIP

Description

A relationship that defines whether a component can be a parent of other components. For example, a Namespace is the parent, and Role and ConfigMap are children.

Caveats and Considerations

""

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Hierarchical Inventory Relationship

MESHERY4c5a

RELATED PATTERNS

Istio Operator

MESHERY4a76

HIERARCHICAL INVENTORY RELATIONSHIP

Description

A hierarchical inventory relationship in which the configuration of the parent component is patched with the configuration of the child component. For example, the configuration of the Deployment (parent) component is patched with the configuration received from the ConfigMap (child) component.

Caveats and Considerations

NA

Technologies

Related Patterns

Istio Operator

MESHERY4a76

HorizontalPodAutoscaler

MESHERY41d1

RELATED PATTERNS

Autoscaling based on Metrics in GKE

MESHERY400b

HORIZONTALPODAUTOSCALER

Description

A HorizontalPodAutoscaler (HPA for short) automatically updates a workload resource (such as a Deployment or StatefulSet), with the aim of automatically scaling the workload to match demand. Horizontal scaling means that the response to increased load is to deploy more Pods. This is different from vertical scaling, which for Kubernetes would mean assigning more resources (for example: memory or CPU) to the Pods that are already running for the workload. If the load decreases, and the number of Pods is above the configured minimum, the HorizontalPodAutoscaler instructs the workload resource (the Deployment, StatefulSet, or other similar resource) to scale back down.
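For reference, a minimal HPA manifest might look like the sketch below. The HPA name, the target Deployment name `web`, the replica bounds, and the 50% CPU target are assumptions chosen for illustration and should be adapted to your workload.

```yaml
# Hypothetical HPA scaling a Deployment named "web" on CPU utilization.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web                  # workload resource to scale
  minReplicas: 1               # configured minimum mentioned above
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50
```

Utilization targets are computed against the CPU requests of the target Pods, so those Pods need CPU requests set.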

Caveats and Considerations

Modify the deployments and names according to your requirements.

Technologies

Related Patterns

Autoscaling based on Metrics in GKE

MESHERY400b

Install-Traefik-as-ingress-controller

MESHERY4796

RELATED PATTERNS

Service Internal Traffic Policy

MESHERY41b6

INSTALL-TRAEFIK-AS-INGRESS-CONTROLLER

Description

This design creates a ServiceAccount, DaemonSet, Service, ClusterRole, and ClusterRoleBinding resources for Traefik. The DaemonSet ensures that a single Traefik instance is deployed on each node in the cluster, facilitating load balancing and routing of incoming traffic. The Service allows external traffic to reach Traefik, while the ClusterRole and ClusterRoleBinding provide the necessary permissions for Traefik to interact with Kubernetes resources such as services, endpoints, and ingresses. Overall, this setup enables Traefik to efficiently manage ingress traffic within the Kubernetes environment, providing features like routing, load balancing, and SSL termination.

Caveats and Considerations

- Resource Utilization: Ensure monitoring and scalability to manage resource consumption across nodes, especially in large clusters.
- Security Measures: Implement strict access controls and firewall rules to protect Traefik's admin port (8080) from unauthorized access.
- Configuration Complexity: Understand Traefik's configuration intricacies for routing rules and SSL termination to avoid misconfigurations.
- Compatibility Testing: Regularly test Traefik's compatibility with Kubernetes and other cluster components before upgrading versions.
- High Availability Setup: Employ strategies like pod anti-affinity rules to ensure Traefik's availability and uptime.
- Performance Optimization: Conduct performance tests to minimize latency and overhead introduced by Traefik in the data path.

Technologies

Related Patterns

Service Internal Traffic Policy

MESHERY41b6

Istio BookInfo Application

MESHERY4bda

RELATED PATTERNS

Pod Readiness

MESHERY4b83

ISTIO BOOKINFO APPLICATION

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Istio Control Plane

MESHERY4a09

RELATED PATTERNS

Istio Operator

MESHERY4a76

ISTIO CONTROL PLANE

Description

This design includes an Istio control plane, which will deploy to the istio-system namespace by default.

Caveats and Considerations

No namespaces are labeled for automatic sidecar injection in this design.
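Istio enables automatic sidecar injection per namespace through the `istio-injection: enabled` label. If you want workloads in a namespace to receive sidecars, a sketch like the following could be applied alongside this design; the namespace name `demo` is hypothetical.

```yaml
# Hypothetical namespace opted in to automatic sidecar injection.
apiVersion: v1
kind: Namespace
metadata:
  name: demo
  labels:
    istio-injection: enabled
```

Pods that were already running in the namespace need to be recreated before they pick up a sidecar.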

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Istio HTTP Header Filter (Clone)

MESHERY4bfd

RELATED PATTERNS

Pod Readiness

MESHERY4b83

ISTIO HTTP HEADER FILTER (CLONE)

Description

This is a test design

Caveats and Considerations

NA

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Istio Operator

MESHERY4a76

RELATED PATTERNS

Fault-tolerant batch workloads on GKE

MESHERY4b55

ISTIO OPERATOR

Description

This YAML defines a Kubernetes Deployment for the Istio Operator within the istio-operator namespace. The deployment ensures a single replica of the Istio Operator pod is always running, which is managed by a service account named istio-operator. The deployment's metadata includes the namespace and the deployment name. The pod selector matches pods with the label name: istio-operator, ensuring the correct pods are managed. The pod template specifies metadata and details for the containers, including the container name istio-operator and the image gcr.io/istio-testing/operator:1.5-dev, which runs the istio-operator command with the server argument.

Caveats and Considerations

1. Namespace Configuration: Ensure that the istio-operator namespace exists before applying this deployment. If the namespace is not present, the deployment will fail.

2. Image Version: The image specified (gcr.io/istio-testing/operator:1.5-dev) is a development version. It is crucial to verify the stability and compatibility of this version for production environments. Using a stable release version is generally recommended.

3. Resource Allocation: The resource limits and requests are set to specific values (200m CPU, 256Mi memory for limits; 50m CPU, 128Mi memory for requests). These values should be reviewed and adjusted based on the actual resource availability and requirements of your Kubernetes cluster to prevent resource contention or overallocation.

4. Leader Election: The environment variables include LEADER_ELECTION_NAMESPACE, which is derived from the pod's namespace. Ensure that the leader election mechanism is properly configured and that only one instance of the operator becomes the leader to avoid conflicts.

5. Security Context: The deployment does not specify a security context for the container. It is advisable to review and define appropriate security contexts to enhance the security posture of the deployment, such as running the container as a non-root user.
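
The caveats above can be sketched as a manifest. This is a minimal sketch assembled from the description, not the design's actual file. The namespace, selector label, service account, image, and resource values come from the text above. The Deployment name is an assumption, the securityContext block is an added suggestion that the original design does not contain, and the container command is left out.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: istio-operator            # assumption: name not stated in the description
  namespace: istio-operator       # must exist before applying (caveat 1)
spec:
  replicas: 1
  selector:
    matchLabels:
      name: istio-operator
  template:
    metadata:
      labels:
        name: istio-operator
    spec:
      serviceAccountName: istio-operator
      containers:
        - name: istio-operator
          # Development image named in the description; prefer a stable release (caveat 2).
          image: gcr.io/istio-testing/operator:1.5-dev
          resources:              # values from caveat 3; tune for your cluster
            limits:
              cpu: 200m
              memory: 256Mi
            requests:
              cpu: 50m
              memory: 128Mi
          env:
            # Derived from the pod's own namespace via the downward API (caveat 4).
            - name: LEADER_ELECTION_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          # Assumption: not in the original design (caveat 5).
          # Confirm the image can run as a non-root user before enabling.
          securityContext:
            runAsNonRoot: true
            allowPrivilegeEscalation: false
```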

Technologies

Related Patterns

Fault-tolerant batch workloads on GKE

MESHERY4b55

JAX 'Hello World' using NVIDIA GPUs A100-80GB on GKE

MESHERY4cfd

RELATED PATTERNS

Istio Operator

MESHERY4a76

JAX 'HELLO WORLD' USING NVIDIA GPUS A100-80GB ON GKE

Description

JAX is a rapidly growing Python library for high-performance numerical computing and machine learning (ML) research. With applications in large language models, drug discovery, physics ML, reinforcement learning, and neural graphics, JAX has seen incredible adoption in the past few years. JAX offers numerous benefits for developers and researchers, including an easy-to-use NumPy API, automatic differentiation, and optimization. JAX also includes support for distributed processing across multi-node and multi-GPU systems in a few lines of code, with accelerated performance through XLA-optimized kernels on NVIDIA GPUs. This design shows how to run JAX multi-GPU, multi-node applications on GKE (Google Kubernetes Engine) using the A2 ultra machine series, powered by NVIDIA A100 80GB Tensor Core GPUs. It runs a simple Hello World application on 4 nodes with 8 processes and 8 GPUs each.

Caveats and Considerations

Ensure networking is set up properly and the correct annotations are applied to each resource.

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Jaeger operator

MESHERY4ab9

RELATED PATTERNS

Istio Operator

MESHERY4a76

JAEGER OPERATOR

Description

This YAML configuration defines a Kubernetes Deployment for the Jaeger Operator. This Deployment, named "jaeger-operator," specifies that a container will be created using the jaegertracing/jaeger-operator:master image. The container runs with the argument "start," which initiates the operator's main process. Additionally, the container is configured with an environment variable, LOG-LEVEL, set to "debug," enabling detailed logging for troubleshooting and monitoring purposes. This setup allows the Jaeger Operator to manage Jaeger tracing instances within the Kubernetes cluster, ensuring efficient deployment, scaling, and maintenance of distributed tracing components.

Caveats and Considerations

1. Image Tag: The image tag master indicates that the latest, potentially unstable version of the Jaeger Operator is being used. For production environments, it's safer to use a specific, stable version to avoid unexpected issues.

2. Resource Limits and Requests: The deployment does not specify resource requests and limits for the container. It's crucial to define these to ensure that the Jaeger Operator has enough CPU and memory to function correctly, while also preventing it from consuming excessive resources on the cluster.

3. Replica Count: The spec section does not specify the number of replicas for the deployment. By default, Kubernetes will create one replica, which might not provide high availability. Consider increasing the replica count for redundancy.

4. Namespace: The deployment does not specify a namespace. Ensure that the deployment is applied to the appropriate namespace, particularly if you have a multi-tenant cluster.

5. Security Context: There is no security context defined. Adding a security context can enhance the security posture of the container by restricting permissions and enforcing best practices like running as a non-root user.
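
A hardened variant that addresses these caveats might look like the sketch below. It is illustrative only: the namespace, labels, pinned image tag, and resource values are assumptions chosen for the example, not values from the design. Substitute a release tag you have verified yourself.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jaeger-operator
  namespace: observability          # assumption: hypothetical namespace (caveat 4)
spec:
  replicas: 1                       # stated explicitly instead of relying on the default (caveat 3)
  selector:
    matchLabels:
      name: jaeger-operator         # assumption: label not stated in the description
  template:
    metadata:
      labels:
        name: jaeger-operator
    spec:
      containers:
        - name: jaeger-operator
          # Assumption: example pinned tag replacing "master" (caveat 1).
          image: jaegertracing/jaeger-operator:1.57.0
          args: ["start"]
          env:
            - name: LOG-LEVEL
              value: debug
          resources:                # assumption: placeholder values (caveat 2)
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 500m
              memory: 512Mi
          securityContext:          # assumption: not in the original design (caveat 5)
            runAsNonRoot: true
            allowPrivilegeEscalation: false
```

Raising replicas above one is only useful if the operator's leader election is enabled, so that a single instance acts at a time.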

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Jenkins operator

MESHERY42f3

RELATED PATTERNS

Istio Operator

MESHERY4a76

JENKINS OPERATOR

Description

This YAML configuration defines a Kubernetes Deployment for the Jenkins Operator, ensuring the deployment of a single instance within the cluster. It specifies metadata including labels and annotations for identification and description purposes. The deployment is set to run one replica of the Jenkins Operator container, configured with security settings to run as a non-root user and disallow privilege escalation. Environment variables are provided for dynamic configuration within the container, such as the namespace and Pod name. Resource requests and limits are also defined to manage CPU and memory allocation effectively. Overall, this Deployment aims to ensure the smooth and secure operation of the Jenkins Operator within the Kubernetes environment.

Caveats and Considerations

1. Resource Allocation: The CPU and memory requests and limits defined in the configuration should be carefully adjusted based on the workload and available resources in the Kubernetes cluster to avoid resource contention and potential performance issues.

2. Image Repository Access: Ensure that the container image specified in the configuration (myregistry/jenkins-operator:latest) is accessible from the Kubernetes cluster. Proper image pull policies and authentication mechanisms should be configured to allow the Kubernetes nodes to pull the image from the specified registry.

3. Security Context: The security settings configured in the security context of the container (runAsNonRoot, allowPrivilegeEscalation) are essential for maintaining the security posture of the Kubernetes cluster. Ensure that these settings align with your organization's security policies and best practices.

4. Environment Variables: The environment variables defined in the configuration, such as WATCH_NAMESPACE, POD_NAME, OPERATOR_NAME, and PLATFORM_TYPE, are used to dynamically configure the Jenkins Operator container. Ensure that these variables are correctly set to provide the necessary context and functionality to the operator.
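
The image access and environment variable caveats can be illustrated with a short manifest. This is a sketch under stated assumptions, not the design's file: the pull secret name, labels, and the OPERATOR_NAME value are hypothetical, and PLATFORM_TYPE is left out because its value is not given in the description.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins-operator
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins-operator            # assumption: label not stated in the description
  template:
    metadata:
      labels:
        name: jenkins-operator
    spec:
      imagePullSecrets:
        # Hypothetical Secret of type kubernetes.io/dockerconfigjson (caveat 2).
        - name: myregistry-credentials
      containers:
        - name: jenkins-operator
          image: myregistry/jenkins-operator:latest
          imagePullPolicy: Always       # sensible with a mutable tag such as "latest"
          securityContext:              # settings named in caveat 3
            runAsNonRoot: true
            allowPrivilegeEscalation: false
          env:
            # Namespace and pod name injected through the downward API (caveat 4).
            - name: WATCH_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: OPERATOR_NAME
              value: jenkins-operator   # assumption: illustrative value
```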

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Keycloak operator

MESHERY4f70

RELATED PATTERNS

Istio Operator

MESHERY4a76

KEYCLOAK OPERATOR

Description

This YAML snippet describes a Kubernetes Deployment for a Keycloak operator, ensuring a single replica. It specifies labels and annotations for metadata, including a service account. The pod template defines a container running the Keycloak operator image, with environment variables set for namespace and pod name retrieval. Security context settings prevent privilege escalation. Probes are configured for liveness and readiness checks on port 8081, with resource requests and limits ensuring proper resource allocation for the container.

Caveats and Considerations

1. Single Replica: The configuration specifies only one replica, which means there's no built-in redundancy or high availability. Consider adjusting the replica count based on your availability requirements.

2. Resource Allocation: Resource requests and limits are set for CPU and memory. Ensure these values are appropriate for your workload and cluster capacity to avoid performance issues or resource contention.

3. Security Context: The security context is configured to run the container as a non-root user and disallow privilege escalation. Ensure these settings align with your security policies and container requirements.

4. Probes Configuration: Liveness and readiness probes are set up to check the health of the container on port 8081. Ensure that the specified endpoints (/healthz and /readyz) are correctly implemented in the application code.

5. Namespace Configuration: The WATCH_NAMESPACE environment variable is set to an empty string, potentially causing the operator to watch all namespaces. Ensure this behavior aligns with your intended scope of operation and namespace isolation requirements.
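
The probe and namespace caveats are easier to review next to a concrete manifest. The sketch below is an illustration, not the design's file: the image reference is a placeholder, the labels and probe timings are assumptions, and WATCH_NAMESPACE is shown restricted to the operator's own namespace rather than the empty string the design uses.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: keycloak-operator
spec:
  replicas: 1
  selector:
    matchLabels:
      app: keycloak-operator        # assumption: label not stated in the description
  template:
    metadata:
      labels:
        app: keycloak-operator
    spec:
      containers:
        - name: keycloak-operator
          # Placeholder: the description does not name the image.
          image: registry.example.com/keycloak-operator:TAG
          env:
            # Scope the operator to its own namespace instead of "" (caveat 5).
            - name: WATCH_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          securityContext:
            runAsNonRoot: true
            allowPrivilegeEscalation: false
          livenessProbe:            # endpoint and port from caveat 4
            httpGet:
              path: /healthz
              port: 8081
            initialDelaySeconds: 15 # assumption: illustrative timing
            periodSeconds: 20
          readinessProbe:
            httpGet:
              path: /readyz
              port: 8081
            initialDelaySeconds: 5  # assumption: illustrative timing
            periodSeconds: 10
```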

Technologies

Related Patterns

Istio Operator

MESHERY4a76

Kubernetes Deployment with Azure File Storage

MESHERY487a

RELATED PATTERNS

Pod Readiness

MESHERY4b83

KUBERNETES DEPLOYMENT WITH AZURE FILE STORAGE

Description

This design sets up a Kubernetes Deployment that runs two NGINX containers. Each container mounts an Azure File storage volume for shared data. The NGINX instances serve web content from the Azure File share, giving the web servers scalable, shared storage.

Caveats and Considerations

1. Azure Configuration: Ensure that your Azure configuration, including secrets, is correctly set up to access the Azure File share.

2. Data Sharing: Multiple NGINX containers share the same storage. Be cautious when handling write operations to avoid conflicts or data corruption.

3. Scalability: Consider the scalability of both NGINX and Azure File storage to meet your application's demands.

4. Security: Safeguard the secrets used to access Azure resources and limit access to only authorized entities.

5. Pod Recovery: Ensure that the pod recovery strategy is well-defined to handle disruptions or node failures.

6. Azure Costs: Monitor and manage costs associated with Azure File storage, as it may incur charges based on usage.

7. Maintenance: Plan for regular maintenance and updates of both NGINX and Azure configurations to address security and performance improvements.

8. Monitoring: Implement monitoring and alerts for both the NGINX containers and Azure File storage to proactively detect and address issues.

9. Backup and Disaster Recovery: Establish a backup and disaster recovery plan to safeguard data stored in Azure File storage.
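
A minimal sketch of how such a Deployment can mount an Azure File share, using the in-tree azureFile volume type. The names are hypothetical, and the sketch assumes the two NGINX containers are two replicas of one pod template, which the description does not state outright. The referenced Secret is expected to hold the keys azurestorageaccountname and azurestorageaccountkey.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-azure-file              # hypothetical name
spec:
  replicas: 2                         # assumption: two NGINX instances as replicas
  selector:
    matchLabels:
      app: nginx-azure-file
  template:
    metadata:
      labels:
        app: nginx-azure-file
    spec:
      containers:
        - name: nginx
          image: nginx:stable
          ports:
            - containerPort: 80
          volumeMounts:
            - name: azure-share
              mountPath: /usr/share/nginx/html
              readOnly: true          # avoids write conflicts between replicas (caveat 2)
      volumes:
        - name: azure-share
          azureFile:
            secretName: azure-file-secret   # hypothetical Secret with the storage account credentials
            shareName: web-content          # hypothetical Azure File share name
            readOnly: true
```

Mounting the share read-only is one way to sidestep the data sharing caveat when the web servers only need to serve content.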

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Kubernetes Engine Training Example

MESHERY40f1

RELATED PATTERNS

Pod Readiness

MESHERY4b83

KUBERNETES ENGINE TRAINING EXAMPLE

Description

""

Caveats and Considerations

""

Technologies

Related Patterns

Pod Readiness

MESHERY4b83

Kubernetes Metrics Server Configuration

MESHERY4892

RELATED PATTERNS

Kubernetes cronjob

MESHERY4483

KUBERNETES METRICS SERVER CONFIGURATION

Description

This design configures the Kubernetes Metrics Server for monitoring cluster-wide resource metrics. It defines a Kubernetes Deployment, Role-Based Access Control (RBAC) rules, and other resources for the Metrics Server's deployment and operation.
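
As an illustration of the RBAC rules such a design needs, the sketch below shows a ClusterRole that lets the Metrics Server read node and pod data. The rules resemble those in the upstream Metrics Server manifests, but the role name here is hypothetical and the design's actual rules may differ.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: metrics-server-reader     # hypothetical name
rules:
  # Read resource metrics exposed by each node's kubelet.
  - apiGroups: [""]
    resources: ["nodes/metrics"]
    verbs: ["get"]
  # Discover the pods and nodes to collect metrics for.
  - apiGroups: [""]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
```

A ClusterRoleBinding would then attach this role to the service account the Metrics Server runs as.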

Caveats and Considerations